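MongoDB provides two ways to create a collection: implicit creation and explicit creation.

Method 1: implicit creation (recommended)

When you insert a document into a collection that does not exist, MongoDB creates the collection automatically.

Example

Implicitly create the users collection in the current database (db):

```javascript
db.users.insertOne({ name: "Alice", age: 30 })
```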
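A toy model of what implicit creation means, as a sketch only: ToyDatabase and its members are hypothetical and unrelated to MongoDB's real classes. Inserting into a collection that does not exist first creates it, then stores the document.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Hypothetical, heavily simplified model: a database maps collection names
// to lists of documents (documents are plain strings here).
struct ToyDatabase {
    std::map<std::string, std::vector<std::string>> collections;

    // Implicit creation: if the collection is missing, create it, then insert.
    void insertOne(const std::string& coll, const std::string& doc) {
        auto it = collections.find(coll);
        if (it == collections.end()) {
            it = collections.emplace(coll, std::vector<std::string>{}).first;
        }
        it->second.push_back(doc);
    }

    bool hasCollection(const std::string& coll) const {
        return collections.count(coll) != 0;
    }
};
```

In the real server this path goes through the insert command, which creates the collection on demand before writing the document.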

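Method 2: explicit creation (custom configuration)

Use the createCollection() method to create a collection explicitly; it accepts configuration options (for example, size limits for capped collections or index settings).

Command syntax

```javascript
db.createCollection(<collectionName>, { <options> })
```

Common options

- capped: whether the collection is a capped (fixed-size) collection (default false).
- size: maximum size in bytes of a capped collection; required when capped is true.
- max: maximum number of documents in a capped collection; like size, only allowed when capped is true.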
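A simplified in-memory model of how these three options interact. CappedCollection is hypothetical: it assumes oldest-first eviction whenever either limit is exceeded, and it rejects a single document larger than size, in the spirit of the "object to insert exceeds cappedMaxSize" check in WiredTigerRecordStore::_insertRecords shown later. Real capped collections are implemented inside the storage engine, not like this.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <string>

// Toy capped collection: documents kept in insertion order; the oldest are
// evicted once either limit is exceeded. maxBytes plays the role of `size`,
// maxDocs the role of `max` (0 means "no document limit").
class CappedCollection {
public:
    CappedCollection(std::size_t maxBytes, std::size_t maxDocs)
        : maxBytes_(maxBytes), maxDocs_(maxDocs) {
        assert(maxBytes_ > 0);  // `size` is mandatory for capped collections
    }

    // Returns false if the document alone is larger than the size limit.
    bool insert(const std::string& doc) {
        if (doc.size() > maxBytes_) return false;
        docs_.push_back(doc);
        bytes_ += doc.size();
        // Evict from the front (oldest first) until both limits hold again.
        while (!docs_.empty() &&
               (bytes_ > maxBytes_ || (maxDocs_ != 0 && docs_.size() > maxDocs_))) {
            bytes_ -= docs_.front().size();
            docs_.pop_front();
        }
        return true;
    }

    const std::deque<std::string>& docs() const { return docs_; }
    std::size_t bytes() const { return bytes_; }

private:
    std::size_t maxBytes_;
    std::size_t maxDocs_;
    std::size_t bytes_ = 0;
    std::deque<std::string> docs_;
};
```

For example, with size 10 and max 2, inserting "aaaa", "bbbb" and "cc" evicts "aaaa" because the document limit is reached first.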

In mongo/db/commands/dbcommands.cpp, the CmdCreate object carries out the create-collection command:

```cpp
/* create collection */
class CmdCreate : public BasicCommand {
public:
    CmdCreate() : BasicCommand("create") {}

    AllowedOnSecondary secondaryAllowed(ServiceContext*) const override {
        return AllowedOnSecondary::kNever;
    }

    virtual bool adminOnly() const {
        return false;
    }

    virtual bool supportsWriteConcern(const BSONObj& cmd) const override {
        return true;
    }

    std::string help() const override {
        return str::stream()
            << "explicitly creates a collection or view\n"
            << "{\n"
            << " create: <string: collection or view name> [,\n"
            << " capped: <bool: capped collection>,\n"
            << " autoIndexId: <bool: automatic creation of _id index>,\n"
            << " idIndex: <document: _id index specification>,\n"
            << " size: <int: size in bytes of the capped collection>,\n"
            << " max: <int: max number of documents in the capped collection>,\n"
            << " storageEngine: <document: storage engine configuration>,\n"
            << " validator: <document: validation rules>,\n"
            << " validationLevel: <string: validation level>,\n"
            << " validationAction: <string: validation action>,\n"
            << " indexOptionDefaults: <document: default configuration for indexes>,\n"
            << " viewOn: <string: name of source collection or view>,\n"
            << " pipeline: <array<object>: aggregation pipeline stage>,\n"
            << " collation: <document: default collation for the collection or view>,\n"
            << " writeConcern: <document: write concern expression for the operation>]\n"
            << "}";
    }

    virtual Status checkAuthForCommand(Client* client,
                                       const std::string& dbname,
                                       const BSONObj& cmdObj) const {
        const NamespaceString nss(parseNs(dbname, cmdObj));
        return AuthorizationSession::get(client)->checkAuthForCreate(nss, cmdObj, false);
    }

    virtual bool run(OperationContext* opCtx,
                     const string& dbname,
                     const BSONObj& cmdObj,
                     BSONObjBuilder& result) {
        IDLParserErrorContext ctx("create");
        CreateCommand cmd = CreateCommand::parse(ctx, cmdObj);
        const NamespaceString ns = cmd.getNamespace();

        if (cmd.getAutoIndexId()) {
            const char* deprecationWarning =
                "the autoIndexId option is deprecated and will be removed in a future release";
            warning() << deprecationWarning;
            result.append("note", deprecationWarning);
        }

        // Ensure that the 'size' field is present if 'capped' is set to true.
        if (cmd.getCapped()) {
            uassert(ErrorCodes::InvalidOptions,
                    str::stream() << "the 'size' field is required when 'capped' is true",
                    cmd.getSize());
        }

        // If the 'size' or 'max' fields are present, then 'capped' must be set to true.
        if (cmd.getSize() || cmd.getMax()) {
            uassert(ErrorCodes::InvalidOptions,
                    str::stream() << "the 'capped' field needs to be true when either the 'size'"
                                  << " or 'max' fields are present",
                    cmd.getCapped());
        }

        // The 'temp' field is only allowed to be used internally and isn't available to clients.
        if (cmd.getTemp()) {
            uassert(ErrorCodes::InvalidOptions,
                    str::stream() << "the 'temp' field is an invalid option",
                    opCtx->getClient()->isInDirectClient() ||
                        (opCtx->getClient()->session()->getTags() |
                         transport::Session::kInternalClient));
        }

        // Validate _id index spec and fill in missing fields.
        if (cmd.getIdIndex()) {
            auto idIndexSpec = *cmd.getIdIndex();

            uassert(ErrorCodes::InvalidOptions,
                    str::stream() << "'idIndex' is not allowed with 'viewOn': " << idIndexSpec,
                    !cmd.getViewOn());

            uassert(ErrorCodes::InvalidOptions,
                    str::stream() << "'idIndex' is not allowed with 'autoIndexId': "
                                  << idIndexSpec,
                    !cmd.getAutoIndexId());

            // Perform index spec validation.
            idIndexSpec = uassertStatusOK(index_key_validate::validateIndexSpec(
                opCtx, idIndexSpec, serverGlobalParams.featureCompatibility));
            uassertStatusOK(index_key_validate::validateIdIndexSpec(idIndexSpec));

            // Validate or fill in _id index collation.
            std::unique_ptr<CollatorInterface> defaultCollator;
            if (cmd.getCollation()) {
                auto collatorStatus = CollatorFactoryInterface::get(opCtx->getServiceContext())
                                          ->makeFromBSON(*cmd.getCollation());
                uassertStatusOK(collatorStatus.getStatus());
                defaultCollator = std::move(collatorStatus.getValue());
            }

            idIndexSpec = uassertStatusOK(index_key_validate::validateIndexSpecCollation(
                opCtx, idIndexSpec, defaultCollator.get()));

            std::unique_ptr<CollatorInterface> idIndexCollator;
            if (auto collationElem = idIndexSpec["collation"]) {
                auto collatorStatus = CollatorFactoryInterface::get(opCtx->getServiceContext())
                                          ->makeFromBSON(collationElem.Obj());
                // validateIndexSpecCollation() should have checked that the _id index collation
                // spec is valid.
                invariant(collatorStatus.isOK());
                idIndexCollator = std::move(collatorStatus.getValue());
            }
            if (!CollatorInterface::collatorsMatch(defaultCollator.get(), idIndexCollator.get())) {
                uasserted(ErrorCodes::BadValue,
                          "'idIndex' must have the same collation as the collection.");
            }

            // Remove "idIndex" field from command.
            auto resolvedCmdObj = cmdObj.removeField("idIndex");

            uassertStatusOK(createCollection(opCtx, dbname, resolvedCmdObj, idIndexSpec));
            return true;
        }

        BSONObj idIndexSpec;
        uassertStatusOK(createCollection(opCtx, dbname, cmdObj, idIndexSpec));
        return true;
    }
} cmdCreate;
```

The core of CmdCreate is its run method: it first parses the command with CreateCommand::parse, then validates the options, and finally calls createCollection to create the collection.
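The consistency rules that run enforces before calling createCollection can be condensed into a pure function. This is a sketch: CreateOptions and checkCreateOptions are hypothetical names, only the presence of each field is modelled, and the messages are taken from the uassert calls above (the two idIndex messages omit the appended spec).

```cpp
#include <cassert>
#include <optional>
#include <string>

// Hypothetical, simplified view of the fields CmdCreate::run checks.
struct CreateOptions {
    bool capped = false;
    std::optional<long long> size;
    std::optional<long long> max;
    bool autoIndexId = false;
    bool hasIdIndex = false;
    std::optional<std::string> viewOn;
};

// Returns an empty string if the options are consistent, otherwise the
// error message of the first rule that fails (same order as in run()).
std::string checkCreateOptions(const CreateOptions& o) {
    if (o.capped && !o.size)
        return "the 'size' field is required when 'capped' is true";
    if ((o.size || o.max) && !o.capped)
        return "the 'capped' field needs to be true when either the 'size' or 'max' fields "
               "are present";
    if (o.hasIdIndex && o.viewOn)
        return "'idIndex' is not allowed with 'viewOn'";
    if (o.hasIdIndex && o.autoIndexId)
        return "'idIndex' is not allowed with 'autoIndexId'";
    return "";
}
```

Keeping the checks in the same order as run() means the first violated rule determines the reported error, as it does with the sequence of uasserts.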

The four-argument createCollection in mongo/db/catalog/create_collection.cpp:

```cpp
Status createCollection(OperationContext* opCtx,
                        const std::string& dbName,
                        const BSONObj& cmdObj,
                        const BSONObj& idIndex) {
    return createCollection(opCtx,
                            CommandHelpers::parseNsCollectionRequired(dbName, cmdObj),
                            cmdObj,
                            idIndex,
                            CollectionOptions::parseForCommand);
}
```

It resolves the namespace from the command and delegates to the five-argument createCollection in the same file:

```cpp
/**
 * Shared part of the implementation of the createCollection versions for replicated and regular
 * collection creation.
 */
Status createCollection(OperationContext* opCtx,
                        const NamespaceString& nss,
                        const BSONObj& cmdObj,
                        const BSONObj& idIndex,
                        CollectionOptions::ParseKind kind) {
    BSONObjIterator it(cmdObj);

    // Skip the first cmdObj element.
    BSONElement firstElt = it.next();
    invariant(firstElt.fieldNameStringData() == "create");

    Status status = userAllowedCreateNS(nss.db(), nss.coll());
    if (!status.isOK()) {
        return status;
    }

    // Build options object from remaining cmdObj elements.
    BSONObjBuilder optionsBuilder;
    while (it.more()) {
        const auto elem = it.next();
        if (!isGenericArgument(elem.fieldNameStringData()))
            optionsBuilder.append(elem);
        if (elem.fieldNameStringData() == "viewOn") {
            // Views don't have UUIDs so it should always be parsed for command.
            kind = CollectionOptions::parseForCommand;
        }
    }

    BSONObj options = optionsBuilder.obj();
    uassert(14832,
            "specify size:<n> when capped is true",
            !options["capped"].trueValue() || options["size"].isNumber());

    CollectionOptions collectionOptions;
    {
        StatusWith<CollectionOptions> statusWith = CollectionOptions::parse(options, kind);
        if (!statusWith.isOK()) {
            return statusWith.getStatus();
        }
        collectionOptions = statusWith.getValue();
    }

    if (collectionOptions.isView()) {
        return _createView(opCtx, nss, collectionOptions, idIndex);
    } else {
        return _createCollection(opCtx, nss, collectionOptions, idIndex);
    }
}
```

userAllowedCreateNS(nss.db(), nss.coll()) in mongo/db/catalog/create_collection.cpp checks that the database and collection names are valid and that they do not clash with reserved system names: only an allow-list of system.* collections (for example system.users, system.version, system.roles) may be created.

```cpp
Status userAllowedCreateNS(StringData db, StringData coll) {
    // validity checking
    if (db.size() == 0)
        return Status(ErrorCodes::InvalidNamespace, "db cannot be blank");

    if (!NamespaceString::validDBName(db, NamespaceString::DollarInDbNameBehavior::Allow))
        return Status(ErrorCodes::InvalidNamespace, "invalid db name");

    if (coll.size() == 0)
        return Status(ErrorCodes::InvalidNamespace, "collection cannot be blank");

    if (!NamespaceString::validCollectionName(coll))
        return Status(ErrorCodes::InvalidNamespace, "invalid collection name");

    if (!NamespaceString(db, coll).checkLengthForFCV())
        return Status(ErrorCodes::IncompatibleServerVersion,
                      str::stream() << "Cannot create collection with a long name " << db << "."
                                    << coll << " - upgrade to feature compatibility version "
                                    << FeatureCompatibilityVersionParser::kVersion44
                                    << " to be able to do so.");

    // check special areas
    if (db == "system")
        return Status(ErrorCodes::InvalidNamespace, "cannot use 'system' database");

    if (coll.startsWith("system.")) {
        if (coll == "system.js")
            return Status::OK();
        if (coll == "system.profile")
            return Status::OK();
        if (coll == "system.users")
            return Status::OK();
        if (coll == DurableViewCatalog::viewsCollectionName())
            return Status::OK();
        if (db == "admin") {
            if (coll == "system.version")
                return Status::OK();
            if (coll == "system.roles")
                return Status::OK();
            if (coll == "system.new_users")
                return Status::OK();
            if (coll == "system.backup_users")
                return Status::OK();
            if (coll == "system.keys")
                return Status::OK();
        }
        if (db == "config") {
            if (coll == "system.sessions")
                return Status::OK();
            if (coll == "system.indexBuilds")
                return Status::OK();
        }
        if (db == "local") {
            if (coll == "system.replset")
                return Status::OK();
            if (coll == "system.healthlog")
                return Status::OK();
        }
        return Status(ErrorCodes::InvalidNamespace,
                      str::stream() << "cannot write to '" << db << "." << coll << "'");
    }

    // ... (the rest of the function is not included in this excerpt)
}
```

Back in createCollection, CollectionOptions::parse(options, kind) parses the collection options.
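The "special area" rules of userAllowedCreateNS can be restated compactly. This sketch uses a hypothetical function name, skips the character and length validation, and relies on DurableViewCatalog::viewsCollectionName() being "system.views" (the collection in which view definitions are stored).

```cpp
#include <cassert>
#include <set>
#include <string>

// Simplified sketch of the system.* allow-list in userAllowedCreateNS().
bool allowedCreateNS(const std::string& db, const std::string& coll) {
    if (db.empty() || coll.empty()) return false;
    if (db == "system") return false;                 // reserved database
    if (coll.rfind("system.", 0) != 0) return true;   // not a system.* name

    static const std::set<std::string> anyDb = {
        "system.js", "system.profile", "system.users", "system.views"};
    static const std::set<std::string> adminOnly = {
        "system.version", "system.roles", "system.new_users",
        "system.backup_users", "system.keys"};
    static const std::set<std::string> configOnly = {"system.sessions", "system.indexBuilds"};
    static const std::set<std::string> localOnly = {"system.replset", "system.healthlog"};

    if (anyDb.count(coll)) return true;
    if (db == "admin" && adminOnly.count(coll)) return true;
    if (db == "config" && configOnly.count(coll)) return true;
    if (db == "local" && localOnly.count(coll)) return true;
    return false;  // corresponds to "cannot write to '<db>.<coll>'"
}
```

A table of allowed names makes it easy to see that, for example, system.roles is only creatable in admin.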

_createView creates a view (its code is not reproduced here), while _createCollection creates a regular collection:

```cpp
Status _createCollection(OperationContext* opCtx,
                         const NamespaceString& nss,
                         const CollectionOptions& collectionOptions,
                         const BSONObj& idIndex) {
    return writeConflictRetry(opCtx, "create", nss.ns(), [&] {
        AutoGetOrCreateDb autoDb(opCtx, nss.db(), MODE_IX);
        Lock::CollectionLock collLock(opCtx, nss, MODE_X);

        AutoStatsTracker statsTracker(opCtx,
                                      nss,
                                      Top::LockType::NotLocked,
                                      AutoStatsTracker::LogMode::kUpdateTopAndCurop,
                                      autoDb.getDb()->getProfilingLevel());

        if (opCtx->writesAreReplicated() &&
            !repl::ReplicationCoordinator::get(opCtx)->canAcceptWritesFor(opCtx, nss)) {
            return Status(ErrorCodes::NotMaster,
                          str::stream() << "Not primary while creating collection " << nss);
        }

        WriteUnitOfWork wunit(opCtx);
        Status status =
            autoDb.getDb()->userCreateNS(opCtx, nss, collectionOptions, true, idIndex);
        if (!status.isOK()) {
            return status;
        }
        wunit.commit();

        return Status::OK();
    });
}
```

The whole operation is wrapped in the writeConflictRetry template, so a write conflict causes it to be retried automatically.
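The retry behaviour of writeConflictRetry can be sketched as a small template. WriteConflict and retryOnWriteConflict are hypothetical stand-ins; the attempt cap exists only so the demo terminates, and the real template also backs off and abandons the current storage snapshot before each retry.

```cpp
#include <cassert>
#include <stdexcept>

// Stand-in for MongoDB's WriteConflictException (hypothetical type).
struct WriteConflict : std::runtime_error {
    WriteConflict() : std::runtime_error("write conflict") {}
};

// Run f(); if it throws WriteConflict, run it again, up to maxAttempts times.
template <typename F>
auto retryOnWriteConflict(F&& f, int maxAttempts) -> decltype(f()) {
    for (int attempt = 1;; ++attempt) {
        try {
            return f();
        } catch (const WriteConflict&) {
            if (attempt >= maxAttempts) throw;  // give up and propagate
            // A real implementation would reset its snapshot/back off here.
        }
    }
}
```

Because the lambda passed to writeConflictRetry re-acquires the locks and opens a fresh WriteUnitOfWork each time, every attempt starts from a clean state.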

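AutoGetOrCreateDb autoDb obtains the database, creating it if it does not exist yet and returning the existing one otherwise.

Lock::CollectionLock takes an exclusive (MODE_X) lock on the collection.

The database object then calls userCreateNS in mongo/db/catalog/database_impl.cpp, which performs the actual creation: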

```cpp
Status DatabaseImpl::userCreateNS(OperationContext* opCtx,
                                  const NamespaceString& nss,
                                  CollectionOptions collectionOptions,
                                  bool createDefaultIndexes,
                                  const BSONObj& idIndex) const {
    // Log the collection creation.
    LOG(1) << "create collection " << nss << ' ' << collectionOptions.toBSON();

    // Validate the namespace.
    if (!NamespaceString::validCollectionComponent(nss.ns()))
        return Status(ErrorCodes::InvalidNamespace, str::stream() << "invalid ns: " << nss);

    // Fail if a collection with this name already exists.
    Collection* collection = CollectionCatalog::get(opCtx).lookupCollectionByNamespace(nss);
    if (collection)
        return Status(ErrorCodes::NamespaceExists,
                      str::stream() << "a collection '" << nss << "' already exists");

    // Fail if a view with this name already exists.
    if (ViewCatalog::get(this)->lookup(opCtx, nss.ns()))
        return Status(ErrorCodes::NamespaceExists,
                      str::stream() << "a view '" << nss << "' already exists");

    // Resolve the collation.
    std::unique_ptr<CollatorInterface> collator;
    if (!collectionOptions.collation.isEmpty()) {
        auto collatorWithStatus = CollatorFactoryInterface::get(opCtx->getServiceContext())
                                      ->makeFromBSON(collectionOptions.collation);
        if (!collatorWithStatus.isOK()) {
            return collatorWithStatus.getStatus();
        }
        collator = std::move(collatorWithStatus.getValue());
        collectionOptions.collation = collator ? collator->getSpec().toBSON() : BSONObj();
    }

    // Validate the document validator expression.
    if (!collectionOptions.validator.isEmpty()) {
        boost::intrusive_ptr<ExpressionContext> expCtx(
            new ExpressionContext(opCtx, collator.get()));

        const auto currentFCV = serverGlobalParams.featureCompatibility.getVersion();
        if (serverGlobalParams.validateFeaturesAsMaster.load() &&
            currentFCV != ServerGlobalParams::FeatureCompatibility::Version::kFullyUpgradedTo44) {
            expCtx->maxFeatureCompatibilityVersion = currentFCV;
        }

        expCtx->isParsingCollectionValidator = true;

        auto statusWithMatcher =
            MatchExpressionParser::parse(collectionOptions.validator, std::move(expCtx));
        if (!statusWithMatcher.isOK()) {
            return statusWithMatcher.getStatus();
        }
    }

    // Validate the collection's storage-engine options.
    Status status = validateStorageOptions(
        opCtx->getServiceContext(),
        collectionOptions.storageEngine,
        [](const auto& x, const auto& y) { return x->validateCollectionStorageOptions(y); });
    if (!status.isOK())
        return status;

    // Validate the default storage-engine options for indexes.
    if (auto indexOptions = collectionOptions.indexOptionDefaults["storageEngine"]) {
        status = validateStorageOptions(
            opCtx->getServiceContext(), indexOptions.Obj(), [](const auto& x, const auto& y) {
                return x->validateIndexStorageOptions(y);
            });
        if (!status.isOK()) {
            return status;
        }
    }

    // Create either a view or a collection.
    if (collectionOptions.isView()) {
        uassertStatusOK(createView(opCtx, nss, collectionOptions));
    } else {
        invariant(createCollection(opCtx, nss, collectionOptions, createDefaultIndexes, idIndex),
                  str::stream() << "Collection creation failed after validating options: " << nss
                                << ". Options: " << collectionOptions.toBSON());
    }

    return Status::OK();
}
```

CollectionCatalog::get(opCtx).lookupCollectionByNamespace(nss) looks up the in-memory Collection object by namespace to decide whether the collection already exists.
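The order of the existence checks in userCreateNS (collection first, then view, then option validation and creation) can be mirrored with a toy catalog. ToyCatalog is hypothetical; it keeps only namespace strings and leaves out the collation, validator and storage-option checks.

```cpp
#include <cassert>
#include <set>
#include <string>

// Hypothetical in-memory catalog of existing collections and views ("db.coll").
struct ToyCatalog {
    std::set<std::string> collections;
    std::set<std::string> views;

    // Returns "OK" or a NamespaceExists-style message, checking collections
    // before views as userCreateNS() does.
    std::string userCreateNS(const std::string& ns, bool isView) {
        if (collections.count(ns)) return "a collection '" + ns + "' already exists";
        if (views.count(ns)) return "a view '" + ns + "' already exists";
        // (collation, validator and storage-engine option checks would go here)
        (isView ? views : collections).insert(ns);
        return "OK";
    }
};
```

Collections and views share one namespace, which is why both sets are consulted regardless of what is being created.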

createCollection in mongo/db/catalog/database_impl.cpp then continues the creation:

```cpp
Collection* DatabaseImpl::createCollection(OperationContext* opCtx,
                                           const NamespaceString& nss,
                                           const CollectionOptions& options,
                                           bool createIdIndex,
                                           const BSONObj& idIndex) const {
    // Preconditions.
    invariant(!options.isView());
    invariant(opCtx->lockState()->isDbLockedForMode(name(), MODE_IX));

    // Check whether implicit collection creation is allowed.
    uassert(CannotImplicitlyCreateCollectionInfo(nss),
            "request doesn't allow collection to be created implicitly",
            serverGlobalParams.clusterRole != ClusterRole::ShardServer ||
                OperationShardingState::get(opCtx).allowImplicitCollectionCreation() ||
                options.temp);

    // Check whether this node can accept writes.
    auto coordinator = repl::ReplicationCoordinator::get(opCtx);
    bool canAcceptWrites =
        (coordinator->getReplicationMode() != repl::ReplicationCoordinator::modeReplSet) ||
        coordinator->canAcceptWritesForDatabase(opCtx, nss.db()) || nss.isSystemDotProfile();

    // Assign the collection UUID.
    CollectionOptions optionsWithUUID = options;
    bool generatedUUID = false;
    if (!optionsWithUUID.uuid) {
        if (!canAcceptWrites) {
            uasserted(ErrorCodes::InvalidOptions,
                      "Attempted to create a new collection without a UUID");
        } else {
            optionsWithUUID.uuid.emplace(CollectionUUID::gen());
            generatedUUID = true;
        }
    }

    // Reserve an oplog slot to keep replication consistent.
    OplogSlot createOplogSlot;
    if (canAcceptWrites && supportsDocLocking() && !coordinator->isOplogDisabledFor(opCtx, nss)) {
        createOplogSlot = repl::getNextOpTime(opCtx);
    }

    // Fail point used in tests.
    if (MONGO_unlikely(hangAndFailAfterCreateCollectionReservesOpTime.shouldFail())) {
        hangAndFailAfterCreateCollectionReservesOpTime.pauseWhileSet(opCtx);
        uasserted(51267, "hangAndFailAfterCreateCollectionReservesOpTime fail point enabled");
    }

    // Check that the collection can be created.
    _checkCanCreateCollection(opCtx, nss, optionsWithUUID);
    audit::logCreateCollection(&cc(), nss.ns());

    // Log the creation.
    log() << "createCollection: " << nss << " with " << (generatedUUID ? "generated" : "provided")
          << " UUID: " << optionsWithUUID.uuid.get() << " and options: " << options.toBSON();

    // Create the underlying storage.
    auto storageEngine = opCtx->getServiceContext()->getStorageEngine();
    std::pair<RecordId, std::unique_ptr<RecordStore>> catalogIdRecordStorePair =
        uassertStatusOK(storageEngine->getCatalog()->createCollection(
            opCtx, nss, optionsWithUUID, true /*allocateDefaultSpace*/));

    // Build the Collection object.
    auto catalogId = catalogIdRecordStorePair.first;
    std::unique_ptr<Collection> ownedCollection =
        Collection::Factory::get(opCtx)->make(opCtx,
                                              nss,
                                              catalogId,
                                              optionsWithUUID.uuid.get(),
                                              std::move(catalogIdRecordStorePair.second));
    auto collection = ownedCollection.get();
    ownedCollection->init(opCtx);

    // Commit callback: make the collection visible from the commit timestamp on.
    opCtx->recoveryUnit()->onCommit([collection](auto commitTime) {
        if (commitTime)
            collection->setMinimumVisibleSnapshot(commitTime.get());
    });

    // Register the collection in the catalog (deregistered again on rollback).
    auto& catalog = CollectionCatalog::get(opCtx);
    auto uuid = ownedCollection->uuid();
    catalog.registerCollection(uuid, std::move(ownedCollection));
    opCtx->recoveryUnit()->onRollback([uuid, &catalog] { catalog.deregisterCollection(uuid); });

    // Create the _id index.
    BSONObj fullIdIndexSpec;
    if (createIdIndex && collection->requiresIdIndex()) {
        if (optionsWithUUID.autoIndexId == CollectionOptions::YES ||
            optionsWithUUID.autoIndexId == CollectionOptions::DEFAULT) {
            IndexCatalog* ic = collection->getIndexCatalog();
            fullIdIndexSpec = uassertStatusOK(ic->createIndexOnEmptyCollection(
                opCtx, !idIndex.isEmpty() ? idIndex : ic->getDefaultIdIndexSpec()));
        } else {
            uassert(50001,
                    "autoIndexId:false is not allowed for replicated collections",
                    !nss.isReplicated());
        }
    }

    // Fail point used in tests.
    hangBeforeLoggingCreateCollection.pauseWhileSet();

    // Fire the OpObserver's create-collection event.
    opCtx->getServiceContext()->getOpObserver()->onCreateCollection(
        opCtx, collection, nss, optionsWithUUID, fullIdIndexSpec, createOplogSlot);

    // Create the extra indexes required by system collections.
    if (canAcceptWrites && createIdIndex && nss.isSystem()) {
        createSystemIndexes(opCtx, collection);
    }

    return collection;
}
```

auto storageEngine = opCtx->getServiceContext()->getStorageEngine(); obtains the storage engine.

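storageEngine->getCatalog()->createCollection continues the creation in the storage layer. The storage layer is the one that deals with the underlying storage engine: MongoDB is designed to support multiple storage engines, each of which has to implement the storage interfaces (in principle one could even build a purely in-memory database this way), and WiredTiger is the engine MongoDB uses by default.

ownedCollection->init(opCtx) initializes the collection object.

catalog.registerCollection(uuid, std::move(ownedCollection)) registers the collection in the CollectionCatalog.

ic->createIndexOnEmptyCollection(opCtx, !idIndex.isEmpty() ? idIndex : ic->getDefaultIdIndexSpec()) creates the default _id index.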
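The registerCollection/onRollback pairing above follows a common pattern: apply an in-memory change immediately and queue its undo with the unit of work. A minimal sketch, assuming a hypothetical ToyRecoveryUnit that stands in for MongoDB's RecoveryUnit (the real one is tied to storage-engine transactions and passes a commit timestamp to onCommit callbacks).

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical mini recovery unit: callbacks run only on commit or only on rollback.
class ToyRecoveryUnit {
public:
    void onCommit(std::function<void()> f) { commitHooks_.push_back(std::move(f)); }
    void onRollback(std::function<void()> f) { rollbackHooks_.push_back(std::move(f)); }

    void commit() {
        for (auto& f : commitHooks_) f();
        clear();
    }
    void abort() {
        // Undo in reverse registration order.
        for (auto it = rollbackHooks_.rbegin(); it != rollbackHooks_.rend(); ++it) (*it)();
        clear();
    }

private:
    void clear() {
        commitHooks_.clear();
        rollbackHooks_.clear();
    }
    std::vector<std::function<void()>> commitHooks_, rollbackHooks_;
};

// Registers a collection (uuid -> ns) right away and schedules its removal on rollback.
void registerCollection(std::map<std::string, std::string>& catalog, ToyRecoveryUnit& ru,
                        const std::string& uuid, const std::string& ns) {
    catalog[uuid] = ns;
    ru.onRollback([&catalog, uuid] { catalog.erase(uuid); });
}
```

Registering eagerly lets later steps in the same unit of work (such as building the _id index) find the collection, while the rollback hook guarantees a failed create leaves no trace in the catalog.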

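The catalog layer only decides what creating a collection involves; the storage layer decides how the collection is actually created. Next we look at how the storage layer talks to WiredTiger to create the collection.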
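The "what versus how" split can be illustrated with an abstract engine interface and an in-memory implementation. Everything here (ToyKVEngine, InMemoryEngine, createCollectionStorage) is hypothetical and far smaller than MongoDB's real KVEngine interface; it only shows that catalog-side code can stay engine-agnostic.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>

// Hypothetical, pared-down engine interface.
class ToyKVEngine {
public:
    virtual ~ToyKVEngine() = default;
    // Creates the physical container for `ident`; returns false if it already exists.
    virtual bool createRecordStore(const std::string& ident) = 0;
    virtual bool hasIdent(const std::string& ident) const = 0;
};

// Purely in-memory engine: a "record store" is a map RecordId -> bytes.
class InMemoryEngine : public ToyKVEngine {
public:
    bool createRecordStore(const std::string& ident) override {
        return stores_.emplace(ident, std::map<long long, std::string>{}).second;
    }
    bool hasIdent(const std::string& ident) const override {
        return stores_.count(ident) != 0;
    }

private:
    std::map<std::string, std::map<long long, std::string>> stores_;
};

// Catalog-side code depends only on the interface.
bool createCollectionStorage(ToyKVEngine& engine, const std::string& ident) {
    return engine.createRecordStore(ident);
}
```

A WiredTiger-backed implementation would instead turn the ident into a table URI and call WT_SESSION::create, as shown further down.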

DurableCatalogImpl::createCollection in mongo/db/storage/durable_catalog_impl.cpp:

```cpp
StatusWith<std::pair<RecordId, std::unique_ptr<RecordStore>>> DurableCatalogImpl::createCollection(
    OperationContext* opCtx,
    const NamespaceString& nss,
    const CollectionOptions& options,
    bool allocateDefaultSpace) {
    // Preconditions: the database is held in intent-exclusive (MODE_IX) mode
    // and the collection name is not empty.
    invariant(opCtx->lockState()->isDbLockedForMode(nss.db(), MODE_IX));
    invariant(nss.coll().size() > 0);

    // Fast existence check against the in-memory CollectionCatalog.
    if (CollectionCatalog::get(opCtx).lookupCollectionByNamespace(nss)) {
        return Status(ErrorCodes::NamespaceExists, "collection already exists " + nss.ns());
    }

    // Allocate a key prefix (KVPrefix) that isolates this collection's key space in the KV store.
    KVPrefix prefix = KVPrefix::getNextPrefix(nss);

    // Persist the collection metadata in the catalog.
    StatusWith<Entry> swEntry = _addEntry(opCtx, nss, options, prefix);
    if (!swEntry.isOK())
        return swEntry.getStatus();
    Entry& entry = swEntry.getValue();  // Entry holds catalogId, ident and nss

    // Ask the storage engine to create the data container (RecordStore).
    Status status = _engine->getEngine()->createGroupedRecordStore(
        opCtx, nss.ns(), entry.ident, options, prefix);
    if (!status.isOK())
        return status;

    // Mark the collation feature as in use (storage-engine feature tracking).
    if (!options.collation.isEmpty()) {
        const auto feature = DurableCatalogImpl::FeatureTracker::NonRepairableFeature::kCollation;
        if (!getFeatureTracker()->isNonRepairableFeatureInUse(opCtx, feature)) {
            getFeatureTracker()->markNonRepairableFeatureAsInUse(opCtx, feature);
        }
    }

    // Rollback hook: if the transaction rolls back, drop the storage that was just created.
    opCtx->recoveryUnit()->onRollback(
        [opCtx, catalog = this, nss, ident = entry.ident, uuid = options.uuid.get()]() {
            catalog->_engine->getEngine()->dropIdent(opCtx, ident).ignore();  // ignore drop errors
        });

    // Open the RecordStore that was just created.
    auto rs = _engine->getEngine()->getGroupedRecordStore(
        opCtx, nss.ns(), entry.ident, options, prefix);
    invariant(rs);

    // Return the catalog id (RecordId) together with the RecordStore.
    return std::make_pair(entry.catalogId, std::move(rs));
}
```

StatusWith<Entry> swEntry = _addEntry(opCtx, nss, options, prefix); persists the collection's metadata in a system table; this stored metadata is what lets MongoDB list all collections, for example with show collections.

Status status = _engine->getEngine()->createGroupedRecordStore(opCtx, nss.ns(), entry.ident, options, prefix); then asks the storage engine to create the data container (the RecordStore).

_addEntry(opCtx, nss, options, prefix) in mongo/db/storage/durable_catalog_impl.cpp:

```cpp
StatusWith<DurableCatalog::Entry> DurableCatalogImpl::_addEntry(OperationContext* opCtx,
                                                                NamespaceString nss,
                                                                const CollectionOptions& options,
                                                                KVPrefix prefix) {
    // Precondition: the database is held in intent-exclusive (MODE_IX) mode.
    invariant(opCtx->lockState()->isDbLockedForMode(nss.db(), MODE_IX));

    // Generate the unique storage identifier (ident), e.g. "collection-0--9135487495984222338".
    const string ident = _newUniqueIdent(nss, "collection");

    // Build the metadata BSON document.
    BSONObj obj;
    {
        BSONObjBuilder b;
        b.append("ns", nss.ns());  // namespace, e.g. "db2.conca"
        b.append("ident", ident);  // unique identifier inside the storage engine
        BSONCollectionCatalogEntry::MetaData md;
        md.ns = nss.ns();
        md.options = options;
        md.prefix = prefix;           // key prefix (isolates the key space in the KV store)
        b.append("md", md.toBSON());  // serialized metadata
        obj = b.obj();
    }

    // Write the metadata into the catalog's own RecordStore.
    StatusWith<RecordId> res = _rs->insertRecord(opCtx,
                                                 obj.objdata(),
                                                 obj.objsize(),
                                                 Timestamp());  // optional timestamp; null here
    if (!res.isOK())
        return res.getStatus();  // the write failed

    // Maintain the in-memory catalogId -> entry map (guarded by a latch).
    stdx::lock_guard<Latch> lk(_catalogIdToEntryMapLock);
    RecordId catalogId = res.getValue();  // RecordId of the metadata record
    // The catalog id must not be in use yet.
    invariant(_catalogIdToEntryMap.find(catalogId) == _catalogIdToEntryMap.end());
    _catalogIdToEntryMap[catalogId] = {catalogId, ident, nss};

    // Register a change so that a rollback removes the entry from the in-memory map.
    opCtx->recoveryUnit()->registerChange(std::make_unique<AddIdentChange>(this, catalogId));

    LOG(1) << "stored meta data for " << nss.ns() << " @ " << catalogId;

    // Return the new Entry.
    return {{catalogId, ident, nss}};
}
```

DurableCatalogImpl::_addEntry is the key helper of createCollection. Its main steps are:

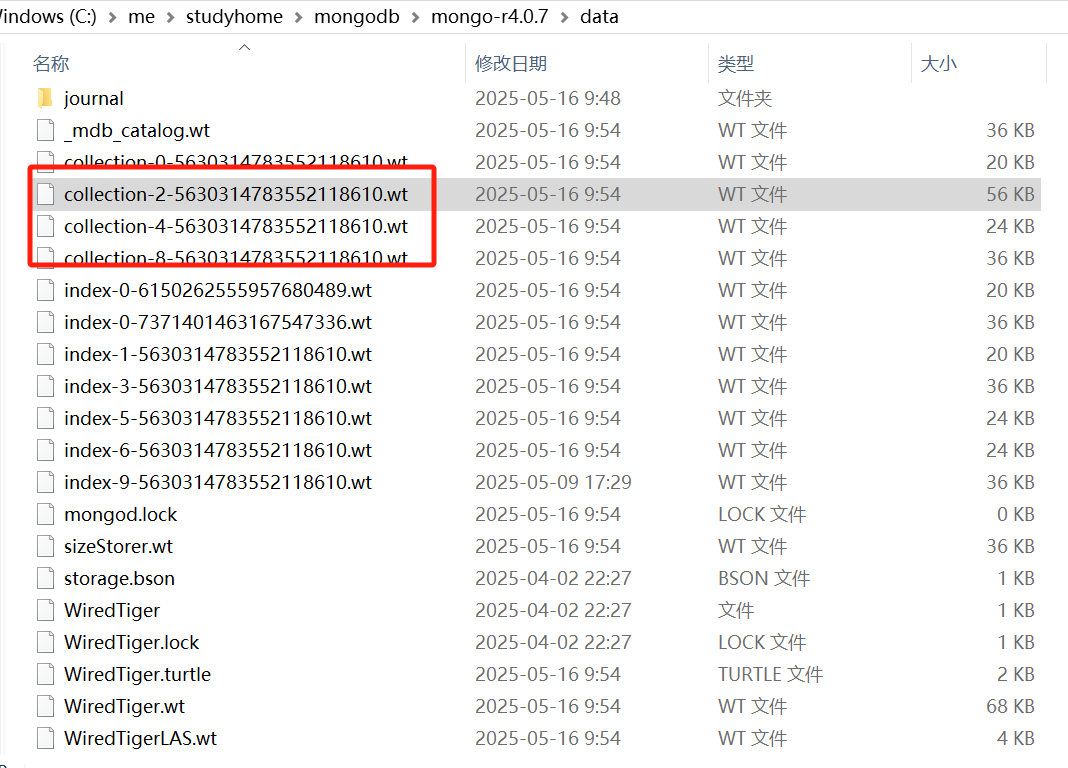

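Generate a unique storage identifier (ident): the collection is given a unique name inside the storage engine (for WiredTiger, the table name, such as collection-0--9135487495984222338; see the screenshot).

Build the metadata document: the collection's information (namespace, options, key prefix, and so on) is serialized as BSON, e.g. { ns: "db.conca", ident: "collection-0--8262702921578327518", md: {...} }.

Write it to storage: _rs->insertRecord inserts the metadata document into the catalog's RecordStore, i.e. into the system table whose URI is table:_mdb_catalog.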

```cpp
/**
 * A thin wrapper around insertRecords() to simplify handling of single document inserts.
 */
StatusWith<RecordId> insertRecord(OperationContext* opCtx,
                                  const char* data,
                                  int len,
                                  Timestamp timestamp) {
    std::vector<Record> inOutRecords{Record{RecordId(), RecordData(data, len)}};
    Status status = insertRecords(opCtx, &inOutRecords, std::vector<Timestamp>{timestamp});
    if (!status.isOK())
        return status;
    return inOutRecords.front().id;
}
```

_insertRecords in mongo/db/storage/wiredtiger/wiredtiger_record_store.cpp writes the collection's metadata document { ns: "db2.conca", ident: "collection-0--9135487495984222338", md: {...} } (stored as BSON) into the system table _mdb_catalog, which holds the metadata of all collections and indexes.

```cpp
Status WiredTigerRecordStore::insertRecords(OperationContext* opCtx,
                                            std::vector<Record>* records,
                                            const std::vector<Timestamp>& timestamps) {
    return _insertRecords(opCtx, records->data(), timestamps.data(), records->size());
}

Status WiredTigerRecordStore::_insertRecords(OperationContext* opCtx,
                                             Record* records,
                                             const Timestamp* timestamps,
                                             size_t nRecords) {
    dassert(opCtx->lockState()->isWriteLocked());

    // We are kind of cheating on capped collections since we write all of them at once ....
    // Simplest way out would be to just block vector writes for everything except oplog ?
    int64_t totalLength = 0;
    for (size_t i = 0; i < nRecords; i++)
        totalLength += records[i].data.size();

    // caller will retry one element at a time
    if (_isCapped && totalLength > _cappedMaxSize)
        return Status(ErrorCodes::BadValue, "object to insert exceeds cappedMaxSize");

    LOG(1) << "conca WiredTigerRecordStore::insertRecords _uri:" << _uri;
    LOG(1) << "conca WiredTigerRecordStore::insertRecords _tableId:" << _tableId;

    WiredTigerCursor curwrap(_uri, _tableId, true, opCtx);
    curwrap.assertInActiveTxn();
    WT_CURSOR* c = curwrap.get();
    invariant(c);

    RecordId highestId = RecordId();
    dassert(nRecords != 0);
    for (size_t i = 0; i < nRecords; i++) {
        auto& record = records[i];
        if (_isOplog) {
            StatusWith<RecordId> status =
                oploghack::extractKey(record.data.data(), record.data.size());
            if (!status.isOK())
                return status.getStatus();
            record.id = status.getValue();
        } else {
            record.id = _nextId(opCtx);
        }
        dassert(record.id > highestId);
        highestId = record.id;
    }

    for (size_t i = 0; i < nRecords; i++) {
        auto& record = records[i];
        Timestamp ts;
        if (timestamps[i].isNull() && _isOplog) {
            // If the timestamp is 0, that probably means someone inserted a document directly
            // into the oplog. In this case, use the RecordId as the timestamp, since they are
            // one and the same. Setting this transaction to be unordered will trigger a journal
            // flush. Because these are direct writes into the oplog, the machinery to trigger a
            // journal flush is bypassed. A followup oplog read will require a fresh visibility
            // value to make progress.
            ts = Timestamp(record.id.repr());
            opCtx->recoveryUnit()->setOrderedCommit(false);
        } else {
            ts = timestamps[i];
        }
        if (!ts.isNull()) {
            LOG(4) << "inserting record with timestamp " << ts;
            fassert(39001, opCtx->recoveryUnit()->setTimestamp(ts));
        }
        setKey(c, record.id);
        WiredTigerItem value(record.data.data(), record.data.size());
        c->set_value(c, value.Get());
        int ret = WT_OP_CHECK(c->insert(c));
        if (ret)
            return wtRCToStatus(ret, "WiredTigerRecordStore::insertRecord");
    }

    _changeNumRecords(opCtx, nRecords);
    _increaseDataSize(opCtx, totalLength);

    if (_oplogStones) {
        _oplogStones->updateCurrentStoneAfterInsertOnCommit(
            opCtx, totalLength, highestId, nRecords);
    } else {
        _cappedDeleteAsNeeded(opCtx, highestId);
    }

    return Status::OK();
}
```

Maintain the in-memory map: a mapping from catalog id (RecordId) to the collection's metadata is kept in memory to speed up later lookups.

Register the transactional change: this lets the metadata write take part in rollback, keeping the operation atomic.
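The _addEntry steps can be modelled end to end in a few lines. This is a simplified sketch with hypothetical names: the ident pattern kind-counter-random is inferred from the log lines below (collection-0--9135487495984222338 and index-1--9135487495984222338 share a counter and a negative random suffix), and a std::map stands in for the _mdb_catalog record store.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>

struct CatalogEntry {
    long long catalogId;
    std::string ident;
    std::string ns;
};

// Hypothetical model of DurableCatalogImpl::_addEntry().
class ToyDurableCatalog {
public:
    explicit ToyDurableCatalog(std::int64_t rand) : rand_(std::to_string(rand)) {}

    // <kind>-<counter>-<random>; a negative random value yields the "--".
    std::string newUniqueIdent(const std::string& kind) {
        return kind + "-" + std::to_string(next_++) + "-" + rand_;
    }

    CatalogEntry addEntry(const std::string& ns) {
        const std::string ident = newUniqueIdent("collection");
        const long long catalogId = ++lastRecordId_;  // like _nextId() in the record store
        // Stand-in for the BSON { ns, ident, md } document written to _mdb_catalog.
        records_[catalogId] = "{ ns: \"" + ns + "\", ident: \"" + ident + "\" }";
        assert(entries_.count(catalogId) == 0);  // mirrors the invariant in _addEntry
        entries_[catalogId] = {catalogId, ident, ns};
        return entries_[catalogId];
    }

    // Rollback: the real code undoes the record via the storage transaction and
    // the map entry via AddIdentChange; here both are erased directly.
    void rollbackEntry(long long catalogId) {
        records_.erase(catalogId);
        entries_.erase(catalogId);
    }

    const std::map<long long, std::string>& records() const { return records_; }

private:
    std::string rand_;
    long long next_ = 0;
    long long lastRecordId_ = 0;
    std::map<long long, std::string> records_;   // the catalog's record store
    std::map<long long, CatalogEntry> entries_;  // catalogId -> entry map
};
```

In the logs below the new entry gets RecordId(6) rather than 1 simply because _mdb_catalog already held five records.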

In mongo/db/storage/wiredtiger/wiredtiger_kv_engine.h, createRecordStore forwards to createGroupedRecordStore without a key prefix:

```cpp
Status createRecordStore(OperationContext* opCtx,
                         StringData ns,
                         StringData ident,
                         const CollectionOptions& options) override {
    return createGroupedRecordStore(opCtx, ns, ident, options, KVPrefix::kNotPrefixed);
}
```

createGroupedRecordStore in mongo/db/storage/wiredtiger/wiredtiger_kv_engine.cpp:

```cpp
Status WiredTigerKVEngine::createGroupedRecordStore(OperationContext* opCtx,
                                                    StringData ns,
                                                    StringData ident,
                                                    const CollectionOptions& options,
                                                    KVPrefix prefix) {
    _ensureIdentPath(ident);
    WiredTigerSession session(_conn);

    const bool prefixed = prefix.isPrefixed();
    StatusWith<std::string> result = WiredTigerRecordStore::generateCreateString(
        _canonicalName, ns, options, _rsOptions, prefixed);
    if (!result.isOK()) {
        return result.getStatus();
    }
    std::string config = result.getValue();

    string uri = _uri(ident);
    WT_SESSION* s = session.getSession();
    LOG(2) << "WiredTigerKVEngine::createRecordStore ns: " << ns << " uri: " << uri
           << " config: " << config;
    return wtRCToStatus(s->create(s, uri.c_str(), config.c_str()));
}
```

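_ensureIdentPath(ident) makes sure the directories implied by the ident exist under the dbpath. With dbpath /data, the ident collection-0--9135487495984222338 maps to the file /data/collection-0--9135487495984222338.wt; since this ident contains no '/', nothing needs to be created beyond /data itself, which must already exist. Subdirectories are only created when the ident contains '/', as happens with options such as --directoryperdb.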
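A sketch of the idea behind _ensureIdentPath using std::filesystem (C++17). ensureIdentPath and identFile are hypothetical helpers; the example ident db2/collection/0--9135487495984222338 assumes both a per-database directory and a separate collection directory are in effect, which is how a '/' ends up in an ident.

```cpp
#include <cassert>
#include <filesystem>
#include <string>

namespace fs = std::filesystem;

// Every '/'-separated prefix of the ident is a subdirectory of dbpath that
// must exist before the table file can be created. Flat idents create nothing.
void ensureIdentPath(const fs::path& dbpath, const std::string& ident) {
    std::string::size_type start = 0, idx;
    while ((idx = ident.find('/', start)) != std::string::npos) {
        fs::path subdir = dbpath / ident.substr(0, idx);
        if (!fs::exists(subdir)) fs::create_directory(subdir);
        start = idx + 1;
    }
}

// The file WiredTiger uses for the table backed by `ident`.
fs::path identFile(const fs::path& dbpath, const std::string& ident) {
    return dbpath / (ident + ".wt");
}
```

Creating one directory level at a time works because each prefix's parent was created in the previous iteration.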

```cpp
string uri = _uri(ident);  // becomes "table:collection-0--9135487495984222338"
WT_SESSION* s = session.getSession();
s->create(s, uri.c_str(), config.c_str());
```

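URI format: _uri turns the ident into a WiredTiger resource identifier such as table:collection-0--9135487495984222338.

WiredTiger API call: WT_SESSION::create creates the underlying table, taking the URI and the configuration string as arguments.

Error handling: wtRCToStatus converts the WiredTiger return code into a MongoDB Status object.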
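The config argument handed to WT_SESSION::create is a WiredTiger configuration string: comma-separated key=value pairs, with nested values wrapped in parentheses. A small parser (a hypothetical helper with no quoting or escaping support) makes the string in the log below easier to read; in it, key_format=q means 64-bit signed integer keys (the RecordId) and value_format=u means raw byte values (the BSON document).

```cpp
#include <cassert>
#include <map>
#include <string>

// Splits a WiredTiger-style configuration string into top-level key=value
// pairs. Commas inside parentheses belong to nested values, and empty items
// (such as the ",," in the logged string) are skipped.
std::map<std::string, std::string> parseWtConfig(const std::string& config) {
    std::map<std::string, std::string> out;
    std::string item;
    int depth = 0;
    auto flush = [&] {
        if (!item.empty()) {
            auto eq = item.find('=');
            if (eq == std::string::npos)
                out[item] = "";
            else
                out[item.substr(0, eq)] = item.substr(eq + 1);
        }
        item.clear();
    };
    for (char ch : config) {
        if (ch == '(') ++depth;
        if (ch == ')') --depth;
        if (ch == ',' && depth == 0)
            flush();
        else
            item += ch;
    }
    flush();
    return out;
}
```

Nested values such as app_metadata=(formatVersion=1) are kept as a single raw string, which is enough for inspection.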

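Running db.createCollection('conca', {}) in the mongo shell (database db2) creates the conca collection; with log verbosity raised so that the D1 to D3 debug lines appear, mongod logs the following: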

2025-05-21T11:59:39.452+0800 D1 COMMAND [conn1] conca findCommand create|

2025-05-21T11:59:39.452+0800 D1 COMMAND [conn1] run command db2.$cmd { create: "conca", lsid: { id: UUID("e50ec2ba-3fe4-4d2f-990c-291ce2a25bdd") }, $db: "db2" }

2025-05-21T11:59:39.453+0800 D1 COMMAND [conn1] conca runCommandImpl

2025-05-21T11:59:39.453+0800 D1 COMMAND [conn1] conca invocation->run 1

2025-05-21T11:59:39.455+0800 D1 - [conn1] reloading view catalog for database db2

2025-05-21T11:59:39.455+0800 D1 STORAGE [conn1] create collection db2.conca {}

2025-05-21T11:59:39.456+0800 I STORAGE [conn1] createCollection: db2.conca with generated UUID: 4ce9d174-a254-442b-9d24-90fa114fa669 and options: {}

2025-05-21T11:59:39.456+0800 D1 STORAGE [conn1] conca _addEntry ident:collection-0--9135487495984222338

2025-05-21T11:59:39.459+0800 D3 STORAGE [conn1] WT begin_transaction for snapshot id 1678

2025-05-21T11:59:39.460+0800 D2 STORAGE [conn1] WiredTigerSizeStorer::store Marking table:_mdb_catalog dirty, numRecords: 6, dataSize: 2801, use_count: 3

2025-05-21T11:59:39.460+0800 D1 STORAGE [conn1] conca _addEntry res.getValue():RecordId(6)

2025-05-21T11:59:39.460+0800 D1 STORAGE [conn1] stored meta data for db2.conca @ RecordId(6)

2025-05-21T11:59:39.461+0800 D2 STORAGE [conn1] WiredTigerKVEngine::createRecordStore ns: db2.conca uri: table:collection-0--9135487495984222338 config: type=file,memory_page_max=10m,split_pct=90,leaf_value_max=64MB,checksum=on,block_compressor=snappy,,key_format=q,value_format=u,app_metadata=(formatVersion=1),log=(enabled=true)

2025-05-21T11:59:39.466+0800 D2 STORAGE [conn1] WiredTigerUtil::checkApplicationMetadataFormatVersion uri: table:collection-0--9135487495984222338 ok range 1 -> 1 current: 1

2025-05-21T11:59:39.467+0800 D3 STORAGE [conn1] looking up metadata for: RecordId(6)

2025-05-21T11:59:39.467+0800 D3 STORAGE [conn1] fetched CCE metadata: { ns: "db2.conca", ident: "collection-0--9135487495984222338", md: { ns: "db2.conca", options: { uuid: UUID("4ce9d174-a254-442b-9d24-90fa114fa669") }, indexes: [], prefix: -1 } }

2025-05-21T11:59:39.468+0800 D3 STORAGE [conn1] returning metadata: md: { ns: "db2.conca", options: { uuid: UUID("4ce9d174-a254-442b-9d24-90fa114fa669") }, indexes: [], prefix: -1 }

2025-05-21T11:59:39.468+0800 D3 STORAGE [conn1] looking up metadata for: RecordId(6)

2025-05-21T11:59:39.469+0800 D3 STORAGE [conn1] fetched CCE metadata: { ns: "db2.conca", ident: "collection-0--9135487495984222338", md: { ns: "db2.conca", options: { uuid: UUID("4ce9d174-a254-442b-9d24-90fa114fa669") }, indexes: [], prefix: -1 } }

2025-05-21T11:59:39.469+0800 D3 STORAGE [conn1] returning metadata: md: { ns: "db2.conca", options: { uuid: UUID("4ce9d174-a254-442b-9d24-90fa114fa669") }, indexes: [], prefix: -1 }

2025-05-21T11:59:39.470+0800 D1 STORAGE [conn1] db2.conca: clearing plan cache - collection info cache reset

2025-05-21T11:59:39.470+0800 D1 STORAGE [conn1] Registering collection db2.conca with UUID 4ce9d174-a254-442b-9d24-90fa114fa669

2025-05-21T11:59:39.471+0800 D3 STORAGE [conn1] looking up metadata for: RecordId(6)

2025-05-21T11:59:39.471+0800 D3 STORAGE [conn1] fetched CCE metadata: { ns: "db2.conca", ident: "collection-0--9135487495984222338", md: { ns: "db2.conca", options: { uuid: UUID("4ce9d174-a254-442b-9d24-90fa114fa669") }, indexes: [], prefix: -1 } }

2025-05-21T11:59:39.472+0800 D3 STORAGE [conn1] returning metadata: md: { ns: "db2.conca", options: { uuid: UUID("4ce9d174-a254-442b-9d24-90fa114fa669") }, indexes: [], prefix: -1 }

2025-05-21T11:59:39.473+0800 D3 STORAGE [conn1] looking up metadata for: RecordId(6)

2025-05-21T11:59:39.475+0800 D3 STORAGE [conn1] recording new metadata: { md: { ns: "db2.conca", options: { uuid: UUID("4ce9d174-a254-442b-9d24-90fa114fa669") }, indexes: [ { spec: { v: 2, key: { _id: 1 }, name: "_id_" }, ready: false, multikey: false, multikeyPaths: { _id: BinData(0, 00) }, head: 0, prefix: -1, backgroundSecondary: false, runTwoPhaseBuild: false, versionOfBuild: 1 } ], prefix: -1 }, idxIdent: { _id_: "index-1--9135487495984222338" }, ns: "db2.conca", ident: "collection-0--9135487495984222338" }

2025-05-21T11:59:39.476+0800 D3 STORAGE [conn1] looking up metadata for: RecordId(6)

2025-05-21T11:59:39.477+0800 D3 STORAGE [conn1] looking up metadata for: RecordId(6)

2025-05-21T11:59:39.477+0800 D3 STORAGE [conn1] fetched CCE metadata: { md: { ns: "db2.conca", options: { uuid: UUID("4ce9d174-a254-442b-9d24-90fa114fa669") }, indexes: [ { spec: { v: 2, key: { _id: 1 }, name: "_id_" }, ready: false, multikey: false, multikeyPaths: { _id: BinData(0, 00) }, head: 0, prefix: -1, backgroundSecondary: false, runTwoPhaseBuild: false, versionOfBuild: 1 } ], prefix: -1 }, idxIdent: { _id_: "index-1--9135487495984222338" }, ns: "db2.conca", ident: "collection-0--9135487495984222338" }

2025-05-21T11:59:39.478+0800 D3 STORAGE [conn1] returning metadata: md: { ns: "db2.conca", options: { uuid: UUID("4ce9d174-a254-442b-9d24-90fa114fa669") }, indexes: [ { spec: { v: 2, key: { _id: 1 }, name: "_id_" }, ready: false, multikey: false, multikeyPaths: { _id: BinData(0, 00) }, head: 0, prefix: -1, backgroundSecondary: false, runTwoPhaseBuild: false, versionOfBuild: 1 } ], prefix: -1 }

2025-05-21T11:59:39.479+0800 D3 STORAGE [conn1] index create string: type=file,internal_page_max=16k,leaf_page_max=16k,checksum=on,prefix_compression=true,block_compressor=,,,,key_format=u,value_format=u,app_metadata=(formatVersion=8),log=(enabled=true)

2025-05-21T11:59:39.479+0800 D2 STORAGE [conn1] WiredTigerKVEngine::createSortedDataInterface ns: db2.conca ident: index-1--9135487495984222338 config: type=file,internal_page_max=16k,leaf_page_max=16k,checksum=on,prefix_compression=true,block_compressor=,,,,key_format=u,value_format=u,app_metadata=(formatVersion=8),log=(enabled=true)

2025-05-21T11:59:39.480+0800 D1 STORAGE [conn1] create uri: table:index-1--9135487495984222338 config: type=file,internal_page_max=16k,leaf_page_max=16k,checksum=on,prefix_compression=true,block_compressor=,,,,key_format=u,value_format=u,app_metadata=(formatVersion=8),log=(enabled=true)
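The config string handed to WiredTiger for `table:index-1--9135487495984222338` is a comma-separated list of `key=value` pairs. Nested groups sit in parentheses, as in `app_metadata=(formatVersion=8)` and `log=(enabled=true)`. The empty `block_compressor=` and the run of empty entries after it look like optional config fragments that happened to be empty in this build. The following minimal sketch splits such a string into top-level pairs. `parseTopLevelConfig` is a hypothetical helper and not WiredTiger's own config parser.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>

// Split a WiredTiger-style configuration string into top-level key/value
// pairs. Commas inside parentheses belong to a nested value such as
// "app_metadata=(formatVersion=8)". Empty entries (",,") are skipped.
// Illustration only; not WiredTiger's config parser.
std::map<std::string, std::string> parseTopLevelConfig(const std::string& config) {
    std::map<std::string, std::string> out;
    std::string item;
    int depth = 0;

    auto flush = [&out](const std::string& entry) {
        if (entry.empty()) return;  // e.g. the ",,,," run after block_compressor=
        const std::size_t eq = entry.find('=');
        if (eq == std::string::npos) {
            out[entry] = "";  // bare key: kept with an empty value (not in this log)
        } else {
            out[entry.substr(0, eq)] = entry.substr(eq + 1);
        }
    };

    for (char c : config) {
        if (c == '(') ++depth;
        if (c == ')') --depth;
        if (c == ',' && depth == 0) {
            flush(item);  // top-level comma ends the current entry
            item.clear();
        } else {
            item += c;
        }
    }
    flush(item);  // last entry has no trailing comma
    return out;
}
```

Applied to the index config string above, it yields 10 keys, with `block_compressor` mapped to an empty value and `app_metadata` mapped to `(formatVersion=8)`.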

2025-05-21T11:59:39.484+0800 D3 STORAGE [conn1] looking up metadata for: RecordId(6)

2025-05-21T11:59:39.484+0800 D3 STORAGE [conn1] looking up metadata for: RecordId(6)

2025-05-21T11:59:39.484+0800 D3 STORAGE [conn1] fetched CCE metadata: { md: { ns: "db2.conca", options: { uuid: UUID("4ce9d174-a254-442b-9d24-90fa114fa669") }, indexes: [ { spec: { v: 2, key: { _id: 1 }, name: "_id_" }, ready: false, multikey: false, multikeyPaths: { _id: BinData(0, 00) }, head: 0, prefix: -1, backgroundSecondary: false, runTwoPhaseBuild: false, versionOfBuild: 1 } ], prefix: -1 }, idxIdent: { _id_: "index-1--9135487495984222338" }, ns: "db2.conca", ident: "collection-0--9135487495984222338" }

2025-05-21T11:59:39.485+0800 D3 STORAGE [conn1] returning metadata: md: { ns: "db2.conca", options: { uuid: UUID("4ce9d174-a254-442b-9d24-90fa114fa669") }, indexes: [ { spec: { v: 2, key: { _id: 1 }, name: "_id_" }, ready: false, multikey: false, multikeyPaths: { _id: BinData(0, 00) }, head: 0, prefix: -1, backgroundSecondary: false, runTwoPhaseBuild: false, versionOfBuild: 1 } ], prefix: -1 }

2025-05-21T11:59:39.486+0800 D3 STORAGE [conn1] looking up metadata for: RecordId(6)

2025-05-21T11:59:39.486+0800 D3 STORAGE [conn1] fetched CCE metadata: { md: { ns: "db2.conca", options: { uuid: UUID("4ce9d174-a254-442b-9d24-90fa114fa669") }, indexes: [ { spec: { v: 2, key: { _id: 1 }, name: "_id_" }, ready: false, multikey: false, multikeyPaths: { _id: BinData(0, 00) }, head: 0, prefix: -1, backgroundSecondary: false, runTwoPhaseBuild: false, versionOfBuild: 1 } ], prefix: -1 }, idxIdent: { _id_: "index-1--9135487495984222338" }, ns: "db2.conca", ident: "collection-0--9135487495984222338" }

2025-05-21T11:59:39.487+0800 D3 STORAGE [conn1] returning metadata: md: { ns: "db2.conca", options: { uuid: UUID("4ce9d174-a254-442b-9d24-90fa114fa669") }, indexes: [ { spec: { v: 2, key: { _id: 1 }, name: "_id_" }, ready: false, multikey: false, multikeyPaths: { _id: BinData(0, 00) }, head: 0, prefix: -1, backgroundSecondary: false, runTwoPhaseBuild: false, versionOfBuild: 1 } ], prefix: -1 }

2025-05-21T11:59:39.490+0800 D3 STORAGE [conn1] looking up metadata for: RecordId(6)

2025-05-21T11:59:39.491+0800 D3 STORAGE [conn1] fetched CCE metadata: { md: { ns: "db2.conca", options: { uuid: UUID("4ce9d174-a254-442b-9d24-90fa114fa669") }, indexes: [ { spec: { v: 2, key: { _id: 1 }, name: "_id_" }, ready: false, multikey: false, multikeyPaths: { _id: BinData(0, 00) }, head: 0, prefix: -1, backgroundSecondary: false, runTwoPhaseBuild: false, versionOfBuild: 1 } ], prefix: -1 }, idxIdent: { _id_: "index-1--9135487495984222338" }, ns: "db2.conca", ident: "collection-0--9135487495984222338" }

2025-05-21T11:59:39.492+0800 D3 STORAGE [conn1] returning metadata: md: { ns: "db2.conca", options: { uuid: UUID("4ce9d174-a254-442b-9d24-90fa114fa669") }, indexes: [ { spec: { v: 2, key: { _id: 1 }, name: "_id_" }, ready: false, multikey: false, multikeyPaths: { _id: BinData(0, 00) }, head: 0, prefix: -1, backgroundSecondary: false, runTwoPhaseBuild: false, versionOfBuild: 1 } ], prefix: -1 }

2025-05-21T11:59:39.493+0800 D2 STORAGE [conn1] WiredTigerUtil::checkApplicationMetadataFormatVersion uri: table:index-1--9135487495984222338 ok range 6 -> 12 current: 8
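The `checkApplicationMetadataFormatVersion` line reports that the new index table's `formatVersion` (8, from `app_metadata=(formatVersion=8)`) lies inside the accepted range 6 to 12. The sketch below shows that kind of range check, assuming the version is read directly from the `app_metadata` text. Both functions are hypothetical and do not reproduce `WiredTigerUtil`'s implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <string>

// Pull the integer after "formatVersion=" out of an app_metadata value
// such as "(formatVersion=8)". Returns nullopt if the key or digits are missing.
std::optional<std::int64_t> extractFormatVersion(const std::string& appMetadata) {
    const std::string key = "formatVersion=";
    const std::size_t pos = appMetadata.find(key);
    if (pos == std::string::npos) return std::nullopt;

    std::size_t i = pos + key.size();
    std::int64_t value = 0;
    bool sawDigit = false;
    while (i < appMetadata.size() && appMetadata[i] >= '0' && appMetadata[i] <= '9') {
        value = value * 10 + (appMetadata[i] - '0');
        sawDigit = true;
        ++i;
    }
    if (!sawDigit) return std::nullopt;
    return value;
}

// Accept the table only if minVersion <= formatVersion <= maxVersion,
// mirroring the "ok range 6 -> 12 current: 8" message.
bool formatVersionInRange(const std::string& appMetadata,
                          std::int64_t minVersion, std::int64_t maxVersion) {
    const auto version = extractFormatVersion(appMetadata);
    return version && *version >= minVersion && *version <= maxVersion;
}
```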

2025-05-21T11:59:39.493+0800 D1 STORAGE [conn1] db2.conca: clearing plan cache - collection info cache reset

2025-05-21T11:59:39.494+0800 I INDEX [conn1] index build: done building index _id_ on ns db2.conca

2025-05-21T11:59:39.494+0800 D3 STORAGE [conn1] looking up metadata for: RecordId(6)

2025-05-21T11:59:39.494+0800 D3 STORAGE [conn1] fetched CCE metadata: { md: { ns: "db2.conca", options: { uuid: UUID("4ce9d174-a254-442b-9d24-90fa114fa669") }, indexes: [ { spec: { v: 2, key: { _id: 1 }, name: "_id_" }, ready: false, multikey: false, multikeyPaths: { _id: BinData(0, 00) }, head: 0, prefix: -1, backgroundSecondary: false, runTwoPhaseBuild: false, versionOfBuild: 1 } ], prefix: -1 }, idxIdent: { _id_: "index-1--9135487495984222338" }, ns: "db2.conca", ident: "collection-0--9135487495984222338" }

2025-05-21T11:59:39.495+0800 D3 STORAGE [conn1] returning metadata: md: { ns: "db2.conca", options: { uuid: UUID("4ce9d174-a254-442b-9d24-90fa114fa669") }, indexes: [ { spec: { v: 2, key: { _id: 1 }, name: "_id_" }, ready: false, multikey: false, multikeyPaths: { _id: BinData(0, 00) }, head: 0, prefix: -1, backgroundSecondary: false, runTwoPhaseBuild: false, versionOfBuild: 1 } ], prefix: -1 }

2025-05-21T11:59:39.496+0800 D3 STORAGE [conn1] looking up metadata for: RecordId(6)

2025-05-21T11:59:39.496+0800 D3 STORAGE [conn1] recording new metadata: { md: { ns: "db2.conca", options: { uuid: UUID("4ce9d174-a254-442b-9d24-90fa114fa669") }, indexes: [ { spec: { v: 2, key: { _id: 1 }, name: "_id_" }, ready: true, multikey: false, multikeyPaths: { _id: BinData(0, 00) }, head: 0, prefix: -1, backgroundSecondary: false, runTwoPhaseBuild: false, versionOfBuild: 1 } ], prefix: -1 }, idxIdent: { _id_: "index-1--9135487495984222338" }, ns: "db2.conca", ident: "collection-0--9135487495984222338" }

2025-05-21T11:59:39.497+0800 D3 STORAGE [conn1] looking up metadata for: RecordId(6)

2025-05-21T11:59:39.497+0800 D3 STORAGE [conn1] fetched CCE metadata: { md: { ns: "db2.conca", options: { uuid: UUID("4ce9d174-a254-442b-9d24-90fa114fa669") }, indexes: [ { spec: { v: 2, key: { _id: 1 }, name: "_id_" }, ready: true, multikey: false, multikeyPaths: { _id: BinData(0, 00) }, head: 0, prefix: -1, backgroundSecondary: false, runTwoPhaseBuild: false, versionOfBuild: 1 } ], prefix: -1 }, idxIdent: { _id_: "index-1--9135487495984222338" }, ns: "db2.conca", ident: "collection-0--9135487495984222338" }

2025-05-21T11:59:39.498+0800 D3 STORAGE [conn1] returning metadata: md: { ns: "db2.conca", options: { uuid: UUID("4ce9d174-a254-442b-9d24-90fa114fa669") }, indexes: [ { spec: { v: 2, key: { _id: 1 }, name: "_id_" }, ready: true, multikey: false, multikeyPaths: { _id: BinData(0, 00) }, head: 0, prefix: -1, backgroundSecondary: false, runTwoPhaseBuild: false, versionOfBuild: 1 } ], prefix: -1 }
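Read top to bottom, the log shows a two-step lifecycle for the `_id_` index. First the spec is recorded in the collection's catalog metadata with `ready: false`. Then the WiredTiger table `table:index-1--9135487495984222338` is created, `index build: done building index _id_` is logged, and the same entry is rewritten with `ready: true`. The following sketch models only that flag transition. `IndexEntry` and `CatalogEntrySketch` are hypothetical types, not MongoDB's catalog classes.

```cpp
#include <cassert>
#include <map>
#include <stdexcept>
#include <string>

// Hypothetical model of one index entry in the collection's metadata.
struct IndexEntry {
    std::string ident;   // e.g. "index-1--9135487495984222338"
    bool ready = false;  // false while the index is being built
};

class CatalogEntrySketch {
public:
    // Step 1: record the index spec with ready=false before building it.
    void prepareIndex(const std::string& name, const std::string& ident) {
        _indexes[name] = IndexEntry{ident, false};
    }

    // Step 2: once the build is done, rewrite the entry with ready=true.
    void markReady(const std::string& name) {
        auto it = _indexes.find(name);
        if (it == _indexes.end()) throw std::out_of_range("unknown index: " + name);
        it->second.ready = true;
    }

    bool isReady(const std::string& name) const {
        auto it = _indexes.find(name);
        return it != _indexes.end() && it->second.ready;
    }

private:
    std::map<std::string, IndexEntry> _indexes;
};
```

Keeping the flag false until the build finishes means the index is never treated as usable while it is still incomplete.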

2025-05-21T11:59:39.499+0800 D3 STORAGE [conn1] WT commit_transaction for snapshot id 1679

2025-05-21T11:59:39.499+0800 D2 STORAGE [conn1] CUSTOM COMMIT class mongo::WiredTigerRecordStore::NumRecordsChange

2025-05-21T11:59:39.499+0800 D2 STORAGE [conn1] CUSTOM COMMIT class mongo::WiredTigerRecordStore::DataSizeChange

2025-05-21T11:59:39.500+0800 D2 STORAGE [conn1] CUSTOM COMMIT class mongo::DurableCatalogImpl::AddIdentChange
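Finally, the storage transaction commits (`WT commit_transaction for snapshot id 1679`). Three `CUSTOM COMMIT` lines follow, naming `NumRecordsChange`, `DataSizeChange` and `AddIdentChange`. This suggests that bookkeeping changes are registered during the operation and run when the transaction commits. The following simplified sketch shows that register-then-commit/rollback pattern. `Change`, `UnitOfWork` and `NumRecordsDelta` are hypothetical names, not MongoDB's RecoveryUnit API.

```cpp
#include <cassert>
#include <memory>
#include <utility>
#include <vector>

// A deferred side effect tied to a storage transaction.
class Change {
public:
    virtual ~Change() = default;
    virtual void commit() = 0;
    virtual void rollback() = 0;
};

// Collects changes and runs them only when the transaction ends.
class UnitOfWork {
public:
    void registerChange(std::unique_ptr<Change> change) {
        _changes.push_back(std::move(change));
    }

    // Commit: apply every registered change in registration order.
    void commit() {
        for (auto& c : _changes) c->commit();
        _changes.clear();
    }

    // Abort: roll back in reverse order, since later changes may depend on earlier ones.
    void abort() {
        for (auto it = _changes.rbegin(); it != _changes.rend(); ++it) (*it)->rollback();
        _changes.clear();
    }

private:
    std::vector<std::unique_ptr<Change>> _changes;
};

// Hypothetical change: adjust an in-memory record counter only on commit.
class NumRecordsDelta : public Change {
public:
    NumRecordsDelta(long long& counter, long long delta) : _counter(counter), _delta(delta) {}
    void commit() override { _counter += _delta; }
    void rollback() override {}  // nothing was applied yet, so nothing to undo

private:
    long long& _counter;
    long long _delta;
};
```

In this sketch the counter is only updated on commit, so an aborted transaction leaves it untouched.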
