I set up Milvus on a JD Cloud server.

Reference tutorial: https://blog.csdn.net/withme977/article/details/137270087

First, make sure Docker and Docker Compose are installed:

docker --version

docker-compose --version
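If you want to script this check (for example on a fresh server), here is a minimal Python sketch. It only reports the first line that "--version" prints for each tool. The helper name tool_version is made up for this example. Newer Docker installs may ship Compose V2 as the "docker compose" plugin instead of a docker-compose binary. In that case the second entry is reported as not found.

```python
import shutil
import subprocess


def tool_version(cmd):
    """Return the first output line of `<cmd> --version`, or None if cmd[0] is not on PATH."""
    if shutil.which(cmd[0]) is None:
        return None
    result = subprocess.run(cmd + ["--version"], capture_output=True, text=True, check=False)
    output = (result.stdout or result.stderr).strip()
    return output.splitlines()[0] if output else None


for cmd in (["docker"], ["docker-compose"]):
    print(cmd[0], "->", tool_version(cmd) or "not found")
```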

Create a working directory for Milvus:

mkdir milvus

# Enter the new directory

cd milvus

Next, download the docker-compose.yml and milvus.yaml files.

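Download docker-compose.yml (choose either the CPU or the GPU variant), or use the version I provide below. My version adds the Attu web UI and mounts milvus.yaml: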

# CPU standalone

wget https://github.com/milvus-io/milvus/releases/download/v2.3.5/milvus-standalone-docker-compose.yml -O docker-compose.yml

# GPU standalone (use instead of the CPU file)

wget https://github.com/milvus-io/milvus/releases/download/v2.3.5/milvus-standalone-docker-compose-gpu.yml -O docker-compose.yml

# docker-compose.yml

version: '3.5'

services:
  etcd:
    container_name: milvus-etcd
    image: quay.io/coreos/etcd:v3.5.5
    environment:
      - ETCD_AUTO_COMPACTION_MODE=revision
      - ETCD_AUTO_COMPACTION_RETENTION=1000
      - ETCD_QUOTA_BACKEND_BYTES=4294967296
      - ETCD_SNAPSHOT_COUNT=50000
    volumes:
      - ${DOCKER_VOLUME_DIRECTORY:-.}/volumes/etcd:/etcd
    command: etcd -advertise-client-urls=http://127.0.0.1:2379 -listen-client-urls http://0.0.0.0:2379 --data-dir /etcd
    healthcheck:
      test: ["CMD", "etcdctl", "endpoint", "health"]
      interval: 30s
      timeout: 20s
      retries: 3

  minio:
    container_name: milvus-minio
    image: minio/minio:RELEASE.2023-03-20T20-16-18Z
    environment:
      MINIO_ACCESS_KEY: minioadmin
      MINIO_SECRET_KEY: minioadmin
    ports:
      - "9001:9001"
      - "19531:19530"
    volumes:
      - ${DOCKER_VOLUME_DIRECTORY:-.}/volumes/minio:/minio_data
    command: minio server /minio_data --console-address ":9001"
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:9000/minio/health/live"]
      interval: 30s
      timeout: 20s
      retries: 3

  standalone:
    container_name: milvus-standalone
    image: milvusdb/milvus:v2.3.5
    command: ["milvus", "run", "standalone"]
    security_opt:
      - seccomp:unconfined
    environment:
      ETCD_ENDPOINTS: etcd:2379
      MINIO_ADDRESS: minio:9000
    volumes:
      - ${DOCKER_VOLUME_DIRECTORY:-.}/volumes/milvus:/var/lib/milvus
      - ${DOCKER_VOLUME_DIRECTORY:-.}/milvus.yaml:/milvus/configs/milvus.yaml
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:9091/healthz"]
      interval: 30s
      start_period: 90s
      timeout: 20s
      retries: 3
    ports:
      - "19530:19530"
      - "9091:9091"
    depends_on:
      - "etcd"
      - "minio"

  # Add the attu container below at this position in the original docker-compose file; mind the version number and the leading indentation.
  attu:
    container_name: attu
    image: zilliz/attu:v2.3.6
    environment:
      MILVUS_URL: milvus-standalone:19530
    ports:
      - "8000:3000" # the external (host) port 8000 can be changed
    depends_on:
      - "standalone"

networks:
  default:
    name: milvus
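The depends_on lists above fix the order in which Compose starts the services. In the short list form used here, Compose waits only for the dependency containers to be started, not for them to report healthy. The start order is a topological sort of that dependency graph. The sketch below copies the graph by hand and uses Python's standard graphlib module (Python 3.9+). It does not read the YAML file.

```python
from graphlib import TopologicalSorter

# Service -> services it depends on, copied by hand from docker-compose.yml above.
DEPENDS_ON = {
    "etcd": [],
    "minio": [],
    "standalone": ["etcd", "minio"],
    "attu": ["standalone"],
}

# static_order() yields every service after all of its dependencies.
order = list(TopologicalSorter(DEPENDS_ON).static_order())
print(order)
```

The relative order of etcd and minio is not fixed, because neither depends on the other.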

Note: the standalone service mounts its data directory and its config file from these two host paths:

volumes:
  - ${DOCKER_VOLUME_DIRECTORY:-.}/volumes/milvus:/var/lib/milvus
  - ${DOCKER_VOLUME_DIRECTORY:-.}/milvus.yaml:/milvus/configs/milvus.yaml
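Where do these paths end up on the host? Compose replaces ${DOCKER_VOLUME_DIRECTORY:-.} with the variable's value, or with "." when the variable is unset or empty. Relative paths are resolved against the directory that holds docker-compose.yml, which here is the milvus directory. The sketch below reproduces only this one ${NAME:-default} form. It is not a full implementation of Compose variable interpolation, and expand_default is a made-up helper name.

```python
import os
import re

# Matches the ${NAME:-default} form used in the volume entries above.
_PATTERN = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*):-([^}]*)\}")


def expand_default(text, env=None):
    """Expand ${NAME:-default}: use env[NAME] if it is set and non-empty, else default."""
    env = os.environ if env is None else env

    def repl(match):
        name, default = match.group(1), match.group(2)
        value = env.get(name, "")
        return value if value else default

    return _PATTERN.sub(repl, text)


mount = "${DOCKER_VOLUME_DIRECTORY:-.}/volumes/milvus:/var/lib/milvus"
print(expand_default(mount, env={}))
print(expand_default(mount, env={"DOCKER_VOLUME_DIRECTORY": "/data/milvus"}))
```

So, unless you export DOCKER_VOLUME_DIRECTORY, the data lands under ./volumes inside the milvus directory.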

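Download milvus.yaml, or use mine below. Keep the file name milvus.yaml and put it in the milvus directory, because docker-compose.yml mounts ${DOCKER_VOLUME_DIRECTORY:-.}/milvus.yaml into the container: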

# Replace v2.3.5 with your own Milvus version

wget https://raw.githubusercontent.com/milvus-io/milvus/v2.3.5/configs/milvus.yaml
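If you use a different version, both download links change only in the tag. The sketch below builds the URLs from the patterns used above. It assumes other releases publish files under the same names, which I have not checked for every version. The helper names are made up for this example.

```python
BASE = "https://github.com/milvus-io/milvus/releases/download"
RAW = "https://raw.githubusercontent.com/milvus-io/milvus"


def compose_url(version, gpu=False):
    """Release-asset URL of the standalone docker-compose file (pattern copied from the wget lines)."""
    suffix = "-gpu" if gpu else ""
    return f"{BASE}/{version}/milvus-standalone-docker-compose{suffix}.yml"


def config_url(version):
    """URL of configs/milvus.yaml at the given release tag."""
    return f"{RAW}/{version}/configs/milvus.yaml"


for url in (compose_url("v2.3.5"), compose_url("v2.3.5", gpu=True), config_url("v2.3.5")):
    print(url)
```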

# milvus.yaml

# Licensed to the LF AI & Data foundation under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# Related configuration of etcd, used to store Milvus metadata & service discovery.

etcd:
  endpoints: localhost:2379
  rootPath: by-dev # The root path where data is stored in etcd
  metaSubPath: meta # metaRootPath = rootPath + '/' + metaSubPath
  kvSubPath: kv # kvRootPath = rootPath + '/' + kvSubPath
  log:
    level: info # Only supports debug, info, warn, error, panic, or fatal. Default 'info'.
    # path is one of:
    #  - "default" as os.Stderr,
    #  - "stderr" as os.Stderr,
    #  - "stdout" as os.Stdout,
    #  - file path to append server logs to.
    # please adjust in embedded Milvus: /tmp/milvus/logs/etcd.log
    path: stdout
  ssl:
    enabled: false # Whether to support ETCD secure connection mode
    tlsCert: /path/to/etcd-client.pem # path to your cert file
    tlsKey: /path/to/etcd-client-key.pem # path to your key file
    tlsCACert: /path/to/ca.pem # path to your CACert file
    # TLS min version
    # Optional values: 1.0, 1.1, 1.2, 1.3.
    # We recommend using version 1.2 and above.
    tlsMinVersion: 1.3
  use:
    embed: false # Whether to enable embedded Etcd (an in-process EtcdServer).
  data:
    dir: default.etcd # Embedded Etcd only. please adjust in embedded Milvus: /tmp/milvus/etcdData/

metastore:
  # Default value: etcd
  # Valid values: [etcd, tikv]
  type: etcd

# Related configuration of tikv, used to store Milvus metadata.

# Notice that when TiKV is enabled for metastore, you still need to have etcd for service discovery.

# TiKV is a good option when the metadata size requires better horizontal scalability.

tikv:
  # Note that the default pd port of tikv is 2379, which conflicts with etcd.
  endpoints: 127.0.0.1:2389
  rootPath: by-dev # The root path where data is stored
  metaSubPath: meta # metaRootPath = rootPath + '/' + metaSubPath
  kvSubPath: kv # kvRootPath = rootPath + '/' + kvSubPath

localStorage:
  path: /var/lib/milvus/data/ # please adjust in embedded Milvus: /tmp/milvus/data/

# Related configuration of MinIO/S3/GCS or any other service supports S3 API, which is responsible for data persistence for Milvus.

# We refer to the storage service as MinIO/S3 in the following description for simplicity.

minio:
  address: localhost # Address of MinIO/S3
  port: 9000 # Port of MinIO/S3
  accessKeyID: minioadmin # accessKeyID of MinIO/S3
  secretAccessKey: minioadmin # MinIO/S3 encryption string
  useSSL: false # Access to MinIO/S3 with SSL
  bucketName: a-bucket # Bucket name in MinIO/S3
  rootPath: files # The root path where the message is stored in MinIO/S3
  # Whether to useIAM role to access S3/GCS instead of access/secret keys
  # For more information, refer to
  # aws: https://docs.aws.amazon.com/IAM/latest/UserGuide/id_roles_use.html
  # gcp: https://cloud.google.com/storage/docs/access-control/iam
  # aliyun (ack): https://www.alibabacloud.com/help/en/container-service-for-kubernetes/latest/use-rrsa-to-enforce-access-control
  # aliyun (ecs): https://www.alibabacloud.com/help/en/elastic-compute-service/latest/attach-an-instance-ram-role
  useIAM: false
  # Cloud Provider of S3. Supports: "aws", "gcp", "aliyun".
  # You can use "aws" for other cloud provider supports S3 API with signature v4, e.g.: minio
  # You can use "gcp" for other cloud provider supports S3 API with signature v2
  # You can use "aliyun" for other cloud provider uses virtual host style bucket
  # When useIAM enabled, only "aws", "gcp", "aliyun" is supported for now
  cloudProvider: aws
  # Custom endpoint for fetch IAM role credentials. when useIAM is true & cloudProvider is "aws".
  # Leave it empty if you want to use AWS default endpoint
  iamEndpoint:
  # Log level for aws sdk log.
  # Supported level: off, fatal, error, warn, info, debug, trace
  logLevel: fatal
  # Cloud data center region
  region: ""
  # Cloud whether use virtual host bucket mode
  useVirtualHost: false
  # timeout for request time in milliseconds
  requestTimeoutMs: 10000

# Milvus supports four MQ: rocksmq(based on RockDB), natsmq(embedded nats-server), Pulsar and Kafka.

# You can change your mq by setting mq.type field.

# If you don't set mq.type field as default, there is a note about enabling priority if we config multiple mq in this file.

# 1. standalone(local) mode: rocksmq(default) > Pulsar > Kafka

# 2. cluster mode: Pulsar(default) > Kafka (rocksmq and natsmq is unsupported in cluster mode)

mq:
  # Default value: "default"
  # Valid values: [default, pulsar, kafka, rocksmq, natsmq]
  type: default

# Related configuration of pulsar, used to manage Milvus logs of recent mutation operations, output streaming log, and provide log publish-subscribe services.

pulsar:
  address: localhost # Address of pulsar
  port: 6650 # Port of Pulsar
  webport: 80 # Web port of pulsar, if you connect directly without proxy, should use 8080
  maxMessageSize: 5242880 # 5 * 1024 * 1024 Bytes, Maximum size of each message in pulsar.
  tenant: public
  namespace: default
  requestTimeout: 60 # pulsar client global request timeout in seconds
  enableClientMetrics: false # Whether to register pulsar client metrics into milvus metrics path.

# If you want to enable kafka, needs to comment the pulsar configs

# kafka:
#   brokerList:
#   saslUsername:
#   saslPassword:
#   saslMechanisms: PLAIN
#   securityProtocol: SASL_SSL
#   readTimeout: 10 # read message timeout in seconds

rocksmq:
  # The path where the message is stored in rocksmq
  # please adjust in embedded Milvus: /tmp/milvus/rdb_data
  path: /var/lib/milvus/rdb_data
  lrucacheratio: 0.06 # rocksdb cache memory ratio
  rocksmqPageSize: 67108864 # 64 MB, 64 * 1024 * 1024 bytes, The size of each page of messages in rocksmq
  retentionTimeInMinutes: 4320 # 3 days, 3 * 24 * 60 minutes, The retention time of the message in rocksmq.
  retentionSizeInMB: 8192 # 8 GB, 8 * 1024 MB, The retention size of the message in rocksmq.
  compactionInterval: 86400 # 1 day, trigger rocksdb compaction every day to remove deleted data
  # compaction compression type, only support use 0,7.
  # 0 means not compress, 7 will use zstd
  # len of types means num of rocksdb level.
  compressionTypes: [0, 0, 7, 7, 7]

# natsmq configuration.

# more detail: https://docs.nats.io/running-a-nats-service/configuration

natsmq:
  server: # server side configuration for natsmq.
    port: 4222 # 4222 by default, Port for nats server listening.
    storeDir: /var/lib/milvus/nats # /var/lib/milvus/nats by default, directory to use for JetStream storage of nats.
    maxFileStore: 17179869184 # (B) 16GB by default, Maximum size of the 'file' storage.
    maxPayload: 8388608 # (B) 8MB by default, Maximum number of bytes in a message payload.
    maxPending: 67108864 # (B) 64MB by default, Maximum number of bytes buffered for a connection Applies to client connections.
    initializeTimeout: 4000 # (ms) 4s by default, waiting for initialization of natsmq finished.
    monitor:
      trace: false # false by default, If true enable protocol trace log messages.
      debug: false # false by default, If true enable debug log messages.
      logTime: true # true by default, If set to false, log without timestamps.
      logFile: /tmp/milvus/logs/nats.log # /tmp/milvus/logs/nats.log by default, Log file path relative to .. of milvus binary if use relative path.
      logSizeLimit: 536870912 # (B) 512MB by default, Size in bytes after the log file rolls over to a new one.
    retention:
      maxAge: 4320 # (min) 3 days by default, Maximum age of any message in the P-channel.
      maxBytes: # (B) None by default, How many bytes the single P-channel may contain. Removing oldest messages if the P-channel exceeds this size.
      maxMsgs: # None by default, How many message the single P-channel may contain. Removing oldest messages if the P-channel exceeds this limit.

# Related configuration of rootCoord, used to handle data definition language (DDL) and data control language (DCL) requests

rootCoord:
  dmlChannelNum: 16 # The number of dml channels created at system startup
  maxDatabaseNum: 64 # Maximum number of database
  maxPartitionNum: 4096 # Maximum number of partitions in a collection
  minSegmentSizeToEnableIndex: 1024 # It's a threshold. When the segment size is less than this value, the segment will not be indexed
  importTaskExpiration: 900 # (in seconds) Duration after which an import task will expire (be killed). Default 900 seconds (15 minutes).
  importTaskRetention: 86400 # (in seconds) Milvus will keep the record of import tasks for at least `importTaskRetention` seconds. Default 86400, seconds (24 hours).
  enableActiveStandby: false
  # can specify ip for example
  # ip: 127.0.0.1
  ip: # if not specify address, will use the first unicastable address as local ip
  port: 53100
  grpc:
    serverMaxSendSize: 536870912
    serverMaxRecvSize: 268435456
    clientMaxSendSize: 268435456
    clientMaxRecvSize: 536870912

# Related configuration of proxy, used to validate client requests and reduce the returned results.

proxy:
  timeTickInterval: 200 # ms, the interval that proxy synchronize the time tick
  healthCheckTimeout: 3000 # ms, the interval that to do component healthy check
  msgStream:
    timeTick:
      bufSize: 512
  maxNameLength: 255 # Maximum length of name for a collection or alias
  # Maximum number of fields in a collection.
  # As of today (2.2.0 and after) it is strongly DISCOURAGED to set maxFieldNum >= 64.
  # So adjust at your risk!
  maxFieldNum: 64
  maxShardNum: 16 # Maximum number of shards in a collection
  maxDimension: 32768 # Maximum dimension of a vector
  # Whether to produce gin logs.\n
  # please adjust in embedded Milvus: false
  ginLogging: true
  ginLogSkipPaths: "/" # skipped url path for gin log split by comma
  maxTaskNum: 1024 # max task number of proxy task queue
  accessLog:
    enable: false
    # Log filename, set as "" to use stdout.
    # filename: ""
    # define formatters for access log by XXX:{format: XXX, method:[XXX,XXX]}
    formatters:
      # "base" formatter could not set methods
      # all method will use "base" formatter default
      base:
        # will not print access log if set as ""
        format: "[$time_now] [ACCESS] <$user_name: $user_addr> $method_name [status: $method_status] [code: $error_code] [sdk: $sdk_version] [msg: $error_msg] [traceID: $trace_id] [timeCost: $time_cost]"
      query:
        format: "[$time_now] [ACCESS] <$user_name: $user_addr> $method_name [status: $method_status] [code: $error_code] [sdk: $sdk_version] [msg: $error_msg] [traceID: $trace_id] [timeCost: $time_cost] [database: $database_name] [collection: $collection_name] [partitions: $partition_name] [expr: $method_expr]"
        # set formatter owners by method name(method was all milvus external interface)
        # all method will use base formatter default
        # one method only could use one formatter
        # if set a method formatter mutiple times, will use random fomatter.
        methods: ["Query", "Search", "Delete"]
    # localPath: /tmp/milvus_accesslog // log file rootpath
    # maxSize: 64 # max log file size(MB) of singal log file, mean close when time <= 0.
    # rotatedTime: 0 # max time range of singal log file, mean close when time <= 0;
    # maxBackups: 8 # num of reserved backups. will rotate and crate a new backup when access log file trigger maxSize or rotatedTime.
    # cacheSize: 10240 # write cache of accesslog in Byte
    # minioEnable: false # update backups to milvus minio when minioEnable is true.
    # remotePath: "access_log/" # file path when update backups to minio
    # remoteMaxTime: 0 # max time range(in Hour) of backups in minio, 0 means close time retention.
  http:
    enabled: true # Whether to enable the http server
    debug_mode: false # Whether to enable http server debug mode
  # can specify ip for example
  # ip: 127.0.0.1
  ip: # if not specify address, will use the first unicastable address as local ip
  port: 19530
  internalPort: 19529
  grpc:
    serverMaxSendSize: 268435456
    serverMaxRecvSize: 67108864
    clientMaxSendSize: 268435456
    clientMaxRecvSize: 67108864

# Related configuration of queryCoord, used to manage topology and load balancing for the query nodes, and handoff from growing segments to sealed segments.

queryCoord:
  autoHandoff: true # Enable auto handoff
  autoBalance: true # Enable auto balance
  balancer: ScoreBasedBalancer # Balancer to use
  globalRowCountFactor: 0.1 # expert parameters, only used by scoreBasedBalancer
  scoreUnbalanceTolerationFactor: 0.05 # expert parameters, only used by scoreBasedBalancer
  reverseUnBalanceTolerationFactor: 1.3 #expert parameters, only used by scoreBasedBalancer
  overloadedMemoryThresholdPercentage: 90 # The threshold percentage that memory overload
  balanceIntervalSeconds: 60
  memoryUsageMaxDifferencePercentage: 30
  checkInterval: 1000
  channelTaskTimeout: 60000 # 1 minute
  segmentTaskTimeout: 120000 # 2 minute
  distPullInterval: 500
  heartbeatAvailableInterval: 10000 # 10s, Only QueryNodes which fetched heartbeats within the duration are available
  loadTimeoutSeconds: 600
  checkHandoffInterval: 5000
  # can specify ip for example
  # ip: 127.0.0.1
  ip: # if not specify address, will use the first unicastable address as local ip
  port: 19531
  grpc:
    serverMaxSendSize: 536870912
    serverMaxRecvSize: 268435456
    clientMaxSendSize: 268435456
    clientMaxRecvSize: 536870912
  taskMergeCap: 1
  taskExecutionCap: 256
  enableActiveStandby: false # Enable active-standby
  brokerTimeout: 5000 # broker rpc timeout in milliseconds

# Related configuration of queryNode, used to run hybrid search between vector and scalar data.

queryNode:
  dataSync:
    flowGraph:
      maxQueueLength: 16 # Maximum length of task queue in flowgraph
      maxParallelism: 1024 # Maximum number of tasks executed in parallel in the flowgraph
  stats:
    publishInterval: 1000 # Interval for querynode to report node information (milliseconds)
  segcore:
    cgoPoolSizeRatio: 2.0 # cgo pool size ratio to max read concurrency
    knowhereThreadPoolNumRatio: 4
    # Use more threads to make better use of SSD throughput in disk index.
    # This parameter is only useful when enable-disk = true.
    # And this value should be a number greater than 1 and less than 32.
    chunkRows: 128 # The number of vectors in a chunk.
    interimIndex: # build a vector temperate index for growing segment or binlog to accelerate search
      enableIndex: true
      nlist: 128 # segment index nlist
      nprobe: 16 # nprobe to search segment, based on your accuracy requirement, must smaller than nlist
      memExpansionRate: 1.15 # the ratio of building interim index memory usage to raw data
  loadMemoryUsageFactor: 1 # The multiply factor of calculating the memory usage while loading segments
  enableDisk: false # enable querynode load disk index, and search on disk index
  maxDiskUsagePercentage: 95
  cache:
    enabled: true # deprecated, TODO: remove it
    memoryLimit: 2147483648 # 2 GB, 2 * 1024 *1024 *1024 # deprecated, TODO: remove it
    readAheadPolicy: willneed # The read ahead policy of chunk cache, options: `normal, random, sequential, willneed, dontneed`
  grouping:
    enabled: true
    maxNQ: 1000
    topKMergeRatio: 20
  scheduler:
    receiveChanSize: 10240
    unsolvedQueueSize: 10240
    # maxReadConcurrentRatio is the concurrency ratio of read task (search task and query task).
    # Max read concurrency would be the value of runtime.NumCPU * maxReadConcurrentRatio.
    # It defaults to 2.0, which means max read concurrency would be the value of runtime.NumCPU * 2.
    # Max read concurrency must greater than or equal to 1, and less than or equal to runtime.NumCPU * 100.
    # (0, 100]
    maxReadConcurrentRatio: 1
    cpuRatio: 10 # ratio used to estimate read task cpu usage.
    maxTimestampLag: 86400
    # read task schedule policy: fifo(by default), user-task-polling.
    scheduleReadPolicy:
      # fifo: A FIFO queue support the schedule.
      # user-task-polling:
      #     The user's tasks will be polled one by one and scheduled.
      #     Scheduling is fair on task granularity.
      #     The policy is based on the username for authentication.
      #     And an empty username is considered the same user.
      #     When there are no multi-users, the policy decay into FIFO
      name: fifo
      maxPendingTask: 10240
      # user-task-polling configure:
      taskQueueExpire: 60 # 1 min by default, expire time of inner user task queue since queue is empty.
      enableCrossUserGrouping: false # false by default Enable Cross user grouping when using user-task-polling policy. (close it if task of any user can not merge others).
      maxPendingTaskPerUser: 1024 # 50 by default, max pending task in scheduler per user.
  # can specify ip for example
  # ip: 127.0.0.1
  ip: # if not specify address, will use the first unicastable address as local ip
  port: 21123
  grpc:
    serverMaxSendSize: 536870912
    serverMaxRecvSize: 268435456
    clientMaxSendSize: 268435456
    clientMaxRecvSize: 536870912

indexCoord:
  bindIndexNodeMode:
    enable: false
    address: localhost:22930
    withCred: false
    nodeID: 0
  segment:
    minSegmentNumRowsToEnableIndex: 1024 # It's a threshold. When the segment num rows is less than this value, the segment will not be indexed

indexNode:
  scheduler:
    buildParallel: 1
  enableDisk: true # enable index node build disk vector index
  maxDiskUsagePercentage: 95
  # can specify ip for example
  # ip: 127.0.0.1
  ip: # if not specify address, will use the first unicastable address as local ip
  port: 21121
  grpc:
    serverMaxSendSize: 536870912
    serverMaxRecvSize: 268435456
    clientMaxSendSize: 268435456
    clientMaxRecvSize: 536870912

dataCoord:
  channel:
    watchTimeoutInterval: 300 # Timeout on watching channels (in seconds). Datanode tickler update watch progress will reset timeout timer.
    balanceSilentDuration: 300 # The duration before the channelBalancer on datacoord to run
    balanceInterval: 360 #The interval for the channelBalancer on datacoord to check balance status
  segment:
    maxSize: 512 # Maximum size of a segment in MB
    diskSegmentMaxSize: 2048 # Maximum size of a segment in MB for collection which has Disk index
    sealProportion: 0.23
    # The time of the assignment expiration in ms
    # Warning! this parameter is an expert variable and closely related to data integrity. Without specific
    # target and solid understanding of the scenarios, it should not be changed. If it's necessary to alter
    # this parameter, make sure that the newly changed value is larger than the previous value used before restart
    # otherwise there could be a large possibility of data loss
    assignmentExpiration: 2000
    maxLife: 86400 # The max lifetime of segment in seconds, 24*60*60
    # If a segment didn't accept dml records in maxIdleTime and the size of segment is greater than
    # minSizeFromIdleToSealed, Milvus will automatically seal it.
    # The max idle time of segment in seconds, 10*60.
    maxIdleTime: 600
    minSizeFromIdleToSealed: 16 # The min size in MB of segment which can be idle from sealed.
    # The max number of binlog file for one segment, the segment will be sealed if
    # the number of binlog file reaches to max value.
    maxBinlogFileNumber: 32
    smallProportion: 0.5 # The segment is considered as "small segment" when its # of rows is smaller than
    # (smallProportion * segment max # of rows).
    # A compaction will happen on small segments if the segment after compaction will have
    compactableProportion: 0.85
    # over (compactableProportion * segment max # of rows) rows.
    # MUST BE GREATER THAN OR EQUAL TO <smallProportion>!!!
    # During compaction, the size of segment # of rows is able to exceed segment max # of rows by (expansionRate-1) * 100%.
    expansionRate: 1.25
  enableCompaction: true # Enable data segment compaction
  compaction:
    enableAutoCompaction: true
    rpcTimeout: 10 # compaction rpc request timeout in seconds
    maxParallelTaskNum: 10 # max parallel compaction task number
    indexBasedCompaction: true
  enableGarbageCollection: true
  gc:
    interval: 3600 # gc interval in seconds
    missingTolerance: 3600 # file meta missing tolerance duration in seconds, 3600
    dropTolerance: 10800 # file belongs to dropped entity tolerance duration in seconds. 10800
  enableActiveStandby: false
  # can specify ip for example
  # ip: 127.0.0.1
  ip: # if not specify address, will use the first unicastable address as local ip
  port: 13333
  grpc:
    serverMaxSendSize: 536870912
    serverMaxRecvSize: 268435456
    clientMaxSendSize: 268435456
    clientMaxRecvSize: 536870912

dataNode:
  dataSync:
    flowGraph:
      maxQueueLength: 16 # Maximum length of task queue in flowgraph
      maxParallelism: 1024 # Maximum number of tasks executed in parallel in the flowgraph
    maxParallelSyncTaskNum: 6 # Maximum number of sync tasks executed in parallel in each flush manager
    skipMode:
      # when there are only timetick msg in flowgraph for a while (longer than coldTime),
      # flowGraph will turn on skip mode to skip most timeticks to reduce cost, especially there are a lot of channels
      enable: true
      skipNum: 4
      coldTime: 60
  segment:
    insertBufSize: 16777216 # Max buffer size to flush for a single segment.
    deleteBufBytes: 67108864 # Max buffer size to flush del for a single channel
    syncPeriod: 600 # The period to sync segments if buffer is not empty.
  # can specify ip for example
  # ip: 127.0.0.1
  ip: # if not specify address, will use the first unicastable address as local ip
  port: 21124
  grpc:
    serverMaxSendSize: 536870912
    serverMaxRecvSize: 268435456
    clientMaxSendSize: 268435456
    clientMaxRecvSize: 536870912
  memory:
    forceSyncEnable: true # `true` to force sync if memory usage is too high
    forceSyncSegmentNum: 1 # number of segments to sync, segments with top largest buffer will be synced.
    watermarkStandalone: 0.2 # memory watermark for standalone, upon reaching this watermark, segments will be synced.
    watermarkCluster: 0.5 # memory watermark for cluster, upon reaching this watermark, segments will be synced.
  timetick:
    byRPC: true
  channel:
    # specify the size of global work pool of all channels
    # if this parameter <= 0, will set it as the maximum number of CPUs that can be executing
    # suggest to set it bigger on large collection numbers to avoid blocking
    workPoolSize: -1
    # specify the size of global work pool for channel checkpoint updating
    # if this parameter <= 0, will set it as 1000
    # suggest to set it bigger on large collection numbers to avoid blocking
    updateChannelCheckpointMaxParallel: 1000

# Configures the system log output.

log:
  level: info # Only supports debug, info, warn, error, panic, or fatal. Default 'info'.
  file:
    rootPath: # root dir path to put logs, default "" means no log file will print. please adjust in embedded Milvus: /tmp/milvus/logs
    maxSize: 300 # MB
    maxAge: 10 # Maximum time for log retention in day.
    maxBackups: 20
  format: text # text or json
  stdout: true # Stdout enable or not

grpc:
  log:
    level: WARNING
  serverMaxSendSize: 536870912
  serverMaxRecvSize: 268435456
  clientMaxSendSize: 268435456
  clientMaxRecvSize: 536870912
  client:
    compressionEnabled: false
    dialTimeout: 200
    keepAliveTime: 10000
    keepAliveTimeout: 20000
    maxMaxAttempts: 10
    initialBackOff: 0.2 # seconds
    maxBackoff: 10 # seconds
    backoffMultiplier: 2.0 # deprecated

# Configure the proxy tls enable.

tls:
  serverPemPath: configs/cert/server.pem
  serverKeyPath: configs/cert/server.key
  caPemPath: configs/cert/ca.pem

common:
  chanNamePrefix:
    cluster: by-dev
    rootCoordTimeTick: rootcoord-timetick
    rootCoordStatistics: rootcoord-statistics
    rootCoordDml: rootcoord-dml
    replicateMsg: replicate-msg
    rootCoordDelta: rootcoord-delta
    search: search
    searchResult: searchResult
    queryTimeTick: queryTimeTick
    dataCoordStatistic: datacoord-statistics-channel
    dataCoordTimeTick: datacoord-timetick-channel
    dataCoordSegmentInfo: segment-info-channel
  subNamePrefix:
    proxySubNamePrefix: proxy
    rootCoordSubNamePrefix: rootCoord
    queryNodeSubNamePrefix: queryNode
    dataCoordSubNamePrefix: dataCoord
    dataNodeSubNamePrefix: dataNode
  defaultPartitionName: _default # default partition name for a collection
  defaultIndexName: _default_idx # default index name
  entityExpiration: -1 # Entity expiration in seconds, CAUTION -1 means never expire
  indexSliceSize: 16 # MB
  threadCoreCoefficient:
    highPriority: 10 # This parameter specify how many times the number of threads is the number of cores in high priority thread pool
    middlePriority: 5 # This parameter specify how many times the number of threads is the number of cores in middle priority thread pool
    lowPriority: 1 # This parameter specify how many times the number of threads is the number of cores in low priority thread pool
  DiskIndex:
    MaxDegree: 56
    SearchListSize: 100
    PQCodeBudgetGBRatio: 0.125
    BuildNumThreadsRatio: 1
    SearchCacheBudgetGBRatio: 0.1
    LoadNumThreadRatio: 8
    BeamWidthRatio: 4
  consistencyLevelUsedInDelete: "Bounded"
  gracefulTime: 5000 # milliseconds. it represents the interval (in ms) by which the request arrival time needs to be subtracted in the case of Bounded Consistency.
  gracefulStopTimeout: 1800 # seconds. it will force quit the server if the graceful stop process is not completed during this time.
  storageType: remote # please adjust in embedded Milvus: local, available values are [local, remote, opendal], value minio is deprecated, use remote instead
  # Default value: auto
  # Valid values: [auto, avx512, avx2, avx, sse4_2]
  # This configuration is only used by querynode and indexnode, it selects CPU instruction set for Searching and Index-building.
  simdType: auto
  security:
    authorizationEnabled: true
    # The superusers will ignore some system check processes,
    # like the old password verification when updating the credential
    # superUsers: root
    tlsMode: 0
  session:
    ttl: 30 # ttl value when session granting a lease to register service
    retryTimes: 30 # retry times when session sending etcd requests
  # preCreatedTopic decides whether using existed topic
  preCreatedTopic:
    enabled: false
    # support pre-created topics
    # the name of pre-created topics
    names: ["topic1", "topic2"]
    # need to set a separated topic to stand for currently consumed timestamp for each channel
    timeticker: "timetick-channel"
  ImportMaxFileSize: 17179869184 # 16 * 1024 * 1024 * 1024
  # max file size to import for bulkInsert
  locks:
    metrics:
      enable: false
    threshold:
      info: 500 # minimum milliseconds for printing durations in info level
      warn: 1000 # minimum milliseconds for printing durations in warn level
  ttMsgEnabled: true # Whether the instance disable sending ts messages
  bloomFilterSize: 100000
  maxBloomFalsePositive: 0.05

# QuotaConfig, configurations of Milvus quota and limits.

# By default, we enable:

# 1. TT protection;

# 2. Memory protection.

# 3. Disk quota protection.

# You can enable:

# 1. DML throughput limitation;

# 2. DDL, DQL qps/rps limitation;

# 3. DQL Queue length/latency protection;

# 4. DQL result rate protection;

# If necessary, you can also manually force to deny RW requests.

quotaAndLimits:
  enabled: true # `true` to enable quota and limits, `false` to disable.
  limits:
    maxCollectionNum: 65536
    maxCollectionNumPerDB: 65536
  # quotaCenterCollectInterval is the time interval that quotaCenter
  # collects metrics from Proxies, Query cluster and Data cluster.
  # seconds, (0 ~ 65536)
  quotaCenterCollectInterval: 3
  ddl:
    enabled: false
    collectionRate: -1 # qps, default no limit, rate for CreateCollection, DropCollection, LoadCollection, ReleaseCollection
    partitionRate: -1 # qps, default no limit, rate for CreatePartition, DropPartition, LoadPartition, ReleasePartition
  indexRate:
    enabled: false
    max: -1 # qps, default no limit, rate for CreateIndex, DropIndex
  flushRate:
    enabled: false
    max: -1 # qps, default no limit, rate for flush
  compactionRate:
    enabled: false
    max: -1 # qps, default no limit, rate for manualCompaction
  dml:
    # dml limit rates, default no limit.
    # The maximum rate will not be greater than max.
    enabled: false
    insertRate:
      collection:
        max: -1 # MB/s, default no limit
      max: -1 # MB/s, default no limit
    upsertRate:
      collection:
        max: -1 # MB/s, default no limit
      max: -1 # MB/s, default no limit
    deleteRate:
      collection:
        max: -1 # MB/s, default no limit
      max: -1 # MB/s, default no limit
    bulkLoadRate:
      collection:
        max: -1 # MB/s, default no limit, not support yet. TODO: limit bulkLoad rate
      max: -1 # MB/s, default no limit, not support yet. TODO: limit bulkLoad rate
  dql:
    # dql limit rates, default no limit.
    # The maximum rate will not be greater than max.
    enabled: false
    searchRate:
      collection:
        max: -1 # vps (vectors per second), default no limit
      max: -1 # vps (vectors per second), default no limit
    queryRate:
      collection:
        max: -1 # qps, default no limit
      max: -1 # qps, default no limit
  limitWriting:
    # forceDeny false means dml requests are allowed (except for some
    # specific conditions, such as memory of nodes to water marker), true means always reject all dml requests.
    forceDeny: false
    ttProtection:
      enabled: false
      # maxTimeTickDelay indicates the backpressure for DML Operations.
      # DML rates would be reduced according to the ratio of time tick delay to maxTimeTickDelay,
      # if time tick delay is greater than maxTimeTickDelay, all DML requests would be rejected.
      # seconds
      maxTimeTickDelay: 300
    memProtection:
      # When memory usage > memoryHighWaterLevel, all dml requests would be rejected;
      # When memoryLowWaterLevel < memory usage < memoryHighWaterLevel, reduce the dml rate;
      # When memory usage < memoryLowWaterLevel, no action.
      enabled: true
      dataNodeMemoryLowWaterLevel: 0.85 # (0, 1], memoryLowWaterLevel in DataNodes
      dataNodeMemoryHighWaterLevel: 0.95 # (0, 1], memoryHighWaterLevel in DataNodes
      queryNodeMemoryLowWaterLevel: 0.85 # (0, 1], memoryLowWaterLevel in QueryNodes
      queryNodeMemoryHighWaterLevel: 0.95 # (0, 1], memoryHighWaterLevel in QueryNodes
    growingSegmentsSizeProtection:
      # No action will be taken if the growing segments size is less than the low watermark.
      # When the growing segments size exceeds the low watermark, the dml rate will be reduced,
      # but the rate will not be lower than `minRateRatio * dmlRate`.
      enabled: false
      minRateRatio: 0.5
      lowWaterLevel: 0.2
      highWaterLevel: 0.4
    diskProtection:
      enabled: true # When the total file size of object storage is greater than `diskQuota`, all dml requests would be rejected;
      diskQuota: -1 # MB, (0, +inf), default no limit
      diskQuotaPerCollection: -1 # MB, (0, +inf), default no limit
  limitReading:
    # forceDeny false means dql requests are allowed (except for some
    # specific conditions, such as collection has been dropped), true means always reject all dql requests.
    forceDeny: false
    queueProtection:
      enabled: false
      # nqInQueueThreshold indicated that the system was under backpressure for Search/Query path.
      # If NQ in any QueryNode's queue is greater than nqInQueueThreshold, search&query rates would gradually cool off
      # until the NQ in queue no longer exceeds nqInQueueThreshold. We think of the NQ of query request as 1.
      # int, default no limit
      nqInQueueThreshold: -1
      # queueLatencyThreshold indicated that the system was under backpressure for Search/Query path.
      # If dql latency of queuing is greater than queueLatencyThreshold, search&query rates would gradually cool off
      # until the latency of queuing no longer exceeds queueLatencyThreshold.
      # The latency here refers to the averaged latency over a period of time.
      # milliseconds, default no limit
      queueLatencyThreshold: -1
    resultProtection:
      enabled: false
      # maxReadResultRate indicated that the system was under backpressure for Search/Query path.
      # If dql result rate is greater than maxReadResultRate, search&query rates would gradually cool off
      # until the read result rate no longer exceeds maxReadResultRate.
      # MB/s, default no limit
      maxReadResultRate: -1
    # colOffSpeed is the speed of search&query rates cool off.
    # (0, 1]
    coolOffSpeed: 0.9

trace:
  # trace exporter type, default is stdout,
  # optional values: ['stdout', 'jaeger', 'otlp']
  exporter: stdout
  # fraction of traceID based sampler,
  # optional values: [0, 1]
  # Fractions >= 1 will always sample. Fractions < 0 are treated as zero.
  sampleFraction: 0
  otlp:
    endpoint: # "127.0.0.1:4318"
    secure: true
  jaeger:
    url: # "http://127.0.0.1:14268/api/traces"
    # when exporter is jaeger should set the jaeger's URL

autoIndex:
  params:
    build: '{"M": 18,"efConstruction": 240,"index_type": "HNSW", "metric_type": "IP"}'

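Edit milvus.yaml: set common > security > authorizationEnabled to true to turn on user authentication (the copy above already has it set). Clients must then log in. The built-in account is root, with the default password Milvus.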
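You can also flip the flag without a text editor. A YAML load/dump round trip would drop every comment in milvus.yaml, so the sketch below does a line-based regex replacement instead. It assumes the key appears exactly once, which holds for the stock file. The function names are hypothetical.

```python
import re

# Matches the authorizationEnabled line, keeping its indentation and any trailing comment.
_AUTH_LINE = re.compile(r"^([ \t]*authorizationEnabled:[ \t]*)\S+", re.MULTILINE)


def set_authorization(yaml_text, enabled=True):
    """Return yaml_text with common.security.authorizationEnabled set to true/false."""
    value = "true" if enabled else "false"
    new_text, count = _AUTH_LINE.subn(lambda m: m.group(1) + value, yaml_text)
    if count != 1:
        raise ValueError(f"expected exactly one authorizationEnabled key, found {count}")
    return new_text


def enable_auth_in_file(path="milvus.yaml"):
    """Hypothetical helper: rewrite the config file in place with authentication on."""
    with open(path, encoding="utf-8") as f:
        text = f.read()
    with open(path, "w", encoding="utf-8") as f:
        f.write(set_authorization(text, enabled=True))


sample = "common:\n  security:\n    authorizationEnabled: false\n    tlsMode: 0\n"
print(set_authorization(sample))
```

Run enable_auth_in_file("milvus.yaml") in the milvus directory before starting the containers. Milvus reads its configuration at startup, so restart it after the change.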

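Edit docker-compose.yml to set up the volume and port mappings (the volumes and ports entries of each service), in particular the mount of milvus.yaml into the standalone container.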

Start Milvus:

# Pull the images

docker-compose pull

# Start the containers in the background

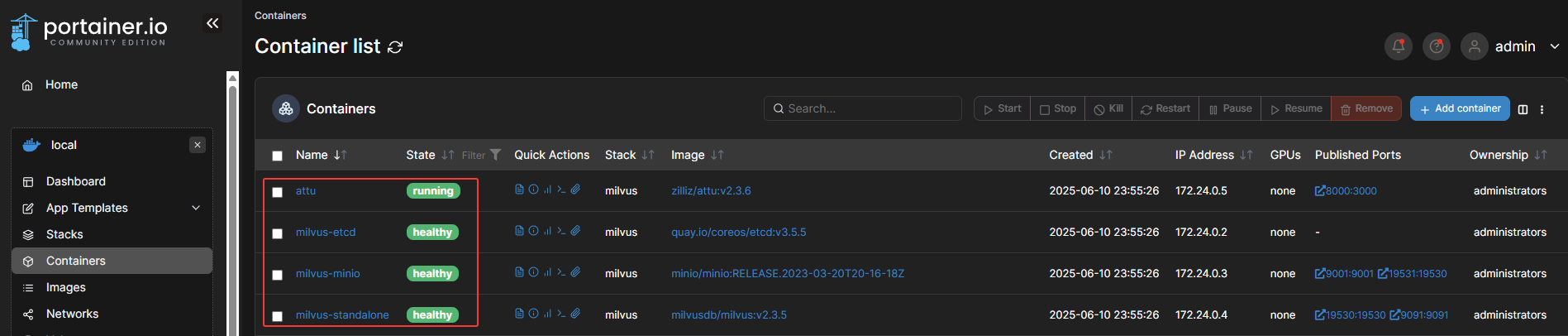

docker-compose up -d

# Check the container status (including health)

docker-compose ps -a

# Stop the containers

docker-compose down
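Milvus can take a minute or two to become healthy after docker-compose up -d (the compose file gives it a 90 s start_period). Instead of re-running docker-compose ps, you can poll the same /healthz endpoint on port 9091 that the container's healthcheck uses. The sketch below is standard library only. The function names are made up, and the check parameter exists only so the polling logic can be tested without a server.

```python
import time
import urllib.error
import urllib.request


def http_ok(url, timeout=5.0):
    """Return True if GET url answers with HTTP 200, False on any error."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        return False


def wait_until_healthy(url, attempts=30, delay=3.0, check=http_ok):
    """Call check(url) up to `attempts` times, sleeping `delay` seconds between tries."""
    for i in range(attempts):
        if check(url):
            return True
        if i < attempts - 1:
            time.sleep(delay)
    return False
```

For example, wait_until_healthy("http://127.0.0.1:9091/healthz") on the server returns True once Milvus is up.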

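Open the firewall ports. I use JD Cloud; on another provider, look up the equivalent setting. With the docker-compose.yml above, the published host ports are 19530 (Milvus), 9091 (Milvus health check), 8000 (Attu web UI) and 9001 (MinIO console). At minimum, open 19530 for remote SDK access, and 8000 if you want to use Attu.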
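Before testing with pymilvus, you can check from your local machine whether the ports are reachable at all. A False usually means the firewall rule is missing or the container is not running. The sketch below tries a plain TCP connection and assumes the host ports from the docker-compose.yml above. Adjust PORTS if you changed any mapping.

```python
import socket

# Host ports published by the docker-compose.yml above (adjust if you remapped them).
PORTS = {19530: "Milvus", 9091: "Milvus health check", 8000: "Attu web UI"}


def port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_ports(host, ports=PORTS, timeout=3.0):
    """Map each port number to whether it accepted a TCP connection."""
    return {port: port_open(host, port, timeout) for port in ports}
```

For example, check_ports("your-server-ip") returns a dict such as {19530: True, 9091: False, 8000: True}, depending on what is actually open.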

Test whether your local machine can connect to Milvus:

from pymilvus import connections, utility
import time


def test_connection(host, port, retries=3, delay=2):
    """Test the connection to Milvus.

    Args:
        host: Milvus server address
        port: Milvus server port
        retries: number of attempts
        delay: seconds to wait between attempts
    """
    for attempt in range(retries):
        try:
            print(f"\nConnecting to Milvus ({attempt + 1}/{retries})...")
            print(f"Address: {host}:{port}")
            # Try to connect
            connections.connect(
                alias="default",
                host=host,
                port=port,
                timeout=10,  # connection timeout: 10 seconds
                user="root",  # or the user name you configured
                password="Milvus",  # default root password, unless you changed it
            )
            # Get the server version
            version = utility.get_server_version()
            print("✅ Connected!")
            print(f"Milvus version: {version}")
            # List all collections
            collections = utility.list_collections()
            print(f"Collections: {collections}")
            # Disconnect
            connections.disconnect("default")
            return True
        except Exception as e:
            print(f"❌ Connection failed (attempt {attempt + 1}/{retries}):")
            print(f"Error: {e}")
            if attempt < retries - 1:
                print(f"Retrying in {delay} seconds...")
                time.sleep(delay)
            else:
                print("\nAll attempts failed. Please check:")
                print("1. whether the Milvus service is running")
                print("2. whether the server address and port are correct")
                print("3. whether the network connection works")
                print("4. whether the firewall allows the connection")
                return False


if __name__ == "__main__":
    # Test the remote connection
    print("\n=== Testing remote connection ===")
    test_connection("117.72.38.155", "19530")  # replace with your server's public IP

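To change a port, edit the ports entries in docker-compose.yml. In each mapping, the left number is the host port and the right number is the container port, so change only the left side. For example, to expose Milvus on host port 19531, change the standalone service's mapping to the following, and connect to port 19531 from the test script:

ports:
  - "19531:19530"
  - "9091:9091"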

Then run:

docker-compose down   # stop and remove the old containers (images and data are kept)

docker-compose up -d  # restart the containers with the new configuration
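Why change only the left side? Clients outside the server connect to the host port. Containers on the same Compose network, such as Attu with MILVUS_URL: milvus-standalone:19530, keep talking to the container port, so that setting does not change. The sketch below splits the short "HOST:CONTAINER" form used in this post. It deliberately ignores IP prefixes, port ranges and /udp suffixes that Compose also accepts, and parse_port_mapping is a made-up name.

```python
def parse_port_mapping(spec):
    """Split a short-syntax "HOST:CONTAINER" port mapping into two ints."""
    host, container = spec.split(":")
    return int(host), int(container)


for spec in ("19530:19530", "19531:19530", "8000:3000"):
    host, container = parse_port_mapping(spec)
    print(f"host port {host} -> container port {container}")
```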
