Assignment:

Find an image dataset on Kaggle, train a CNN on it, and visualize the trained model with Grad-CAM.

Splitting the dataset
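The split script below calls `train_test_split` twice: first with `test_size=0.2`, then with `test_size=0.25` on the remaining 80%. That gives roughly 60/20/20 per class. scikit-learn rounds a float `test_size` up (`ceil`) and gives the rest to the training side. The sketch below reproduces that arithmetic so you can predict the per-class counts. `split_sizes` is a hypothetical helper, not part of scikit-learn.

```python
import math


def split_sizes(n, test_frac=0.2, val_frac_of_rest=0.25):
    """Hypothetical helper: (train, val, test) image counts for one class folder of n images."""
    n_test = math.ceil(test_frac * n)                # first call: test_size=0.2, rounded up
    n_rest = n - n_test                              # train_val part
    n_val = math.ceil(val_frac_of_rest * n_rest)     # second call: test_size=0.25 on the rest
    return n_rest - n_val, n_val, n_test


print(split_sizes(100))  # (60, 20, 20)
print(split_sizes(733))  # (439, 147, 147)
```

Because both splits round up, classes whose size is not a multiple of 5 end up with slightly fewer than 60% of their images in the training split.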

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torchvision import datasets, transforms
from torch.utils.data import DataLoader
import matplotlib.pyplot as plt
import numpy as np
from PIL import Image
import os
from sklearn.model_selection import train_test_split
from shutil import copyfile
import cv2
from torch.nn import functional as F

# Split the dataset
data_root = "flowers"  # dataset root directory
classes = ["daisy", "tulip", "rose", "sunflower", "dandelion"]

for folder in ["train", "val", "test"]:
    os.makedirs(os.path.join(data_root, folder), exist_ok=True)

for cls in classes:
    cls_path = os.path.join(data_root, cls)
    imgs = [f for f in os.listdir(cls_path) if f.lower().endswith((".jpg", ".jpeg", ".png"))]
    # Split: 20% test; 25% of the remaining 80% for validation (20% overall); 60% train
    train_val, test = train_test_split(imgs, test_size=0.2, random_state=42)
    train, val = train_test_split(train_val, test_size=0.25, random_state=42)
    # Copy into per-class subfolders under train/val/test
    # (key fix: ImageFolder expects the layout split/class/image)
    for split, imgs_list in zip(["train", "val", "test"], [train, val, test]):
        split_class_path = os.path.join(data_root, split, cls)  # e.g. flowers/train/daisy/
        os.makedirs(split_class_path, exist_ok=True)
        for img in imgs_list:
            copyfile(os.path.join(cls_path, img), os.path.join(split_class_path, img))
```

Data preprocessing
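Both pipelines end with `transforms.Normalize` using the ImageNet statistics. For each channel, this computes `(x - mean) / std` on the `[0, 1]` tensor produced by `ToTensor`. The NumPy sketch below shows that arithmetic and its inverse. The inverse is handy when you want to display a normalized tensor again. `normalize` and `denormalize` are illustrative re-implementations, not torchvision code.

```python
import numpy as np

# ImageNet per-channel statistics, the same values passed to transforms.Normalize
MEAN = np.array([0.485, 0.456, 0.406]).reshape(3, 1, 1)
STD = np.array([0.229, 0.224, 0.225]).reshape(3, 1, 1)


def normalize(x):
    """Per-channel (x - mean) / std on a CHW array with values in [0, 1]."""
    return (x - MEAN) / STD


def denormalize(x):
    """Inverse of normalize(): recovers [0, 1] pixel values for display."""
    return x * STD + MEAN


rng = np.random.default_rng(0)
img = rng.random((3, 4, 4))  # fake 3-channel 4x4 image in [0, 1]
print(np.allclose(denormalize(normalize(img)), img))  # True
# A black pixel in the red channel maps to -0.485 / 0.229
print(round(float(normalize(np.zeros((3, 1, 1)))[0, 0, 0]), 3))  # -2.118
```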

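```python
# Data preprocessing (random rotation added to the augmentation)
# Font settings kept from the original; only needed if plot labels contain Chinese characters
plt.rcParams["font.family"] = ["SimHei"]
plt.rcParams["axes.unicode_minus"] = False  # render minus signs correctly with SimHei

# Check whether a GPU is available
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print(f"Using device: {device}")

# Training-set augmentation (generic processing for color images)
train_transform = transforms.Compose([
    transforms.Resize((224, 224)),                         # resize to 224x224 (CNN input size)
    transforms.RandomCrop(224, padding=4),                 # pad by 4 px, then randomly crop back to 224
    transforms.RandomHorizontalFlip(),                     # horizontal flip with probability 0.5
    transforms.RandomRotation(15),                         # new: random rotation in [-15, 15] degrees
    transforms.ColorJitter(brightness=0.2, contrast=0.2),  # color jitter
    transforms.ToTensor(),                                 # PIL image -> float tensor in [0, 1]
    transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225)),  # ImageNet mean/std
])

# Validation/test sets: resize and normalize only, no augmentation
test_transform = transforms.Compose([
    transforms.Resize((224, 224)),
    transforms.ToTensor(),
    transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225)),
])
```

Loading the dataset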
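`datasets.ImageFolder` does not follow the order of the `classes` list from the split script. It sorts the subfolder names alphabetically and numbers them in that order. So `train_dataset.classes`, and therefore the label indices, come out alphabetical. The plain-Python sketch below mimics that ordering. `class_to_idx` here is a hypothetical helper, not the torchvision method of the same name.

```python
def class_to_idx(folder_names):
    """Hypothetical helper mimicking ImageFolder: sort folder names, then number them."""
    names = sorted(folder_names)
    return names, {name: i for i, name in enumerate(names)}


split_order = ["daisy", "tulip", "rose", "sunflower", "dandelion"]  # order used in the split script
names, mapping = class_to_idx(split_order)
print(names)             # ['daisy', 'dandelion', 'rose', 'sunflower', 'tulip']
print(mapping["tulip"])  # 4
```

This is why predictions should be mapped back to names through `train_dataset.classes` rather than through the hand-written `classes` list.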

```python
# Data loaders (unchanged)
data_root = "flowers"  # dataset root; train/, val/ and test/ each hold the 5 class subfolders
train_dataset = datasets.ImageFolder(os.path.join(data_root, "train"), transform=train_transform)
val_dataset = datasets.ImageFolder(os.path.join(data_root, "val"), transform=test_transform)
test_dataset = datasets.ImageFolder(os.path.join(data_root, "test"), transform=test_transform)

# Create the data loaders
batch_size = 32
train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True, num_workers=2)
val_loader = DataLoader(val_dataset, batch_size=batch_size, shuffle=False, num_workers=2)
test_loader = DataLoader(test_dataset, batch_size=batch_size, shuffle=False, num_workers=2)

# Class names, taken automatically from the folder names
class_names = train_dataset.classes
print(f"Detected classes: {class_names}")  # should list 5 class names
```

Defining the model
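The `256 * 14 * 14` input size of `fc1` follows from the output-size formula for `Conv2d`/`MaxPool2d` with dilation 1: `floor((H + 2p - k) / s) + 1`. The sketch below walks a 224-pixel side through the four blocks. It also counts the parameters of `fc1`, which holds most of the model's weights. `conv_out` is an illustrative helper, not a PyTorch function.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a Conv2d/MaxPool2d layer (dilation 1, floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1


size, channels = 224, 3
for out_channels in [32, 64, 128, 256]:         # the four conv blocks of FlowerCNN
    size = conv_out(size, kernel=3, padding=1)  # 3x3 conv with padding=1 keeps the size
    size = conv_out(size, kernel=2, stride=2)   # 2x2 max-pool with stride 2 halves it
    channels = out_channels

flat = channels * size * size
fc1_params = flat * 512 + 512  # weights + bias of nn.Linear(flat, 512)
print(size, flat, fc1_params)  # 14 50176 25690624
```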

```python
# Model definition (a 4th convolutional block added)
class FlowerCNN(nn.Module):
    def __init__(self, num_classes=5):
        super().__init__()
        # Conv block 1
        self.conv1 = nn.Conv2d(3, 32, 3, padding=1)
        self.bn1 = nn.BatchNorm2d(32)
        self.relu1 = nn.ReLU()
        self.pool1 = nn.MaxPool2d(2, 2)  # 224 -> 112
        # Conv block 2
        self.conv2 = nn.Conv2d(32, 64, 3, padding=1)
        self.bn2 = nn.BatchNorm2d(64)
        self.relu2 = nn.ReLU()
        self.pool2 = nn.MaxPool2d(2, 2)  # 112 -> 56
        # Conv block 3
        self.conv3 = nn.Conv2d(64, 128, 3, padding=1)
        self.bn3 = nn.BatchNorm2d(128)
        self.relu3 = nn.ReLU()
        self.pool3 = nn.MaxPool2d(2, 2)  # 56 -> 28
        # Conv block 4 (new)
        self.conv4 = nn.Conv2d(128, 256, 3, padding=1)
        self.bn4 = nn.BatchNorm2d(256)
        self.relu4 = nn.ReLU()
        self.pool4 = nn.MaxPool2d(2, 2)  # 28 -> 14
        # Fully connected layers
        self.fc1 = nn.Linear(256 * 14 * 14, 512)  # four 2x2 poolings: 224 -> 112 -> 56 -> 28 -> 14
        self.dropout = nn.Dropout(0.5)
        self.fc2 = nn.Linear(512, num_classes)  # one output per class

    def forward(self, x):
        x = self.pool1(self.relu1(self.bn1(self.conv1(x))))
        x = self.pool2(self.relu2(self.bn2(self.conv2(x))))
        x = self.pool3(self.relu3(self.bn3(self.conv3(x))))
        x = self.pool4(self.relu4(self.bn4(self.conv4(x))))  # new block
        x = x.view(x.size(0), -1)  # flatten the feature maps
        x = self.dropout(self.relu1(self.fc1(x)))  # ReLU is stateless, so reusing relu1 is harmless
        x = self.fc2(x)
        return x


# Initialize the model and move it to the device
# Training configuration (more epochs, StepLR schedule)
model = FlowerCNN().to(device)
criterion = nn.CrossEntropyLoss()
optimizer = optim.Adam(model.parameters(), lr=0.001)
scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.1)  # LR x0.1 every 10 epochs
```

Training the model
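With `scheduler.step()` called once at the end of every epoch, `StepLR(step_size=10, gamma=0.1)` keeps the learning rate at `0.001 * 0.1 ** (epoch // 10)` for the 0-based `epoch`. The sketch below evaluates that closed form for the three phases of this 30-epoch run. `step_lr` is an illustrative function, not the scheduler itself. In the log of this run, the first decay (start of epoch 11) coincides with validation accuracy rising from 56.53% to 61.04%.

```python
def step_lr(epoch, base_lr=0.001, step_size=10, gamma=0.1):
    """Closed form of StepLR: the LR is multiplied by gamma every step_size epochs."""
    return base_lr * gamma ** (epoch // step_size)


for epoch in (0, 10, 20):
    print(f"epochs {epoch + 1}-{epoch + 10}: lr = {step_lr(epoch):.0e}")
# epochs 1-10: lr = 1e-03
# epochs 11-20: lr = 1e-04
# epochs 21-30: lr = 1e-05
```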

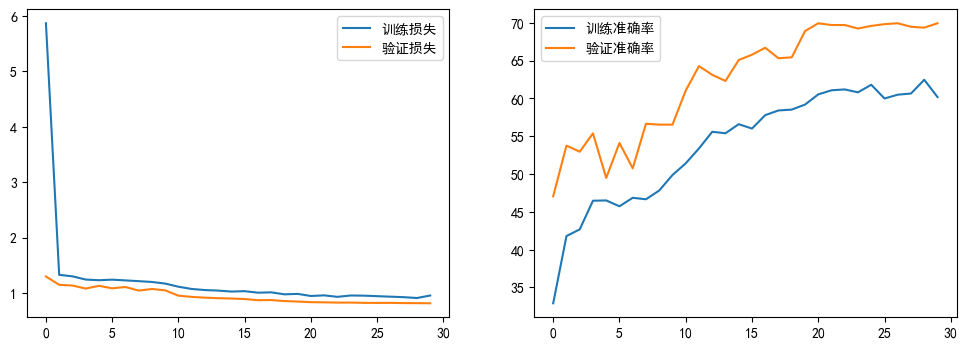

```python
def train_model(epochs=30):
    best_val_acc = 0.0
    train_loss, val_loss, train_acc, val_acc = [], [], [], []
    for epoch in range(epochs):
        # ---- Training ----
        model.train()
        running_loss, correct, total = 0.0, 0, 0
        for data, target in train_loader:
            data, target = data.to(device), target.to(device)
            optimizer.zero_grad()
            outputs = model(data)
            loss = criterion(outputs, target)
            loss.backward()
            optimizer.step()
            running_loss += loss.item()
            _, pred = torch.max(outputs, 1)
            correct += (pred == target).sum().item()
            total += target.size(0)
        # Epoch metrics
        epoch_train_loss = running_loss / len(train_loader)
        epoch_train_acc = 100 * correct / total

        # ---- Validation ----
        model.eval()
        val_running_loss, val_correct, val_total = 0.0, 0, 0
        with torch.no_grad():
            for data, target in val_loader:
                data, target = data.to(device), target.to(device)
                outputs = model(data)
                val_running_loss += criterion(outputs, target).item()
                _, pred = torch.max(outputs, 1)
                val_correct += (pred == target).sum().item()
                val_total += target.size(0)
        epoch_val_loss = val_running_loss / len(val_loader)
        epoch_val_acc = 100 * val_correct / val_total
        scheduler.step()  # StepLR: advance the schedule once per epoch

        # Record history
        train_loss.append(epoch_train_loss)
        val_loss.append(epoch_val_loss)
        train_acc.append(epoch_train_acc)
        val_acc.append(epoch_val_acc)
        print(f"Epoch {epoch+1}/{epochs} | Train loss: {epoch_train_loss:.4f} | Val acc: {epoch_val_acc:.2f}%")

        # Save the best model so far (by validation accuracy)
        if epoch_val_acc > best_val_acc:
            torch.save(model.state_dict(), "best_model.pth")
            best_val_acc = epoch_val_acc

    # Plot learning curves
    plt.figure(figsize=(12, 4))
    plt.subplot(1, 2, 1)  # loss curves
    plt.plot(train_loss, label="Train loss")
    plt.plot(val_loss, label="Val loss")
    plt.legend()
    plt.subplot(1, 2, 2)  # accuracy curves
    plt.plot(train_acc, label="Train acc")
    plt.plot(val_acc, label="Val acc")
    plt.legend()
    plt.show()
    return best_val_acc


# Train, then visualize (unchanged)
print("Starting training...")
train_model(epochs=30)
print("Training finished, starting visualization...")
```

Output:

```text
Starting training...
Epoch 1/30 | Train loss: 5.8699 | Val acc: 47.05%
Epoch 2/30 | Train loss: 1.3307 | Val acc: 53.76%
Epoch 3/30 | Train loss: 1.3045 | Val acc: 52.95%
Epoch 4/30 | Train loss: 1.2460 | Val acc: 55.38%
Epoch 5/30 | Train loss: 1.2342 | Val acc: 49.48%
Epoch 6/30 | Train loss: 1.2442 | Val acc: 54.10%
Epoch 7/30 | Train loss: 1.2309 | Val acc: 50.75%
Epoch 8/30 | Train loss: 1.2172 | Val acc: 56.65%
Epoch 9/30 | Train loss: 1.2025 | Val acc: 56.53%
Epoch 10/30 | Train loss: 1.1733 | Val acc: 56.53%
Epoch 11/30 | Train loss: 1.1167 | Val acc: 61.04%
Epoch 12/30 | Train loss: 1.0763 | Val acc: 64.28%
Epoch 13/30 | Train loss: 1.0564 | Val acc: 63.12%
Epoch 14/30 | Train loss: 1.0469 | Val acc: 62.31%
Epoch 15/30 | Train loss: 1.0295 | Val acc: 65.09%
Epoch 16/30 | Train loss: 1.0365 | Val acc: 65.78%
Epoch 17/30 | Train loss: 1.0091 | Val acc: 66.71%
Epoch 18/30 | Train loss: 1.0152 | Val acc: 65.32%
Epoch 19/30 | Train loss: 0.9794 | Val acc: 65.43%
Epoch 20/30 | Train loss: 0.9875 | Val acc: 68.90%
Epoch 21/30 | Train loss: 0.9496 | Val acc: 69.94%
Epoch 22/30 | Train loss: 0.9608 | Val acc: 69.71%
Epoch 23/30 | Train loss: 0.9342 | Val acc: 69.71%
Epoch 24/30 | Train loss: 0.9586 | Val acc: 69.25%
Epoch 25/30 | Train loss: 0.9554 | Val acc: 69.60%
Epoch 26/30 | Train loss: 0.9463 | Val acc: 69.83%
Epoch 27/30 | Train loss: 0.9373 | Val acc: 69.94%
Epoch 28/30 | Train loss: 0.9282 | Val acc: 69.48%
Epoch 29/30 | Train loss: 0.9130 | Val acc: 69.36%
Epoch 30/30 | Train loss: 0.9585 | Val acc: 69.94%
```

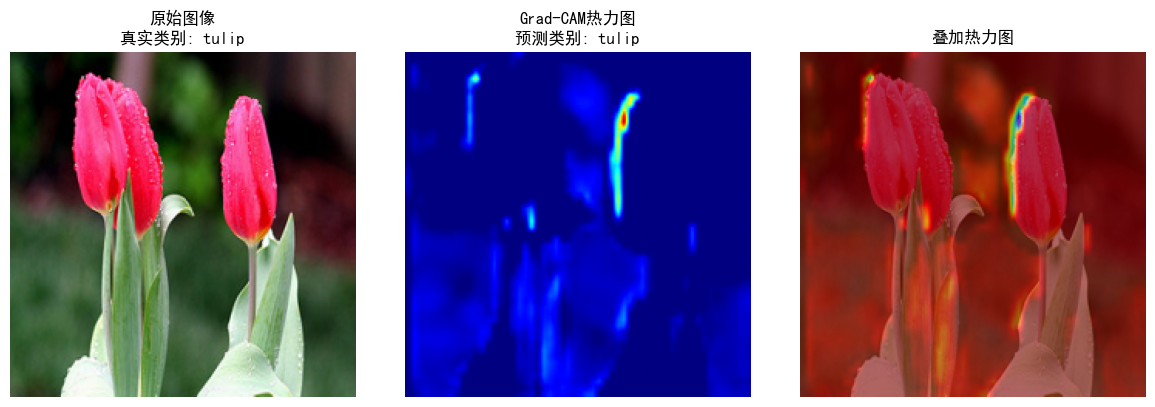
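`train_model` reports the epoch loss as the sum of per-batch mean losses divided by `len(train_loader)`. This weights every batch equally, including a smaller final batch. If you want a per-image average instead, weight each batch mean by its batch size. The sketch below compares the two on made-up numbers. Both functions are hypothetical and only illustrate the arithmetic.

```python
def epoch_loss_per_batch(batch_means):
    """What train_model computes: the plain average of per-batch mean losses."""
    return sum(batch_means) / len(batch_means)


def epoch_loss_per_sample(batch_means, batch_sizes):
    """Sample-weighted average: equal to averaging the loss over every image."""
    total = sum(m * n for m, n in zip(batch_means, batch_sizes))
    return total / sum(batch_sizes)


# Hypothetical epoch: two full batches of 32 images and a final batch of 4
means = [1.0, 1.0, 3.0]
sizes = [32, 32, 4]
print(epoch_loss_per_batch(means))          # 1.6666666666666667
print(epoch_loss_per_sample(means, sizes))  # 76 / 68, about 1.118
```

With 32-image batches and a dataset of a few thousand images, the difference is usually small. It matters mostly when the last batch is tiny and unusually easy or hard.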

Grad-CAM visualization
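For reference, Grad-CAM builds the map for class $c$ from the activation maps $A^k$ of the chosen layer. Each map is weighted by the spatial average of the gradient of the class score $y^c$, which here is the logit from `fc2`, before softmax. $Z = H \times W$ is the number of spatial positions in the layer:

$$
\alpha_k^c = \frac{1}{Z}\sum_{i}\sum_{j}\frac{\partial y^c}{\partial A^k_{ij}},
\qquad
L^c_{\text{Grad-CAM}} = \mathrm{ReLU}\!\left(\sum_k \alpha_k^c A^k\right)
$$

In `GradCAM.generate`, `weights = torch.mean(self.gradients, dim=(2, 3))` corresponds to $\alpha_k^c$. The weighted `torch.sum` followed by `F.relu` corresponds to $L^c_{\text{Grad-CAM}}$. The code then min-max scales the map to $[0, 1]$ and upsamples it to 224×224 for display.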

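```python
class GradCAM:
    def __init__(self, model, target_layer_name="conv3"):
        self.model = model.eval()  # eval mode: BatchNorm uses running stats, Dropout is off
        # Target layer name; must match an attribute of FlowerCNN (note: conv4 is the last conv layer)
        self.target_layer_name = target_layer_name
        self.gradients, self.activations = None, None  # filled in by the hooks
        self.handles = []
        # Register forward and backward hooks on the target layer
        for name, module in model.named_modules():
            if name == target_layer_name:
                self.handles.append(module.register_forward_hook(self.forward_hook))
                # register_backward_hook is deprecated; the full hook reliably reports
                # gradients with respect to the module output
                self.handles.append(module.register_full_backward_hook(self.backward_hook))
                break
        else:
            raise ValueError(f"Layer {target_layer_name!r} not found in model")

    def forward_hook(self, module, input, output):
        """Save the layer's activations during the forward pass."""
        self.activations = output.detach()

    def backward_hook(self, module, grad_input, grad_output):
        """Save the gradient of the target score with respect to the layer output."""
        self.gradients = grad_output[0].detach()  # grad_output is a tuple; [0] is the (B, C, H, W) gradient

    def remove_hooks(self):
        """Detach the hooks so repeated calls do not stack them on the model."""
        for handle in self.handles:
            handle.remove()

    def generate(self, input_image, target_class=None):
        """Return a 224x224 Grad-CAM heatmap in [0, 1] and the explained class index."""
        outputs = self.model(input_image)  # shape: [batch_size, num_classes]
        if target_class is None:
            target_class = torch.argmax(outputs, dim=1).item()
        # Backpropagate only the score of the target class
        self.model.zero_grad()
        one_hot = torch.zeros_like(outputs)
        one_hot[0, target_class] = 1
        outputs.backward(gradient=one_hot)
        # Channel weights: global average pooling of the gradients
        weights = torch.mean(self.gradients, dim=(2, 3))
        # Class activation map: weighted sum of the activation maps
        cam = torch.sum(self.activations[0] * weights[0][:, None, None], dim=0)
        cam = F.relu(cam)
        cam = (cam - cam.min()) / (cam.max() - cam.min() + 1e-8)
        cam = F.interpolate(cam[None, None], size=(224, 224), mode="bilinear", align_corners=False).squeeze()
        return cam.cpu().numpy(), target_class


# Visualization function (key changes: consistent image size and correct color channel order)
def visualize_gradcam(img_path, model, class_names, alpha=0.6):
    """Visualize Grad-CAM for one image.

    :param img_path: path to the test image
    :param model: trained model
    :param class_names: class names in ImageFolder index order
    :param alpha: heatmap opacity (0-1)
    """
    # Load the image and resize it to 224x224 so it matches the heatmap
    img = Image.open(img_path).convert("RGB").resize((224, 224))
    img_np = np.array(img) / 255.0  # RGB, float in [0, 1]
    # Same preprocessing as test_transform (the image is already 224x224)
    transform = transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225)),
    ])
    input_tensor = transform(img).unsqueeze(0).to(device)
    # Generate the Grad-CAM heatmap, then remove the hooks again
    grad_cam = GradCAM(model, target_layer_name="conv3")
    heatmap, pred_class = grad_cam.generate(input_tensor)
    grad_cam.remove_hooks()
    # Colorize: applyColorMap returns BGR, so flip to RGB before plotting and blending
    heatmap = cv2.applyColorMap(np.uint8(255 * heatmap), cv2.COLORMAP_JET)
    heatmap_rgb = heatmap[:, :, ::-1] / 255.0
    # Blend in RGB (both arrays are 224x224x3 floats in [0, 1])
    superimposed = np.clip((1 - alpha) * img_np + alpha * heatmap_rgb, 0, 1)
    # Plot the results
    true_class = os.path.basename(os.path.dirname(img_path))
    plt.figure(figsize=(12, 4))
    plt.subplot(1, 3, 1)
    plt.imshow(img_np)
    plt.title(f"Original image\nTrue class: {true_class}")
    plt.axis("off")
    plt.subplot(1, 3, 2)
    plt.imshow(heatmap_rgb)
    plt.title(f"Grad-CAM heatmap\nPredicted class: {class_names[pred_class]}")
    plt.axis("off")
    plt.subplot(1, 3, 3)
    plt.imshow(superimposed)
    plt.title("Heatmap overlay")
    plt.axis("off")
    plt.tight_layout()
    plt.show()


# Pick a test image (the file must exist at this path). This path is in the original class
# folder, so the image may have been copied into the training split; an image from
# flowers/test/ gives a fairer check.
test_image_path = "flowers/tulip/100930342_92e8746431_n.jpg"
# Run the visualization
visualize_gradcam(test_image_path, model, class_names)
```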
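To see what `GradCAM.generate` does without a trained network, the NumPy sketch below applies the same steps to toy arrays shaped `(C, H, W)`: channel weights from averaged gradients, a weighted sum, ReLU, and min-max scaling. A channel whose gradients are negative on average is suppressed. The sketch also shows the RGB blend used in `visualize_gradcam`. `grad_cam_map` and `overlay` are hypothetical helpers, and the arrays are made up.

```python
import numpy as np


def grad_cam_map(activations, gradients):
    """Grad-CAM for one image from a layer's activations and gradients, both (C, H, W)."""
    weights = gradients.mean(axis=(1, 2))                       # alpha_k: spatial mean of gradients
    cam = np.einsum("k,khw->hw", weights, activations)          # sum_k alpha_k * A^k
    cam = np.maximum(cam, 0.0)                                  # ReLU keeps positive evidence only
    return (cam - cam.min()) / (cam.max() - cam.min() + 1e-8)   # min-max scale to [0, 1]


def overlay(img_rgb, heat_rgb, alpha=0.6):
    """Blend two float images in [0, 1]; both must use the same (RGB) channel order."""
    return np.clip((1 - alpha) * img_rgb + alpha * heat_rgb, 0.0, 1.0)


# Toy layer with 2 channels on a 2x2 grid
A = np.array([[[1.0, 0.0], [0.0, 0.0]],
              [[0.0, 0.0], [0.0, 1.0]]])
G = np.array([[[1.0, 1.0], [1.0, 1.0]],       # channel 0 raises the class score (mean +1)
              [[-1.0, -1.0], [-1.0, -1.0]]])  # channel 1 lowers it (mean -1)
cam = grad_cam_map(A, G)
print(cam.round(3))  # only the top-left cell, where channel 0 fires, lights up
```

Only the location driven by the positively weighted channel survives the ReLU. That is why Grad-CAM highlights regions that support the predicted class rather than regions that argue against it.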

@浙大疏锦行