Resumable Upload

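- Vue frontend code
- Backend code
  - Controller layer
  - Service layer
  - Persistence layer
    - Main table: one record per upload batch
    - File table: all file records of one upload batch
    - File chunk table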

For background on the concept, see other bloggers' introductions.

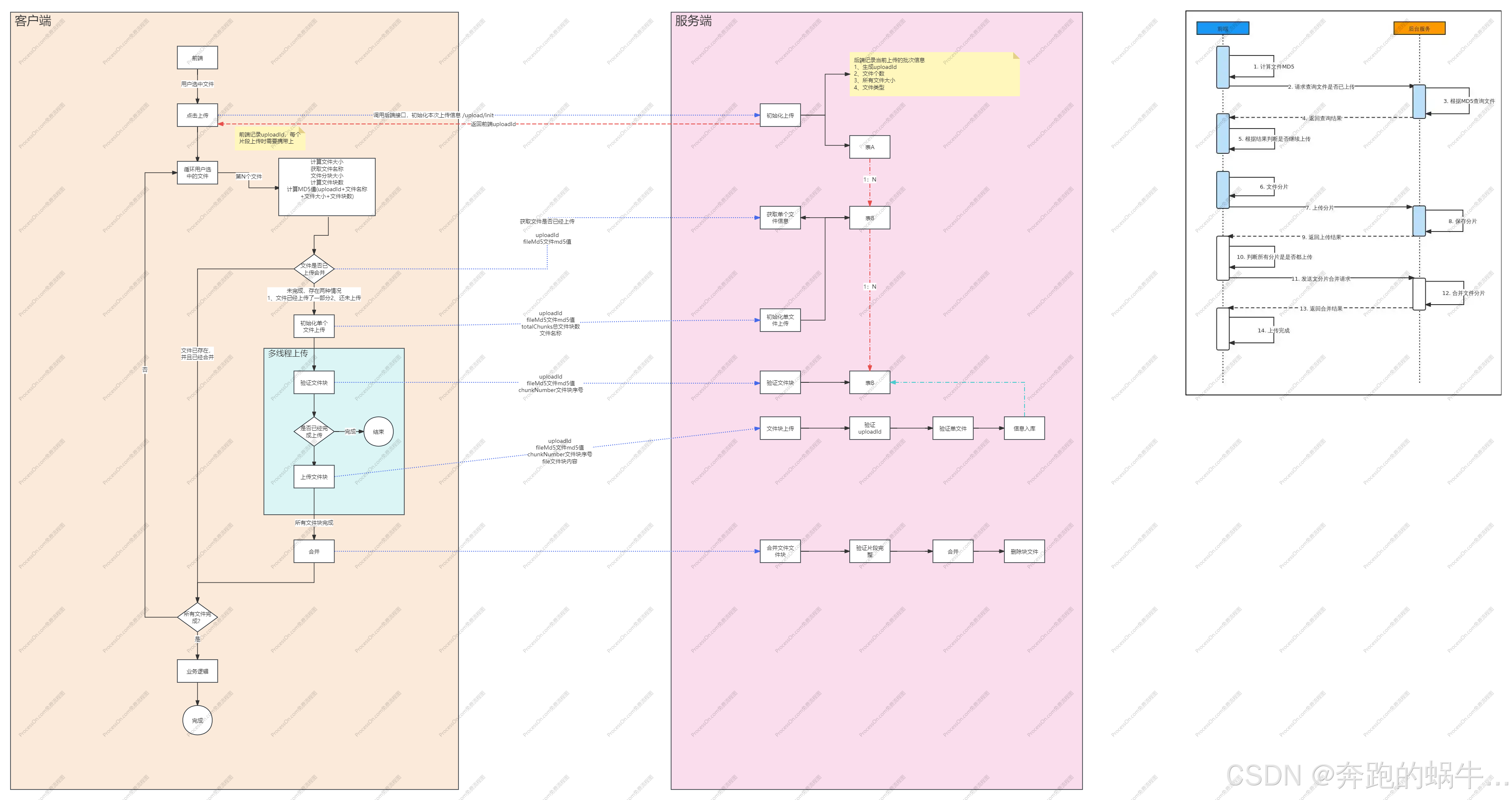

Flowchart

Vue frontend code

This is only a demo. The main flow has been tested and works. Frontend developers can use it as a reference and adapt it.

```javascript
// Note: axios and spark-md5 must be imported
import SparkMD5 from 'spark-md5';
import axios from 'axios';

// The functions below are methods of the Vue component (inside `methods: { ... }`)

async upload() {
  // 1. Validate the data
  if (!this.verifyData.pass || this.fileList.length === 0) {
    this.$message.warning('No data to upload');
    return;
  }
  try {
    // 2. Prepare parameters (10 MB per chunk)
    const chunkSize = 10 * 1024 * 1024;
    const params = JSON.parse(JSON.stringify(this.form.content));
    params.fileNames = this.fileList.map(file => file.name);
    const uploadInitParam = {
      totalFileSize: this.fileList.reduce((sum, file) => sum + file.size, 0),
      fileCount: this.fileList.length,
      fileType: params.fileType
    };
    // 3. Initialize the upload batch
    const initRes = await this.Ajax(API.bigFile.upload_init, uploadInitParam, 'post', false, 3000);
    if (initRes.status !== 0) {
      this.$message.warning('Initialization failed');
      return;
    }
    this.$message.success('Initialization succeeded');
    const uploadInit = initRes.data;
    // 4. Upload the files one by one (the same list used for totalFileSize and fileCount)
    for (const file of this.fileList) {
      await this.uploadSingleFile(file, uploadInit, chunkSize);
    }
    // 5. Prepare the final parameters
    this.prepareFinalParams(params, uploadInit.id);
    // 6. Submit the final form
    const uploadFormData = new FormData();
    if (this.excelList.length > 0) {
      uploadFormData.append('excelFile', this.excelList[0]);
    }
    uploadFormData.append('content', JSON.stringify(params));
    const finalRes = await this.Ajax(API.sample.save3, uploadFormData, 'post', true, 30000);
    console.log('Resumable upload finished--------->res', finalRes);
    this.$message.success('File upload flow completed');
  } catch (error) {
    console.error('Error during upload:', error);
    this.$message.error(`Upload failed: ${error.message}`);
  }
},

async uploadSingleFile(file, uploadInit, chunkSize) {
  // 1. Compute the file identifier. This is not a content hash: it is the MD5 of
  //    uploadId + fileName + fileSize + chunkCount, which the backend recomputes
  const chunkCount = Math.ceil(file.size / chunkSize);
  const spark = new SparkMD5();
  spark.append(uploadInit.id);
  spark.append(file.name);
  spark.append(file.size.toString());
  spark.append(chunkCount.toString());
  const fileMd5 = spark.end();
  // 2. Check whether the file has already been uploaded and merged
  const checkRes = await this.Ajax(API.bigFile.upload_file_check,
    { uploadId: uploadInit.id, fileMd5 }, 'get', true, 30000);
  if (checkRes.status !== 0) {
    throw new Error(`Failed to check file: ${file.name}`);
  }
  if (checkRes.data) {
    this.$message.success(`File already exists: ${file.name}`);
    return;
  }
  // 3. Initialize the file upload
  const fileInitRes = await this.Ajax(API.bigFile.upload_file_init, {
    uploadId: uploadInit.id,
    fileMd5,
    fileName: file.name,
    fileSize: file.size,
    totalChunk: chunkCount,
    chunkSize
  }, 'post', true, 30000);
  if (fileInitRes.status !== 0) {
    throw new Error(`Failed to initialize file upload: ${file.name}`);
  }
  // 4. Upload the chunks one by one
  for (let chunkIndex = 0; chunkIndex < chunkCount; chunkIndex++) {
    await this.uploadFileChunk(file, uploadInit, fileMd5, chunkIndex, chunkSize);
  }
  // 5. Merge the file
  const mergeRes = await this.Ajax(API.bigFile.upload_file_merge,
    { uploadId: uploadInit.id, fileMd5 }, 'post', true, 30000);
  if (mergeRes.status !== 0) {
    throw new Error(`Failed to merge file: ${file.name}`);
  }
  this.$message.success(`File upload completed: ${file.name}`);
},

async uploadFileChunk(file, uploadInit, fileMd5, chunkIndex, chunkSize) {
  // 1. Slice the chunk; chunkIndex is 0-based, the chunkNumber sent to the server is 1-based
  const start = chunkIndex * chunkSize;
  const end = Math.min(file.size, start + chunkSize);
  const fileChunk = file.slice(start, end);
  const chunkNumber = chunkIndex + 1;
  // 2. Compute the MD5 of the chunk content
  const chunkFileMd5 = await this.calculateChunkMd5(fileChunk);
  // 3. Check whether the chunk has already been uploaded
  const checkRes = await this.Ajax(API.bigFile.upload_file_chunk_check,
    { uploadId: uploadInit.id, fileMd5, chunkFileMd5, chunkNumber }, 'get', false, 30000);
  if (checkRes.status !== 0) {
    throw new Error(`Failed to check chunk: ${chunkNumber}`);
  }
  if (checkRes.data) {
    this.$message.success(`Chunk already exists: ${file.name} chunk ${chunkNumber}`);
    return;
  }
  // 4. Upload the chunk as multipart/form-data
  const formData = new FormData();
  formData.append('file', fileChunk);
  formData.append('uploadId', uploadInit.id);
  formData.append('fileMd5', fileMd5);
  formData.append('chunkFileMd5', chunkFileMd5);
  formData.append('chunkNumber', chunkNumber);
  const uploadRes = await this.Ajax(API.bigFile.upload_file_chunk, formData, 'post', false, 30000);
  if (uploadRes.status !== 0) {
    throw new Error(`Failed to upload chunk: ${chunkNumber}`);
  }
  // this.$message.success(`Chunk uploaded: ${file.name} chunk ${chunkNumber}`);
},

calculateChunkMd5(fileChunk) {
  return new Promise((resolve, reject) => {
    const spark = new SparkMD5.ArrayBuffer();
    const fileReader = new FileReader();
    fileReader.onload = (e) => {
      spark.append(e.target.result);
      resolve(spark.end());
    };
    // Reject so a read error surfaces as an upload failure instead of an empty MD5
    fileReader.onerror = () => {
      reject(new Error('Failed to read file chunk'));
    };
    fileReader.readAsArrayBuffer(fileChunk);
  });
},
```
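In `uploadSingleFile`, `fileMd5` is not a hash of the file content. It is the MD5 of `uploadId`, file name, file size and chunk count, appended in that order. On the backend, `uploadFileInit` rebuilds the same string, hashes it with `Md5Util.calculateMd5` and rejects the request if the two values differ. The post does not show `Md5Util`. The Java sketch below therefore assumes that it hashes the UTF-8 bytes of the string and returns 32-character lowercase hex, which is what SparkMD5's `append`/`end` produce for strings. `FileIdSketch`, `md5Hex`, `fileId` and `chunkCount` are hypothetical names.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

final class FileIdSketch {

    // MD5 of the UTF-8 bytes of s, as 32 lowercase hex characters
    static String md5Hex(String s) {
        try {
            byte[] digest = MessageDigest.getInstance("MD5").digest(s.getBytes(StandardCharsets.UTF_8));
            StringBuilder hex = new StringBuilder(32);
            for (byte b : digest) {
                hex.append(String.format("%02x", b & 0xff));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 is not available", e);
        }
    }

    // Hypothetical equivalent of the frontend's SparkMD5 sequence:
    // uploadId, then fileName, then fileSize, then chunkCount
    static String fileId(String uploadId, String fileName, long fileSize, int chunkCount) {
        return md5Hex(uploadId + fileName + fileSize + chunkCount);
    }

    // Ceiling division, same result as Math.ceil(file.size / chunkSize) in the frontend
    static int chunkCount(long fileSize, long chunkSize) {
        return (int) ((fileSize + chunkSize - 1) / chunkSize);
    }

    public static void main(String[] args) {
        long chunkSize = 10L * 1024 * 1024;
        long fileSize = 25L * 1024 * 1024;
        int count = chunkCount(fileSize, chunkSize);
        System.out.println(count + " chunks, fileMd5=" + fileId("some-upload-id", "a.fastq", fileSize, count));
    }
}
```

Because the identifier depends only on metadata, it can be computed instantly even for very large files. The trade-off is that it does not detect content changes. Content integrity is instead checked per chunk through `chunkFileMd5`.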

Backend code

Controller layer

```java
@Api(tags = "Resumable upload")
@RestController
@RequestMapping("/uploads")
public class ResumableUploadController {

    @Autowired
    private ResumableUploadService resumableUploadService;

    @Autowired
    private Validator validator;

    @Autowired
    private OtherConfig otherConfig;

    @PostMapping("/upload/init")
    @ApiOperation(value = "Initialize this upload batch", notes = "Initialize this upload batch")
    @UserRightAnnotation
    public R<Object> uploadInit(@Valid @RequestBody UploadInitDto uploadInitDto) {
        return R.isOk(resumableUploadService.uploadInit(uploadInitDto));
    }

    @GetMapping("/upload/file/check")
    @ApiOperation(value = "Single file - check whether it has been merged",
            notes = "Single file - check whether it has been merged")
    @UserRightAnnotation
    public R<Object> uploadFileCheck(@RequestParam String uploadId, @RequestParam String fileMd5) {
        return R.isOk(resumableUploadService.uploadFileCheck(uploadId, fileMd5));
    }

    @PostMapping("/upload/file/init")
    @ApiOperation(value = "Single file - initialize upload info", notes = "Single file - initialize upload info")
    @UserRightAnnotation
    public R<Object> uploadFileInit(@Valid @RequestBody UploadFileInitDto uploadFileInitDto) {
        return R.isOk(resumableUploadService.uploadFileInit(uploadFileInitDto));
    }

    @GetMapping("/upload/file/chunk/check")
    @ApiOperation(value = "Single file - chunk - check whether it has been uploaded",
            notes = "Single file - chunk - check whether it has been uploaded")
    @UserRightAnnotation
    public R<Object> uploadFileChunkCheck(@RequestParam String uploadId,
                                          @RequestParam String fileMd5,
                                          @RequestParam String chunkFileMd5,
                                          @RequestParam Integer chunkNumber) {
        return R.isOk(resumableUploadService.uploadFileChunkCheck(uploadId, fileMd5, chunkFileMd5, chunkNumber));
    }

    @PostMapping("/upload/file/chunk")
    @ApiOperation(value = "Single file - chunk - upload", notes = "Single file - chunk - upload")
    @UserRightAnnotation
    public R<Object> uploadFileChunk(UploadFileChunkDto uploadFileChunkDto) {
        // Bound from multipart/form-data (no @RequestBody), so validate manually
        Set<ConstraintViolation<UploadFileChunkDto>> violations = validator.validate(uploadFileChunkDto);
        if (!violations.isEmpty()) {
            throw new ServiceException(HttpStatus.BAD_REQUEST.value(), "Invalid parameters!");
        }
        MultipartFile file = uploadFileChunkDto.getFile();
        if (Objects.isNull(file) || file.getSize() == 0) {
            throw new ServiceException(HttpStatus.BAD_REQUEST.value(), "Please upload a non-empty file!");
        }
        return R.isOk(resumableUploadService.uploadFileChunk(uploadFileChunkDto));
    }

    @PostMapping("/upload/file/merge")
    @ApiOperation(value = "Single file - merge", notes = "Single file - merge")
    @UserRightAnnotation
    public R<Object> uploadFileMerge(@Valid @RequestBody UploadFileMergeDto uploadFileMergeDto) {
        return R.isOk(resumableUploadService.uploadFileMerge(uploadFileMergeDto));
    }
}
```
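The two `check` endpoints are what make the upload resumable. After an interruption, the frontend runs the same flow again and skips any file or chunk the server already reports as present. The arithmetic behind this is sketched below in Java. It assumes the same 1-based `chunkNumber` and half-open `[start, end)` byte range as the frontend's `file.slice(start, end)`. `ChunkPlanSketch`, `range` and `missing` are hypothetical names. `missing` illustrates computing the whole remaining set in one pass, whereas the post's frontend asks the server about one chunk at a time.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

final class ChunkPlanSketch {

    // Byte range [start, end) of the 1-based chunk, mirroring
    // start = chunkIndex * chunkSize and end = min(fileSize, start + chunkSize)
    static long[] range(long fileSize, long chunkSize, int chunkNumber) {
        long start = (long) (chunkNumber - 1) * chunkSize;
        if (chunkNumber < 1 || start >= fileSize) {
            throw new IllegalArgumentException("chunk number out of range: " + chunkNumber);
        }
        return new long[] {start, Math.min(fileSize, start + chunkSize)};
    }

    // Chunk numbers in 1..totalChunk that the server does not have yet;
    // only these need to be re-sent after an interruption
    static List<Integer> missing(int totalChunk, Set<Integer> uploaded) {
        List<Integer> result = new ArrayList<>();
        for (int n = 1; n <= totalChunk; n++) {
            if (!uploaded.contains(n)) {
                result.add(n);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // A 25-byte file in 10-byte chunks: chunk 3 covers bytes [20, 25)
        long[] last = range(25, 10, 3);
        System.out.println(last[0] + ".." + last[1]);
        System.out.println(missing(5, Set.of(1, 3)));
    }
}
```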

Service layer

```java
@Service
@Slf4j
public class ResumableUploadService {

    @Autowired
    private UploadRepository uploadRepository;
    @Autowired
    private UploadFileRepository uploadFileRepository;
    @Autowired
    private UploadFileChunkRepository uploadFileChunkRepository;
    @Autowired
    private OtherConfig otherConfig;
    @Autowired
    private TaskConfig taskConfig;
    @Autowired
    private FileService fileService;

    public Upload uploadInit(UploadInitDto uploadInitDto) {
        log.info("Initialize upload--------uploadInit---------->dto={}", JacksonUtil.toJson(uploadInitDto));
        User user = UserUtil.getCurrentUser();
        Upload uploadInit = new Upload();
        BeanUtils.copyProperties(uploadInitDto, uploadInit);
        uploadInit.setId(IdUtils.getUuid());
        uploadInit.setCreatedBy(user.getId());
        uploadInit.setCreatedDate(DateUtils.getCurrentDate());
        uploadInit.setUpdateDate(DateUtils.getCurrentDate());
        // Storage directory of this batch: <uploadfilepath><RESUMABLE_UPLOAD_FOLDERNAME>/<uploadId>
        uploadInit.setPathname(taskConfig.getUploadfilepath() + Constants.RESUMABLE_UPLOAD_FOLDERNAME
                + File.separator + uploadInit.getId());
        return uploadRepository.save(uploadInit);
    }

    @Transactional(rollbackFor = Exception.class)
    @SneakyThrows
    public void uploadDelete(String uploadId) {
        log.info("Delete the whole upload batch--------uploadDelete---------->uploadId={}", uploadId);
        Optional<Upload> uploadOptional = uploadRepository.findById(uploadId);
        if (!uploadOptional.isPresent()) {
            throw new ServiceException(HttpStatus.BAD_REQUEST.value(), "Invalid uploadId");
        }
        Upload upload = uploadOptional.get();
        if (!StringUtils.equals(upload.getCreatedBy(), UserUtil.getCurrentUserId())) {
            throw new ServiceException(HttpStatus.BAD_REQUEST.value(), "Warning: illegal deletion!");
        }
        uploadFileRepository.deleteByUploadId(uploadId);
        uploadRepository.deleteById(uploadId);
        File dir = new File(upload.getPathname());
        if (dir.exists()) {
            FileUtils.deleteDirectory(dir);
        }
        log.info("deleteUpload------------------->deletion completed, uploadId={}", uploadId);
    }

    public boolean uploadFileCheck(String uploadId, String fileMd5) {
        log.info("Check whether a single file upload is complete--------uploadFileCheck---------->uploadId={},fileMd5={}",
                uploadId, fileMd5);
        getOwnedUpload(uploadId);
        // Complete means the record belongs to this batch and has been merged
        return uploadFileRepository.findById(fileMd5)
                .map(uploadFile -> StringUtils.equals(uploadId, uploadFile.getUploadId())
                        && Constants.NORMAL.equals(uploadFile.getMergeStatus()))
                .orElse(false);
    }

    public UploadFile uploadFileInit(UploadFileInitDto uploadFileInitDto) {
        log.info("Initialize a single file upload--------uploadFileInit---------->dto={}",
                JacksonUtil.toJson(uploadFileInitDto));
        Upload upload = getOwnedUpload(uploadFileInitDto.getUploadId());
        otherConfig.checkFileName(uploadFileInitDto.getFileName(), upload.getFileType());
        // Recompute the file identifier and check it matches the one sent by the frontend
        String fileMd5 = Md5Util.calculateMd5(uploadFileInitDto.getUploadId()
                + uploadFileInitDto.getFileName()
                + uploadFileInitDto.getFileSize()
                + uploadFileInitDto.getTotalChunk());
        if (!StringUtils.equals(fileMd5, uploadFileInitDto.getFileMd5())) {
            throw new ServiceException(HttpStatus.BAD_REQUEST.value(), "File MD5 mismatch!");
        }
        Optional<UploadFile> uploadFileOptional = uploadFileRepository.findById(fileMd5);
        if (uploadFileOptional.isPresent()) {
            UploadFile existing = uploadFileOptional.get();
            if (!StringUtils.equals(existing.getUploadId(), uploadFileInitDto.getUploadId())) {
                throw new ServiceException(HttpStatus.BAD_REQUEST.value(), "Illegal request!");
            }
            // Already initialized (resume case): return the existing record
            return existing;
        }
        // File names must be unique within one upload batch
        UploadFile uploadFile = uploadFileRepository.findByUploadIdAndFileName(upload.getId(), uploadFileInitDto.getFileName());
        if (!Objects.isNull(uploadFile)) {
            throw new ServiceException(HttpStatus.BAD_REQUEST.value(), "Duplicate file name!");
        }
        uploadFile = new UploadFile();
        BeanUtils.copyProperties(uploadFileInitDto, uploadFile);
        uploadFile.setId(fileMd5);
        uploadFile.setMergeStatus(Constants.INVALID);
        uploadFile.setCreatedDate(DateUtils.getCurrentDate());
        return uploadFileRepository.save(uploadFile);
    }

    public boolean uploadFileChunkCheck(String uploadId, String fileMd5, String chunkFileMd5, Integer chunkNumber) {
        getOwnedUpload(uploadId);
        UploadFile uploadFile = getUploadFile(uploadId, fileMd5);
        if (chunkNumber < 1 || chunkNumber > uploadFile.getTotalChunk()) {
            throw new ServiceException(HttpStatus.BAD_REQUEST.value(), "Invalid chunk number!");
        }
        UploadFileChunk uploadFileChunk = uploadFileChunkRepository
                .findByUploadIdAndUploadFileIdAndChunkFileMd5AndChunkNumber(uploadId, fileMd5, chunkFileMd5, chunkNumber);
        return !Objects.isNull(uploadFileChunk);
    }

    @SneakyThrows
    public UploadFileChunk uploadFileChunk(UploadFileChunkDto uploadFileChunkDto) {
        Upload upload = getOwnedUpload(uploadFileChunkDto.getUploadId());
        UploadFile uploadFile = getUploadFile(uploadFileChunkDto.getUploadId(), uploadFileChunkDto.getFileMd5());
        Integer chunkNumber = uploadFileChunkDto.getChunkNumber();
        if (chunkNumber < 1 || chunkNumber > uploadFile.getTotalChunk()) {
            throw new ServiceException(HttpStatus.BAD_REQUEST.value(), "Invalid chunk number!");
        }
        UploadFileChunk uploadFileChunk = uploadFileChunkRepository.findByUploadIdAndUploadFileIdAndChunkFileMd5AndChunkNumber(
                uploadFileChunkDto.getUploadId(), uploadFileChunkDto.getFileMd5(),
                uploadFileChunkDto.getChunkFileMd5(), chunkNumber);
        if (!Objects.isNull(uploadFileChunk)) {
            // The same chunk (same number and MD5) is already stored: treat the request as done
            return uploadFileChunk;
        }
        // Verify the chunk content against the MD5 computed by the frontend
        MultipartFile file = uploadFileChunkDto.getFile();
        String md5 = Md5Util.calculateMd5(file);
        if (!StringUtils.equals(md5, uploadFileChunkDto.getChunkFileMd5())) {
            throw new ServiceException(HttpStatus.BAD_REQUEST.value(), "Chunk MD5 mismatch!");
        }
        FileInfo fileInfo = fileService.uploadFileChunk(file, upload.getPathname(), uploadFileChunkDto.getFileMd5(),
                uploadFileChunkDto.getChunkFileMd5(), chunkNumber);
        if (Objects.isNull(fileInfo)) {
            throw new ServiceException(HttpStatus.BAD_REQUEST.value(), "Upload failed, please contact the administrator!");
        }
        // Upload succeeded: save the chunk record (id, fileName, fileSize, filePath come from fileInfo)
        uploadFileChunk = new UploadFileChunk();
        BeanUtils.copyProperties(uploadFileChunkDto, uploadFileChunk);
        BeanUtils.copyProperties(fileInfo, uploadFileChunk);
        uploadFileChunk.setUploadFileId(uploadFileChunkDto.getFileMd5());
        uploadFileChunk.setCreatedDate(DateUtils.getCurrentDate());
        return uploadFileChunkRepository.save(uploadFileChunk);
    }

    @SneakyThrows
    public UploadFile uploadFileMerge(UploadFileMergeDto uploadFileMergeDto) {
        log.info("Merge chunks------------------>dto={}", JacksonUtil.toJson(uploadFileMergeDto));
        Upload upload = getOwnedUpload(uploadFileMergeDto.getUploadId());
        UploadFile uploadFile = getUploadFile(uploadFileMergeDto.getUploadId(), uploadFileMergeDto.getFileMd5());
        if (Constants.NORMAL.equals(uploadFile.getMergeStatus())) {
            // Already merged
            return uploadFile;
        }
        List<UploadFileChunk> uploadFileChunkList = uploadFileChunkRepository
                .findListByUploadIdAndUploadFileId(uploadFileMergeDto.getUploadId(), uploadFileMergeDto.getFileMd5());
        if (uploadFileChunkList.size() != uploadFile.getTotalChunk()) {
            throw new ServiceException(HttpStatus.BAD_REQUEST.value(), "Chunk count does not match the total chunk count!");
        }
        // Index the chunks by chunk number and collect their IDs
        List<String> chunkFileIds = new ArrayList<>();
        Map<Integer, UploadFileChunk> chunkMap = Maps.newHashMap();
        for (UploadFileChunk chunk : uploadFileChunkList) {
            chunkMap.put(chunk.getChunkNumber(), chunk);
            chunkFileIds.add(chunk.getId());
        }
        // Build the file list in order 1..totalChunk, checking that every chunk exists on disk
        List<File> chunkFileList = new ArrayList<>(uploadFile.getTotalChunk());
        for (int i = 1; i <= uploadFile.getTotalChunk(); i++) {
            UploadFileChunk chunk = chunkMap.get(i);
            if (chunk == null) {
                throw new ServiceException(HttpStatus.BAD_REQUEST.value(), "Invalid chunk number!");
            }
            File chunkFile = new File(chunk.getFilePath());
            if (!chunkFile.exists()) {
                throw new ServiceException(HttpStatus.BAD_REQUEST.value(), "Chunk file does not exist!");
            }
            chunkFileList.add(chunkFile);
        }
        // Merge the chunks
        FileInfo fileInfo = fileService.mergeChunkFile(chunkFileList, uploadFile, upload.getPathname());
        uploadFile.setFileName(fileInfo.getFileName());
        uploadFile.setFilePath(fileInfo.getFilePath());
        uploadFile.setMergeStatus(Constants.NORMAL);
        uploadFileRepository.save(uploadFile);
        uploadFileChunkRepository.deleteAllById(chunkFileIds);
        // Delete the chunk directory <pathname>/<fileMd5>
        String chunkPathname = upload.getPathname().concat(File.separator).concat(uploadFile.getId());
        FileUtils.deleteDirectory(new File(chunkPathname));
        return uploadFile;
    }

    // Loads the upload batch and checks that it belongs to the current user
    private Upload getOwnedUpload(String uploadId) {
        Upload upload = uploadRepository.findById(uploadId)
                .orElseThrow(() -> new ServiceException(HttpStatus.BAD_REQUEST.value(), "Invalid uploadId"));
        if (!StringUtils.equals(upload.getCreatedBy(), UserUtil.getCurrentUserId())) {
            throw new ServiceException(HttpStatus.BAD_REQUEST.value(), "Illegal request!");
        }
        return upload;
    }

    // Loads the file record and checks that it belongs to the given upload batch
    private UploadFile getUploadFile(String uploadId, String fileMd5) {
        UploadFile uploadFile = uploadFileRepository.findById(fileMd5)
                .orElseThrow(() -> new ServiceException(HttpStatus.BAD_REQUEST.value(), "File record does not exist!"));
        if (!StringUtils.equals(uploadId, uploadFile.getUploadId())) {
            throw new ServiceException(HttpStatus.BAD_REQUEST.value(), "File record does not exist!");
        }
        return uploadFile;
    }
}
```

Supplement: the chunk upload and merge methods in `FileService`

```java
@SneakyThrows
public FileInfo uploadFileChunk(MultipartFile file, String pathname, String uploadFileId,
                                String chunkFileMd5, Integer chunkNumber) {
    try (InputStream inputStream = file.getInputStream()) {
        FileInfo fileInfo = new FileInfo();
        fileInfo.setId(IdUtils.getUuid());
        // Chunk file name: <chunkNumber>_<chunkMd5>
        fileInfo.setFileName(chunkNumber.toString()
                .concat(SplitSymbolEnum.SPLIT_UNDERLINE.getSymbol())
                .concat(chunkFileMd5));
        fileInfo.setFileSize(file.getSize());
        // Chunk path: <pathname>/<uploadFileId>/<chunkNumber>_<chunkMd5>
        fileInfo.setFilePath(pathname + File.separator + uploadFileId + File.separator + fileInfo.getFileName());
        FileUtils.copyToFile(inputStream, new File(fileInfo.getFilePath()));
        return fileInfo;
    } catch (Exception e) {
        log.error("FileService.uploadFileChunk--------unexpected exception--------->pathname={},uploadFileId={},chunkFileMd5={},chunkNumber={}",
                pathname, uploadFileId, chunkFileMd5, chunkNumber, e);
    }
    return null;
}

/**
 * Merges the chunk files into <pathname>/<fileName>.
 *
 * @param chunkFileList chunk files, already sorted by chunk number
 * @param uploadFile    file record (provides the target file name)
 * @param pathname      storage directory of the upload batch
 */
public FileInfo mergeChunkFile(List<File> chunkFileList, UploadFile uploadFile, String pathname) {
    String finalFilePath = pathname.concat(File.separator).concat(uploadFile.getFileName());
    try (RandomAccessFile destFile = new RandomAccessFile(finalFilePath, "rw")) {
        // "rw" does not truncate, so clear any leftovers of an earlier failed merge
        destFile.setLength(0);
        byte[] buffer = new byte[8192];
        // Append the chunks one after another; a read error propagates to the outer catch
        for (File chunk : chunkFileList) {
            try (FileInputStream fis = new FileInputStream(chunk)) {
                int len;
                while ((len = fis.read(buffer)) != -1) {
                    destFile.write(buffer, 0, len);
                }
            }
        }
    } catch (Exception e) {
        log.error("FileService.mergeChunkFile--------unexpected exception--------->chunkFileList={},fileName={},pathname={}",
                chunkFileList, uploadFile.getFileName(), pathname, e);
        throw new ServiceException(HttpStatus.INTERNAL_SERVER_ERROR.value(), "Failed to merge file!");
    }
    File file = new File(finalFilePath);
    if (!file.exists()) {
        log.error("FileService.mergeChunkFile--------merged file missing--------->chunkFileList={},fileName={},pathname={}",
                chunkFileList, uploadFile.getFileName(), pathname);
        throw new ServiceException(HttpStatus.INTERNAL_SERVER_ERROR.value(), "Failed to merge file!");
    }
    FileInfo fileInfo = new FileInfo();
    fileInfo.setId(IdUtils.getUuid());
    fileInfo.setFileName(uploadFile.getFileName());
    fileInfo.setFileSize(file.length());
    fileInfo.setFilePath(finalFilePath);
    return fileInfo;
}
```
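`mergeChunkFile` concatenates the chunks with `RandomAccessFile`. For comparison, here is a minimal sketch of the same step using `java.nio.file`. It assumes the caller has already sorted and validated the chunk list, as `uploadFileMerge` does. Opening the target with `TRUNCATE_EXISTING` discards leftovers from an earlier failed merge. Letting `IOException` propagate means that a chunk which cannot be read aborts the merge instead of silently producing a shorter file. `MergeSketch` is a hypothetical name.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.List;

final class MergeSketch {

    // Writes the chunks (sorted by chunk number) into target, one after another,
    // and returns the size of the merged file
    static long merge(List<Path> sortedChunks, Path target) throws IOException {
        try (OutputStream out = Files.newOutputStream(target,
                StandardOpenOption.CREATE,
                StandardOpenOption.TRUNCATE_EXISTING,
                StandardOpenOption.WRITE)) {
            for (Path chunk : sortedChunks) {
                // Streams the whole chunk into the target; any IOException propagates
                Files.copy(chunk, out);
            }
        }
        return Files.size(target);
    }
}
```

In the service, this would be called with the chunk paths in order `1..totalChunk` and `<pathname>/<fileName>` as the target. After a successful merge, the chunk directory can be deleted.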

Persistence layer

Main table: one record per upload batch

```java
@Data
@Entity
@Table(name = "upload")
public class Upload implements Serializable {

    @Id
    @ApiModelProperty(value = "Primary key")
    @Column(length = 32)
    private String id;

    @ApiModelProperty(value = "Total file size")
    private Long totalFileSize;

    @ApiModelProperty(value = "Number of files")
    private Integer fileCount;

    @ApiModelProperty(value = "File type")
    @Column(length = 32)
    private String fileType;

    @ApiModelProperty(value = "Storage directory of this upload batch")
    @Column(length = 300)
    private String pathname;

    @ApiModelProperty(value = "Created by", example = "Created by")
    @Column(length = 32)
    private String createdBy;

    @ApiModelProperty(value = "Created at", example = "Created at")
    @JsonFormat(shape = JsonFormat.Shape.STRING, pattern = "yyyy-MM-dd HH:mm:ss", timezone = "GMT+8")
    @Temporal(TemporalType.TIMESTAMP)
    private Date createdDate;

    @ApiModelProperty(value = "Updated at", example = "Updated at")
    @JsonFormat(shape = JsonFormat.Shape.STRING, pattern = "yyyy-MM-dd HH:mm:ss", timezone = "GMT+8")
    @Temporal(TemporalType.TIMESTAMP)
    private Date updateDate;
}
```

File table: all file records of one upload batch

```java
@Data
@Entity
@Table(name = "upload_file")
public class UploadFile implements Serializable {

    // Set to the file identifier fileMd5 by the service
    @Id
    @ApiModelProperty(value = "Primary key")
    @Column(length = 32)
    private String id;

    @ApiModelProperty(value = "Primary key of the upload batch")
    @Column(length = 32)
    private String uploadId;

    @ApiModelProperty(value = "File name")
    @Column(length = 300)
    private String fileName;

    @ApiModelProperty(value = "File size")
    private Long fileSize;

    @ApiModelProperty(value = "Total number of chunks")
    private Integer totalChunk;

    @ApiModelProperty(value = "Chunk size")
    private Long chunkSize;

    @ApiModelProperty(value = "File path")
    @Column(length = 300)
    private String filePath;

    @ApiModelProperty(value = "Merge status")
    private Integer mergeStatus;

    @ApiModelProperty(value = "Created at", example = "Created at")
    @JsonFormat(shape = JsonFormat.Shape.STRING, pattern = "yyyy-MM-dd HH:mm:ss", timezone = "GMT+8")
    @Temporal(TemporalType.TIMESTAMP)
    private Date createdDate;
}
```

File chunk table

```java
@Data
@Entity
@Table(name = "upload_file_chunk")
public class UploadFileChunk implements Serializable {

    @Id
    @ApiModelProperty(value = "Primary key")
    @Column(length = 32)
    private String id;

    @ApiModelProperty(value = "Primary key of the upload batch")
    @Column(length = 32)
    private String uploadId;

    @ApiModelProperty(value = "Primary key of the file")
    @Column(length = 32)
    private String uploadFileId;

    @ApiModelProperty(value = "MD5 of the chunk")
    @Column(length = 32)
    private String chunkFileMd5;

    @ApiModelProperty(value = "Chunk number")
    private Integer chunkNumber;

    @ApiModelProperty(value = "File size")
    private Long fileSize;

    @ApiModelProperty(value = "File name")
    @Column(length = 300)
    private String fileName;

    @ApiModelProperty(value = "File path")
    @Column(length = 300)
    private String filePath;

    @ApiModelProperty(value = "Created at", example = "Created at")
    @JsonFormat(shape = JsonFormat.Shape.STRING, pattern = "yyyy-MM-dd HH:mm:ss", timezone = "GMT+8")
    @Temporal(TemporalType.TIMESTAMP)
    private Date createdDate;
}
```
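The `pathname` and `filePath` columns store paths that the service builds by string concatenation:

- `<uploadfilepath><RESUMABLE_UPLOAD_FOLDERNAME>/<uploadId>` for the batch.
- `<pathname>/<fileMd5>/<chunkNumber>_<chunkMd5>` for a chunk.
- `<pathname>/<fileName>` for the merged file.

The sketch below expresses that layout with `java.nio.file.Path`, assuming `uploadfilepath` is a directory. The class and method names are hypothetical. Because `fileName` comes from the client, `mergedPath` also adds a guard that rejects names which would land outside the batch directory, such as `../x`. The post delegates file name checks to `otherConfig.checkFileName`, whose rules are not shown, so treat this guard as an assumption rather than the author's logic.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

final class UploadPathsSketch {

    // Upload.pathname: <root>/<folder>/<uploadId>
    static Path uploadDir(Path root, String folder, String uploadId) {
        return root.resolve(folder).resolve(uploadId);
    }

    // UploadFileChunk.filePath: <uploadDir>/<fileMd5>/<chunkNumber>_<chunkMd5>
    static Path chunkPath(Path uploadDir, String fileMd5, int chunkNumber, String chunkMd5) {
        return uploadDir.resolve(fileMd5).resolve(chunkNumber + "_" + chunkMd5);
    }

    // UploadFile.filePath after merging: <uploadDir>/<fileName>
    // Hypothetical guard: the result must sit directly inside uploadDir
    static Path mergedPath(Path uploadDir, String fileName) {
        Path dir = uploadDir.normalize();
        Path target = dir.resolve(fileName).normalize();
        if (!dir.equals(target.getParent())) {
            throw new IllegalArgumentException("illegal file name: " + fileName);
        }
        return target;
    }

    public static void main(String[] args) {
        Path dir = uploadDir(Paths.get("data"), "resumable", "u1");
        System.out.println(chunkPath(dir, "f1", 3, "c9"));
        System.out.println(mergedPath(dir, "a.txt"));
    }
}
```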

Closing note: whoever uses the uploaded files is responsible for deleting them.

Enjoy!
