An earlier post introduced GraphRAG, an approach that combines knowledge graphs with retrieval-augmented generation.

https://blog.csdn.net/liliang199/article/details/151189579

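This post runs GraphRAG with Ollama in a CPU-only environment to verify how GraphRAG builds a knowledge graph and answers retrieval-augmented queries.

1 Environment setup

1.1 Installing GraphRAG

On a local CPU-only Linux machine, create a Python environment with conda and install graphrag with pip: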

conda create -n graphrag python=3.10

conda activate graphrag

pip install graphrag==0.5.0 -i https://pypi.tuna.tsinghua.edu.cn/simple

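This installs graphrag 0.5.0; later versions may have compatibility problems with Ollama.

1.2 Installing the Ollama models

This assumes Ollama is already installed. For the installation steps, see

https://blog.csdn.net/liliang199/article/details/149267372

Pull the LLM mistral and the embedding model nomic-embed-text with Ollama: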

ollama pull nomic-embed-text

ollama pull mistral

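Ollama models default to a context length of 2048 tokens, which is too short for GraphRAG, so the context length has to be increased.

First export the existing model configuration to a file named Modelfile: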

ollama show --modelfile mistral:latest > Modelfile

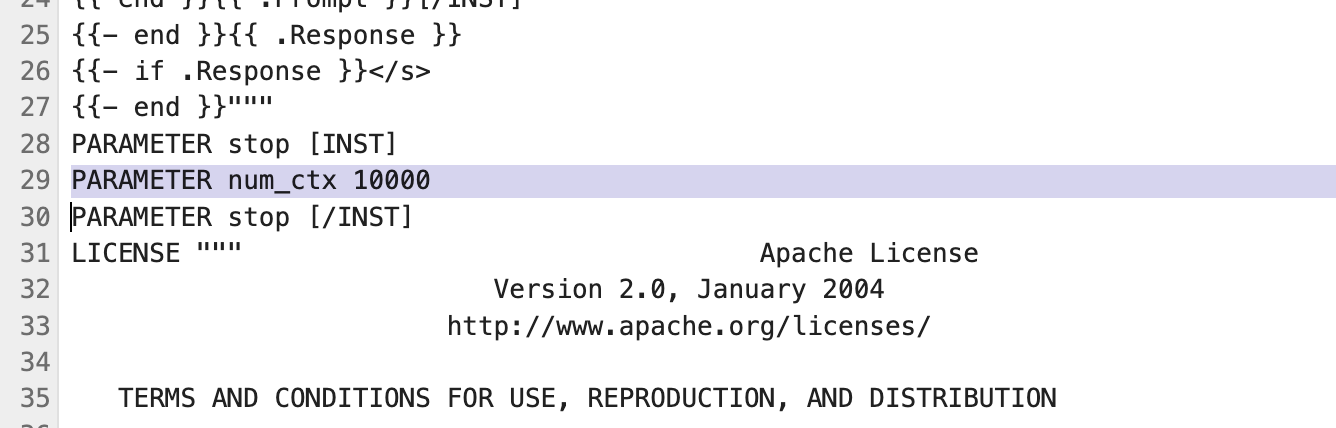

Edit the Modelfile and add the following line alongside the other PARAMETER entries. It sets a 10k-token context; adjust the value to your own setup.

PARAMETER num_ctx 10000

After the edit, the Modelfile contains this num_ctx line among its PARAMETER entries.
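The Modelfile edit can also be scripted instead of done by hand. The following is a minimal sketch, not part of the original workflow: the helper names `set_num_ctx` and `patch_modelfile` are hypothetical, and it assumes the exported Modelfile writes the instruction in upper case as `PARAMETER num_ctx`.

```python
def set_num_ctx(modelfile_text: str, num_ctx: int) -> str:
    """Return Modelfile text that contains exactly one `PARAMETER num_ctx` line."""
    lines = modelfile_text.splitlines()
    # Drop any existing num_ctx setting so the new value is unambiguous.
    kept = [
        line for line in lines
        if line.split()[:2] != ["PARAMETER", "num_ctx"]
    ]
    # Append the new setting at the end of the file.
    kept.append(f"PARAMETER num_ctx {num_ctx}")
    return "\n".join(kept) + "\n"


def patch_modelfile(path: str = "Modelfile", num_ctx: int = 10000) -> None:
    """Rewrite the Modelfile at `path` in place (the default path is an assumption)."""
    with open(path, encoding="utf-8") as f:
        text = f.read()
    with open(path, "w", encoding="utf-8") as f:
        f.write(set_num_ctx(text, num_ctx))
```

Calling `patch_modelfile()` in the directory that holds the exported Modelfile would apply the 10000-token setting used in this post.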

Create a new Ollama model from the modified Modelfile:

ollama create mistral:10k -f Modelfile

List the models to confirm the new one exists:

ollama list
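The same check can be done programmatically through Ollama's REST endpoint `/api/tags`, which returns the locally available models. This is a rough sketch under stated assumptions: the helper names are hypothetical, the server is assumed to listen on the default `http://localhost:11434`, and the embedding model is assumed to be tagged `nomic-embed-text:latest`.

```python
import json
import urllib.request


def model_names(tags_response: dict) -> list[str]:
    """Extract model names from a parsed /api/tags response."""
    return [m["name"] for m in tags_response.get("models", [])]


def fetch_tags(base_url: str = "http://localhost:11434") -> dict:
    """Query the local Ollama server for its model list (needs a running server)."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        return json.load(resp)


def missing_models(
    tags_response: dict,
    required: tuple[str, ...] = ("mistral:10k", "nomic-embed-text:latest"),
) -> list[str]:
    """Return the required model names that the server does not list."""
    available = set(model_names(tags_response))
    return [name for name in required if name not in available]
```

With Ollama running, `missing_models(fetch_tags())` should return an empty list once both models are in place.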

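2 Verifying GraphRAG graph construction

2.1 Preparing the test data

First create the working directory:

mkdir -p ragtest/input

The input is the text file below, just under 1,000 lines long. Download it with the following command.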

https://www.gutenberg.org/cache/epub/7785/pg7785.txt

wget https://www.gutenberg.org/cache/epub/7785/pg7785.txt -O ragtest/input/Transformers_intro.txt

./ragtest now contains the test data. Initialize the workspace with:

graphrag init --root ./ragtest

This generates the configuration file ragtest/settings.yaml.

2.2 Setting environment variables

Set GRAPHRAG_API_KEY and GRAPHRAG_CLAIM_EXTRACTION_ENABLED:

export GRAPHRAG_API_KEY=ollama

export GRAPHRAG_CLAIM_EXTRACTION_ENABLED=True

GRAPHRAG_CLAIM_EXTRACTION_ENABLED=True is required: without it no covariates are generated and Local Search fails.
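Because a missing variable only surfaces later as a failed run, it can help to check the environment before starting a long indexing job. The sketch below is one possible pre-flight check; the `REQUIRED` table and the `env_problems` helper are hypothetical names introduced for illustration, not part of GraphRAG.

```python
import os

# Expected values: None means "any non-empty value is fine".
# Ollama requires an API key to be sent but does not use it.
REQUIRED = {
    "GRAPHRAG_API_KEY": None,
    "GRAPHRAG_CLAIM_EXTRACTION_ENABLED": "True",
}


def env_problems(env=None) -> list[str]:
    """Return a human-readable list of missing or unexpected variables."""
    env = os.environ if env is None else env
    problems = []
    for name, expected in REQUIRED.items():
        value = env.get(name, "")
        if not value:
            problems.append(f"{name} is not set")
        elif expected is not None and value.lower() != expected.lower():
            problems.append(f"{name}={value!r}, expected {expected!r}")
    return problems
```

An empty list from `env_problems()` means both variables from this section are visible to the current process.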

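2.3 Model configuration

The model parameters live in ragtest/settings.yaml. The changes are:

Set the llm model to mistral:10k and the embedding model to nomic-embed-text.

Set api_base: http://localhost:11434/v1 so that requests go to the local Ollama service.

Set a very long request_timeout: 18000, because inference on a local CPU is slow.

Set concurrent_requests: 1, because without a GPU a higher concurrency brings no speedup and only causes timeouts.

The resulting settings.yaml: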

### This config file contains required core defaults that must be set, along with a handful of common optional settings.
### For a full list of available settings, see https://microsoft.github.io/graphrag/config/yaml/

### LLM settings ###
## There are a number of settings to tune the threading and token limits for LLM calls - check the docs.

encoding_model: cl100k_base # this needs to be matched to your model!

llm:
  api_key: ${GRAPHRAG_API_KEY} # set this in the generated .env file
  type: openai_chat # or azure_openai_chat
  model: mistral:10k
  api_base: http://localhost:11434/v1
  request_timeout: 18000
  concurrent_requests: 1
  model_supports_json: true # recommended if this is available for your model.
  # audience: "https://cognitiveservices.azure.com/.default"
  # api_base: https://<instance>.openai.azure.com
  # api_version: 2024-02-15-preview
  # organization: <organization_id>
  # deployment_name: <azure_model_deployment_name>

parallelization:
  stagger: 0.3
  # num_threads: 50

async_mode: threaded # or asyncio

embeddings:
  async_mode: threaded # or asyncio
  vector_store:
    type: lancedb
    db_uri: 'output/lancedb'
    container_name: default
    overwrite: true
  llm:
    api_key: ${GRAPHRAG_API_KEY}
    type: openai_embedding # or azure_openai_embedding
    model: nomic-embed-text
    api_base: http://localhost:11434/v1
    request_timeout: 18000
    concurrent_requests: 1
    # api_base: https://<instance>.openai.azure.com
    # api_version: 2024-02-15-preview
    # audience: "https://cognitiveservices.azure.com/.default"
    # organization: <organization_id>
    # deployment_name: <azure_model_deployment_name>

### Input settings ###

input:
  type: file # or blob
  file_type: text # or csv
  base_dir: "input"
  file_encoding: utf-8
  file_pattern: ".*\\.txt$"

chunks:
  size: 1200
  overlap: 100
  group_by_columns: [id]

### Storage settings ###
## If blob storage is specified in the following four sections,
## connection_string and container_name must be provided

cache:
  type: file # or blob
  base_dir: "cache"

reporting:
  type: file # or console, blob
  base_dir: "logs"

storage:
  type: file # or blob
  base_dir: "output"

## only turn this on if running `graphrag index` with custom settings
## we normally use `graphrag update` with the defaults
update_index_storage:
  # type: file # or blob
  # base_dir: "update_output"

### Workflow settings ###

skip_workflows: []

entity_extraction:
  prompt: "prompts/entity_extraction.txt"
  entity_types: [organization,person,geo,event]
  max_gleanings: 1

summarize_descriptions:
  prompt: "prompts/summarize_descriptions.txt"
  max_length: 500

claim_extraction:
  enabled: false
  prompt: "prompts/claim_extraction.txt"
  description: "Any claims or facts that could be relevant to information discovery."
  max_gleanings: 1

community_reports:
  prompt: "prompts/community_report.txt"
  max_length: 2000
  max_input_length: 8000

cluster_graph:
  max_cluster_size: 10

embed_graph:
  enabled: false # if true, will generate node2vec embeddings for nodes

umap:
  enabled: false # if true, will generate UMAP embeddings for nodes

snapshots:
  graphml: false
  raw_entities: false
  top_level_nodes: false
  embeddings: false
  transient: false

### Query settings ###
## The prompt locations are required here, but each search method has a number of optional knobs that can be tuned.
## See the config docs: https://microsoft.github.io/graphrag/config/yaml/#query

local_search:
  prompt: "prompts/local_search_system_prompt.txt"

global_search:
  map_prompt: "prompts/global_search_map_system_prompt.txt"
  reduce_prompt: "prompts/global_search_reduce_system_prompt.txt"
  knowledge_prompt: "prompts/global_search_knowledge_system_prompt.txt"

drift_search:
  prompt: "prompts/drift_search_system_prompt.txt"
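Before starting a long indexing run, it may be worth confirming that the configured api_base actually answers OpenAI-style chat requests. The following is a small smoke-test sketch using only the standard library; the helper names `build_chat_request` and `ask` are hypothetical, and the model name, key and timeout simply mirror the values from settings.yaml above.

```python
import json
import urllib.request

API_BASE = "http://localhost:11434/v1"  # same api_base as in settings.yaml


def build_chat_request(
    model: str, prompt: str, api_base: str = API_BASE
) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for the local Ollama server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{api_base}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Same placeholder key as GRAPHRAG_API_KEY.
            "Authorization": "Bearer ollama",
        },
        method="POST",
    )


def ask(
    model: str = "mistral:10k",
    prompt: str = "Reply with the single word: ready",
    timeout: float = 18000,
) -> str:
    """Send one prompt and return the reply text (needs a running Ollama server)."""
    req = build_chat_request(model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

If `ask()` returns a reply, the chat side of the configuration is reachable; on a CPU-only machine even this single request can take a while.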

2.4 Building the index

Next, build the index. The --reporter "rich" option has to be passed explicitly here; without it the command fails.

nohup graphrag index --root ./ragtest --reporter "rich" > run.log &

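Running Ollama on a local CPU is not really practical: inference takes so long that it triggers all sorts of odd timeouts and errors, so a GPU is strongly preferable.

An external LLM service can be used instead, but GraphRAG indexing consumes a large number of tokens, so be prepared for the cost.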

Appendix

Problem 1: Invalid value for '--reporter'

Invalid value for '--reporter' (env var: 'None'): <ReporterType.RICH: 'rich'> is not one of 'rich', 'print', 'none'.

Fix: pass the reporter value explicitly as one of "none", "print", or "rich", for example --reporter "rich".

References

---

GraphRAG

https://github.com/microsoft/graphrag

Project Gutenberg

https://www.gutenberg.org/

Global Search Notebook

https://microsoft.github.io/graphrag/examples_notebooks/global_search/

GraphRAG: exploring the fusion of knowledge graphs and retrieval augmentation

https://blog.csdn.net/liliang199/article/details/151189579

GraphragTest: uses the Alibaba API directly, which keeps the overall cost relatively manageable.

https://github.com/NanGePlus/GraphragTest

GraphRAG (latest version) + Ollama local deployment, with Chinese and English examples

https://juejin.cn/post/7439046849883226146

Foolproof walkthrough: local deployment of GraphRAG and Ollama, with the pitfalls encountered

https://blog.csdn.net/weixin_42107217/article/details/141649920
