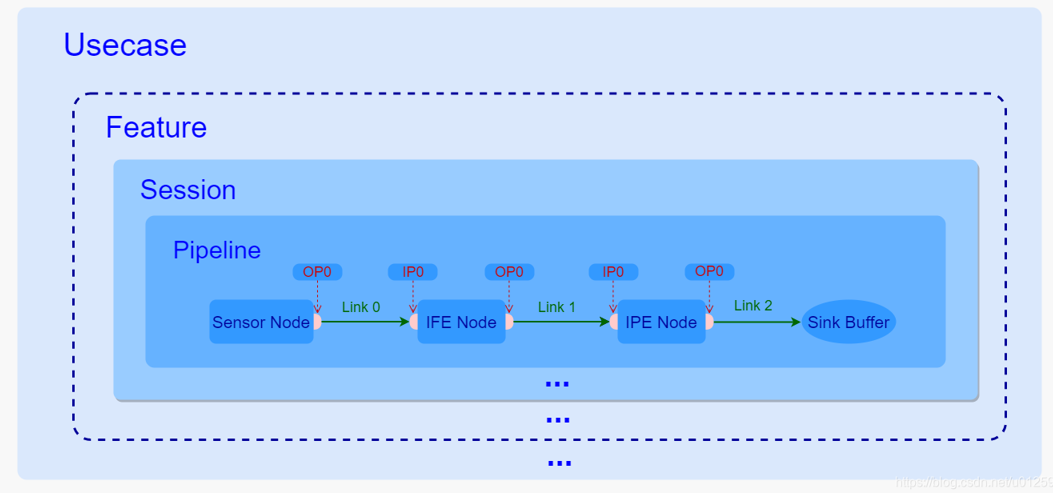

Component relationships

Overall flow:

- camxhal3.cpp:704 open()
- camxhal3.cpp:1423 configure_streams()
- chxextensionmodule.cpp:2810 InitializeOverrideSession
- chxusecaseutils.cpp:850 GetMatchingUsecase()
- chxadvancedcamerausecase.cpp:4729 Initialize()
- chxadvancedcamerausecase.cpp:5757 SelectFeatures()

Usecase ID matching logic

GetMatchingUsecase takes the camera information (pCamInfo) and the stream configuration (pStreamConfig) and returns the best-fitting usecase ID (UsecaseId).

– UsecaseSelector::GetMatchingUsecase

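Summary of the code logic

- Highest-priority usecases (both return immediately):
  - QuadCFA usecase (specific sensor and stream configuration)
  - Super slow motion mode
- Multi-camera usecases:
  - If VR mode is enabled, select MultiCameraVR
  - Otherwise, if there is more than one stream, select MultiCamera
- Single-camera usecases:
  - Select a usecase according to the number of streams (2/3/4)
  - Take several feature flags into account: ZSL, GPU processing, MFNR, EIS, and so on
- Special usecase:
  - Finally, check whether this is the torch widget usecase
- Default usecase:
  - If no other condition matches, use the Default usecase

Key decision conditions

- Number of streams (num_streams)
- Camera type and number of cameras
- Whether the various feature modules are enabled (queried through ExtensionModule)
- Checks for specific stream configurations (through the IsXXXStreamConfig functions)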
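The priority order summarised above can be sketched as a small pure function. This is only an illustration: `SelectionInput` and `SelectUsecase` are hypothetical names, and every field collapses one of the real checks (QuadCFAMatchingUsecase, GetDCVRMode, IsTorchWidgetUsecase, the per-stream-count switch) into a plain value. It is not the CHI-CDK implementation.

```cpp
#include <cassert>

// Subset of UsecaseId, with the same numeric values as the real enum.
enum class UsecaseId { Default = 1, PreviewZSL = 3, MultiCamera = 6, QuadCFA = 7,
                       MultiCameraVR = 9, Torch = 10, SuperSlowMotionFRC = 13 };

// Hypothetical input: each field stands in for one check made by GetMatchingUsecase.
struct SelectionInput
{
    bool      quadCfaMatch;        // 2 streams, QuadCFA sensor, snapshot larger than binning size
    bool      superSlowMotion;     // operation_mode == StreamConfigModeSuperSlowMotionFRC
    unsigned  numPhysicalCameras;  // pCamInfo->numPhysicalCameras
    unsigned  numStreams;          // pStreamConfig->num_streams
    bool      vrMode;              // GetDCVRMode()
    bool      torchWidget;         // IsTorchWidgetUsecase()
    UsecaseId singleCameraChoice;  // outcome of the switch on the stream count
};

UsecaseId SelectUsecase(const SelectionInput& in)
{
    assert(in.numPhysicalCameras >= 1);  // a logical camera has at least one physical camera

    // Early returns: nothing further down can override these two.
    if (in.quadCfaMatch)    return UsecaseId::QuadCFA;
    if (in.superSlowMotion) return UsecaseId::SuperSlowMotionFRC;

    UsecaseId id = UsecaseId::Default;
    if ((in.numPhysicalCameras > 1) && in.vrMode)
    {
        id = UsecaseId::MultiCameraVR;
    }
    else if ((in.numPhysicalCameras > 1) && (in.numStreams > 1))
    {
        id = UsecaseId::MultiCamera;
    }
    else
    {
        id = in.singleCameraChoice;
    }

    // The torch check runs last and overrides whatever was selected above.
    if (in.torchWidget) id = UsecaseId::Torch;
    return id;
}
```

Note that the torch widget check can replace a multi-camera result, but never a QuadCFA or SuperSlowMotionFRC result, because those two paths return before the check is reached.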

    UsecaseId UsecaseSelector::GetMatchingUsecase(
        const LogicalCameraInfo*        pCamInfo,       // logical camera information
        camera3_stream_configuration_t* pStreamConfig)  // stream configuration
    {
        UsecaseId usecaseId  = UsecaseId::Default;                             // default usecase
        UINT32    VRDCEnable = ExtensionModule::GetInstance()->GetDCVRMode();  // VR mode setting

        // Check for a QuadCFA sensor that meets the specific conditions
        if ((pStreamConfig->num_streams == 2) && IsQuadCFASensor(pCamInfo, NULL) &&
            (LogicalCameraType_Default == pCamInfo->logicalCameraType))
        {
            // If the snapshot size is smaller than the sensor binning size, select the default ZSL usecase.
            // Only select the QuadCFA usecase when the snapshot size is larger than the sensor binning size.
            if (TRUE == QuadCFAMatchingUsecase(pCamInfo, pStreamConfig))
            {
                usecaseId = UsecaseId::QuadCFA;
                CHX_LOG_CONFIG("Quad CFA usecase selected");
                return usecaseId;
            }
        }

        // Check for super slow motion mode
        if (pStreamConfig->operation_mode == StreamConfigModeSuperSlowMotionFRC)
        {
            usecaseId = UsecaseId::SuperSlowMotionFRC;
            CHX_LOG_CONFIG("SuperSlowMotionFRC usecase selected");
            return usecaseId;
        }

        // Reset the usecase flags
        VideoEISV2Usecase   = 0;
        VideoEISV3Usecase   = 0;
        GPURotationUsecase  = FALSE;
        GPUDownscaleUsecase = FALSE;

        // Multi-camera VR usecase
        if ((NULL != pCamInfo) && (pCamInfo->numPhysicalCameras > 1) && VRDCEnable)
        {
            CHX_LOG_CONFIG("MultiCameraVR usecase selected");
            usecaseId = UsecaseId::MultiCameraVR;
        }
        // Multi-camera usecase
        else if ((NULL != pCamInfo) && (pCamInfo->numPhysicalCameras > 1) && (pStreamConfig->num_streams > 1))
        {
            CHX_LOG_CONFIG("MultiCamera usecase selected");
            usecaseId = UsecaseId::MultiCamera;
        }
        else
        {
            SnapshotStreamConfig snapshotStreamConfig;
            CHISTREAM**          ppChiStreams = reinterpret_cast<CHISTREAM**>(pStreamConfig->streams);

            // Select the usecase according to the number of streams
            switch (pStreamConfig->num_streams)
            {
                case 2: // 2 streams
                    if (TRUE == IsRawJPEGStreamConfig(pStreamConfig))
                    {
                        CHX_LOG_CONFIG("Raw + JPEG usecase selected");
                        usecaseId = UsecaseId::RawJPEG;
                        break;
                    }

                    // Check whether ZSL is enabled
                    if (FALSE == m_pExtModule->DisableZSL())
                    {
                        if (TRUE == IsPreviewZSLStreamConfig(pStreamConfig))
                        {
                            usecaseId = UsecaseId::PreviewZSL;
                            CHX_LOG_CONFIG("ZSL usecase selected");
                        }
                    }

                    // Check whether GPU rotation is enabled
                    if (TRUE == m_pExtModule->UseGPURotationUsecase())
                    {
                        CHX_LOG_CONFIG("GPU Rotation usecase flag set");
                        GPURotationUsecase = TRUE;
                    }

                    // Check whether GPU downscale is enabled
                    if (TRUE == m_pExtModule->UseGPUDownscaleUsecase())
                    {
                        CHX_LOG_CONFIG("GPU Downscale usecase flag set");
                        GPUDownscaleUsecase = TRUE;
                    }

                    // Check whether MFNR (multi-frame noise reduction) is enabled
                    if (TRUE == m_pExtModule->EnableMFNRUsecase())
                    {
                        if (TRUE == MFNRMatchingUsecase(pStreamConfig))
                        {
                            usecaseId = UsecaseId::MFNR;
                            CHX_LOG_CONFIG("MFNR usecase selected");
                        }
                    }

                    // Check whether HFR (high frame rate) without 3A is enabled
                    if (TRUE == m_pExtModule->EnableHFRNo3AUsecas())
                    {
                        CHX_LOG_CONFIG("HFR without 3A usecase flag set");
                        HFRNo3AUsecase = TRUE;
                    }
                    break;

                case 3: // 3 streams
                    // Set the EIS (electronic image stabilization) flags
                    VideoEISV2Usecase = m_pExtModule->EnableEISV2Usecase();
                    VideoEISV3Usecase = m_pExtModule->EnableEISV3Usecase();

                    // Check for ZSL preview
                    if (FALSE == m_pExtModule->DisableZSL() && (TRUE == IsPreviewZSLStreamConfig(pStreamConfig)))
                    {
                        usecaseId = UsecaseId::PreviewZSL;
                        CHX_LOG_CONFIG("ZSL usecase selected");
                    }
                    // Check for a Raw + JPEG configuration
                    else if (TRUE == IsRawJPEGStreamConfig(pStreamConfig) && FALSE == m_pExtModule->DisableZSL())
                    {
                        CHX_LOG_CONFIG("Raw + JPEG usecase selected");
                        usecaseId = UsecaseId::RawJPEG;
                    }
                    // Check for a video liveshot configuration
                    else if ((FALSE == IsVideoEISV2Enabled(pStreamConfig)) && (FALSE == IsVideoEISV3Enabled(pStreamConfig)) &&
                             (TRUE == IsVideoLiveShotConfig(pStreamConfig)) && (FALSE == m_pExtModule->DisableZSL()))
                    {
                        CHX_LOG_CONFIG("Video With Liveshot, ZSL usecase selected");
                        usecaseId = UsecaseId::VideoLiveShot;
                    }

                    // Handle BPS cameras and the EIS settings
                    if ((NULL != pCamInfo) &&
                        (RealtimeEngineType_BPS == pCamInfo->ppDeviceInfo[0]->pDeviceConfig->realtimeEngine))
                    {
                        if ((TRUE == IsVideoEISV2Enabled(pStreamConfig)) || (TRUE == IsVideoEISV3Enabled(pStreamConfig)))
                        {
                            CHX_LOG_CONFIG("BPS Camera EIS V2 = %d, EIS V3 = %d",
                                           IsVideoEISV2Enabled(pStreamConfig),
                                           IsVideoEISV3Enabled(pStreamConfig));
                            // For a BPS camera with at least one EIS enabled,
                            // set a (pseudo) usecase that leads to the feature2 selector
                            usecaseId = UsecaseId::PreviewZSL;
                        }
                    }
                    break;

                case 4: // 4 streams
                    GetSnapshotStreamConfiguration(pStreamConfig->num_streams, ppChiStreams, snapshotStreamConfig);
                    // Check for HEIC format together with a Raw stream
                    if ((SnapshotStreamType::HEIC == snapshotStreamConfig.type) && (NULL != snapshotStreamConfig.pRawStream))
                    {
                        CHX_LOG_CONFIG("Raw + HEIC usecase selected");
                        usecaseId = UsecaseId::RawJPEG;
                    }
                    break;

                default: // everything else
                    CHX_LOG_CONFIG("Default usecase selected");
                    break;
            }
        }

        // Check whether this is the torch widget usecase
        if (TRUE == ExtensionModule::GetInstance()->IsTorchWidgetUsecase())
        {
            CHX_LOG_CONFIG("Torch widget usecase selected");
            usecaseId = UsecaseId::Torch;
        }

        CHX_LOG_INFO("usecase ID:%d", usecaseId);
        return usecaseId;
    }

    /// @brief Usecase identifying enums
    enum class UsecaseId
    {
        NoMatch            = 0,
        Default            = 1,
        Preview            = 2,
        PreviewZSL         = 3,
        MFNR               = 4,
        MFSR               = 5,
        MultiCamera        = 6,
        QuadCFA            = 7,
        RawJPEG            = 8,
        MultiCameraVR      = 9,
        Torch              = 10,
        YUVInBlobOut       = 11,
        VideoLiveShot      = 12,
        SuperSlowMotionFRC = 13,
        MaxUsecases        = 14,
    };
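GetMatchingUsecase logs its result as a bare number ("usecase ID:%d"), so when reading logs it helps to map that number back to an enum name. The helper below is a minimal sketch; `UsecaseIdName` is a hypothetical function that does not exist in CHI-CDK, and the name table is simply copied from the enum above.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

enum class UsecaseId
{
    NoMatch = 0, Default = 1, Preview = 2, PreviewZSL = 3, MFNR = 4, MFSR = 5,
    MultiCamera = 6, QuadCFA = 7, RawJPEG = 8, MultiCameraVR = 9, Torch = 10,
    YUVInBlobOut = 11, VideoLiveShot = 12, SuperSlowMotionFRC = 13, MaxUsecases = 14,
};

// Hypothetical log-reading helper: turns the number printed by
// CHX_LOG_INFO("usecase ID:%d", usecaseId) into the enum name.
std::string UsecaseIdName(int rawId)
{
    static const char* const kNames[] =
    {
        "NoMatch", "Default", "Preview", "PreviewZSL", "MFNR", "MFSR", "MultiCamera",
        "QuadCFA", "RawJPEG", "MultiCameraVR", "Torch", "YUVInBlobOut",
        "VideoLiveShot", "SuperSlowMotionFRC",
    };
    constexpr std::size_t kCount = sizeof(kNames) / sizeof(kNames[0]);
    static_assert(kCount == static_cast<std::size_t>(UsecaseId::MaxUsecases),
                  "one name per enum value below MaxUsecases");

    if ((rawId < 0) || (static_cast<std::size_t>(rawId) >= kCount))
    {
        return "Unknown";
    }
    return kNames[rawId];
}
```

For example, a log line ending in "usecase ID:3" corresponds to PreviewZSL, and "usecase ID:10" to Torch.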

Feature matching

Feature definitions

    /// @brief Advance feature types
    enum AdvanceFeatureType
    {
        AdvanceFeatureNone     = 0x0,                   ///< mask for none features
        AdvanceFeatureZSL      = 0x1,                   ///< mask for feature ZSL
        AdvanceFeatureMFNR     = 0x2,                   ///< mask for feature MFNR
        AdvanceFeatureHDR      = 0x4,                   ///< mask for feature HDR(AE_Bracket)
        AdvanceFeatureSWMF     = 0x8,                   ///< mask for feature SWMF
        AdvanceFeatureMFSR     = 0x10,                  ///< mask for feature MFSR
        AdvanceFeatureQCFA     = 0x20,                  ///< mask for feature QuadCFA
        AdvanceFeature2Wrapper = 0x40,                  ///< mask for feature2 wrapper
        AdvanceFeatureCountMax = AdvanceFeature2Wrapper ///< Max of advance feature mask
    };
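Because each AdvanceFeatureType value is a single bit, the mask that SelectFeatures logs ("enabled feature mask:%x") can be decoded by testing one bit at a time, the same `X == (mask & X)` pattern the function itself uses. The sketch below assumes a hypothetical helper `DecodeFeatureMask`; it is not part of the CHI-CDK sources, and the fixed underlying type on the enum is added only to keep the example portable.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

enum AdvanceFeatureType : std::uint32_t
{
    AdvanceFeatureNone     = 0x0,
    AdvanceFeatureZSL      = 0x1,
    AdvanceFeatureMFNR     = 0x2,
    AdvanceFeatureHDR      = 0x4,
    AdvanceFeatureSWMF     = 0x8,
    AdvanceFeatureMFSR     = 0x10,
    AdvanceFeatureQCFA     = 0x20,
    AdvanceFeature2Wrapper = 0x40,
};

// Hypothetical helper: lists the feature names whose bit is set in the mask.
std::vector<std::string> DecodeFeatureMask(std::uint32_t mask)
{
    struct Entry { std::uint32_t bit; const char* name; };
    static const Entry kEntries[] =
    {
        { AdvanceFeatureZSL,      "ZSL"      },
        { AdvanceFeatureMFNR,     "MFNR"     },
        { AdvanceFeatureHDR,      "HDR"      },
        { AdvanceFeatureSWMF,     "SWMF"     },
        { AdvanceFeatureMFSR,     "MFSR"     },
        { AdvanceFeatureQCFA,     "QCFA"     },
        { AdvanceFeature2Wrapper, "2Wrapper" },
    };

    std::vector<std::string> names;
    for (const Entry& entry : kEntries)
    {
        // Same test as in SelectFeatures: the bit counts as enabled only if it survives the AND.
        if (entry.bit == (mask & entry.bit))
        {
            names.push_back(entry.name);
        }
    }
    return names;
}
```

In FastShutter mode SelectFeatures overwrites the mask with AdvanceFeatureSWMF | AdvanceFeatureMFNR, which is 0xA and decodes to MFNR and SWMF.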

    CDKResult AdvancedCameraUsecase::FeatureSetup(camera3_stream_configuration_t* pStreamConfig)
    {
        CDKResult result = CDKResultSuccess;

        if ((UsecaseId::PreviewZSL    == m_usecaseId) ||
            (UsecaseId::YUVInBlobOut  == m_usecaseId) ||
            (UsecaseId::VideoLiveShot == m_usecaseId) ||
            (UsecaseId::QuadCFA       == m_usecaseId) ||
            (UsecaseId::RawJPEG       == m_usecaseId))
        {
            SelectFeatures(pStreamConfig);
        }
        else if (UsecaseId::MultiCamera == m_usecaseId)
        {
            SelectFeatures(pStreamConfig);
        }

        return result;
    }

    // START of OEM to change section

    VOID AdvancedCameraUsecase::SelectFeatures(camera3_stream_configuration_t* pStreamConfig)
    {
        // OEM to change
        // This function decides which features to run based on the current pStreamConfig and the static settings
        INT32  index                  = 0;
        UINT32 enabledAdvanceFeatures = 0;

        // Get the mask of enabled advanced features from ExtensionModule
        enabledAdvanceFeatures = ExtensionModule::GetInstance()->GetAdvanceFeatureMask();
        CHX_LOG("SelectFeatures(), enabled feature mask:%x", enabledAdvanceFeatures);

        // In FastShutter mode, force the SWMF and MFNR features on
        if (StreamConfigModeFastShutter == ExtensionModule::GetInstance()->GetOpMode(m_cameraId))
        {
            enabledAdvanceFeatures = AdvanceFeatureSWMF|AdvanceFeatureMFNR;
        }
        CHX_LOG("SelectFeatures(), enabled feature mask:%x", enabledAdvanceFeatures);

        // Iterate over all physical camera devices
        for (UINT32 physicalCameraIndex = 0 ; physicalCameraIndex < m_numOfPhysicalDevices ; physicalCameraIndex++)
        {
            index = 0;

            // Check whether the current usecase is one of the following
            if ((UsecaseId::PreviewZSL    == m_usecaseId) ||
                (UsecaseId::MultiCamera   == m_usecaseId) ||
                (UsecaseId::QuadCFA       == m_usecaseId) ||
                (UsecaseId::VideoLiveShot == m_usecaseId) ||
                (UsecaseId::RawJPEG       == m_usecaseId))
            {
                // If the MFNR (multi-frame noise reduction) feature is enabled
                if (AdvanceFeatureMFNR == (enabledAdvanceFeatures & AdvanceFeatureMFNR))
                {
                    // Pick up the offline noise reprocessing setting
                    m_isOfflineNoiseReprocessEnabled = ExtensionModule::GetInstance()->EnableOfflineNoiseReprocessing();
                    // FD (face detection) stream buffers are needed
                    m_isFDstreamBuffersNeeded = TRUE;
                }

                // If the SWMF (software multi-frame), HDR or Feature2 wrapper feature is enabled
                if ((AdvanceFeatureSWMF == (enabledAdvanceFeatures & AdvanceFeatureSWMF)) ||
                    (AdvanceFeatureHDR == (enabledAdvanceFeatures & AdvanceFeatureHDR)) ||
                    ((AdvanceFeature2Wrapper == (enabledAdvanceFeatures & AdvanceFeature2Wrapper))))
                {
                    // Fill in the create-input structure for Feature2Wrapper
                    Feature2WrapperCreateInputInfo feature2WrapperCreateInputInfo;
                    feature2WrapperCreateInputInfo.pUsecaseBase           = this;
                    feature2WrapperCreateInputInfo.pMetadataManager       = m_pMetadataManager;
                    feature2WrapperCreateInputInfo.pFrameworkStreamConfig =
                        reinterpret_cast<ChiStreamConfigInfo*>(pStreamConfig);

                    // Clear the pHalStream pointer of every stream
                    for (UINT32 i = 0; i < feature2WrapperCreateInputInfo.pFrameworkStreamConfig->numStreams; i++)
                    {
                        feature2WrapperCreateInputInfo.pFrameworkStreamConfig->pChiStreams[i]->pHalStream = NULL;
                    }

                    // If Feature2Wrapper has not been created yet
                    if (NULL == m_pFeature2Wrapper)
                    {
                        // For a multi-camera usecase
                        if (TRUE == IsMultiCameraUsecase())
                        {
                            // If the stream configuration has no fusion stream, set the input/output type to YUV_OUT
                            if (FALSE == IsFusionStreamIncluded(pStreamConfig))
                            {
                                feature2WrapperCreateInputInfo.inputOutputType =
                                    static_cast<UINT32>(InputOutputType::YUV_OUT);
                            }

                            // Add the internal input streams (RDI and FD streams)
                            for (UINT8 streamIndex = 0; streamIndex < m_numOfPhysicalDevices; streamIndex++)
                            {
                                feature2WrapperCreateInputInfo.internalInputStreams.push_back(m_pRdiStream[streamIndex]);
                                feature2WrapperCreateInputInfo.internalInputStreams.push_back(m_pFdStream[streamIndex]);
                            }

                            // FD stream buffers are needed
                            m_isFDstreamBuffersNeeded = TRUE;
                        }

                        // Create the Feature2Wrapper instance
                        m_pFeature2Wrapper = Feature2Wrapper::Create(&feature2WrapperCreateInputInfo, physicalCameraIndex);
                    }

                    // Add the Feature2Wrapper to the list of enabled features
                    m_enabledFeatures[physicalCameraIndex][index] = m_pFeature2Wrapper;
                    index++;
                }
            }

            // Record how many features are enabled for this physical camera
            m_enabledFeaturesCount[physicalCameraIndex] = index;
        }

        // If the first physical camera has enabled features
        if (m_enabledFeaturesCount[0] > 0)
        {
            // If there is no active feature yet, use the first enabled one
            if (NULL == m_pActiveFeature)
            {
                m_pActiveFeature = m_enabledFeatures[0][0];
            }
            // Log the number of selected features and the feature type used for preview
            CHX_LOG_INFO("num features selected:%d, FeatureType for preview:%d",
                         m_enabledFeaturesCount[0], m_pActiveFeature->GetFeatureType());
        }
        else
        {
            CHX_LOG_INFO("No features selected");
        }

        // Set the last snapshot feature to the currently active feature
        m_pLastSnapshotFeature = m_pActiveFeature;
    }

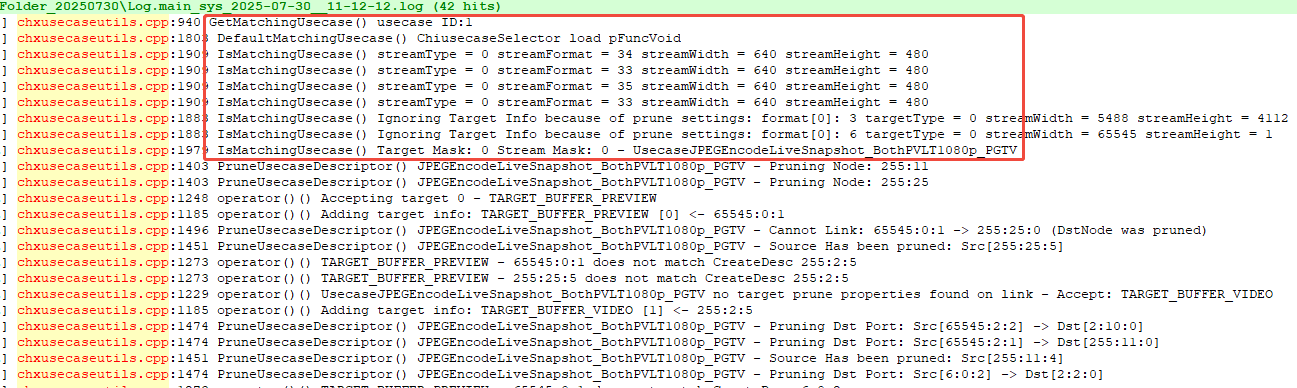

Usecase matching logic

- For PreviewZSL, YUVInBlobOut, VideoLiveShot, MultiCamera, QuadCFA and RawJPEG, call ConfigureStream and BuildUsecase directly

- For every other mode, match again through DefaultMatchingUsecase

    CDKResult AdvancedCameraUsecase::SelectUsecaseConfig(
        LogicalCameraInfo*              pCameraInfo,   ///< Camera info
        camera3_stream_configuration_t* pStreamConfig) ///< Stream configuration
    {
        if ((UsecaseId::PreviewZSL    == m_usecaseId) ||
            (UsecaseId::YUVInBlobOut  == m_usecaseId) ||
            (UsecaseId::VideoLiveShot == m_usecaseId) ||
            (UsecaseId::MultiCamera   == m_usecaseId) ||
            (UsecaseId::QuadCFA       == m_usecaseId) ||
            (UsecaseId::RawJPEG       == m_usecaseId))
        {
            ConfigureStream(pCameraInfo, pStreamConfig);
            BuildUsecase(pCameraInfo, pStreamConfig);
        }
        else
        {
            CHX_LOG("Initializing using default usecase matching");
            m_pChiUsecase = UsecaseSelector::DefaultMatchingUsecase(pStreamConfig);
        }
    }

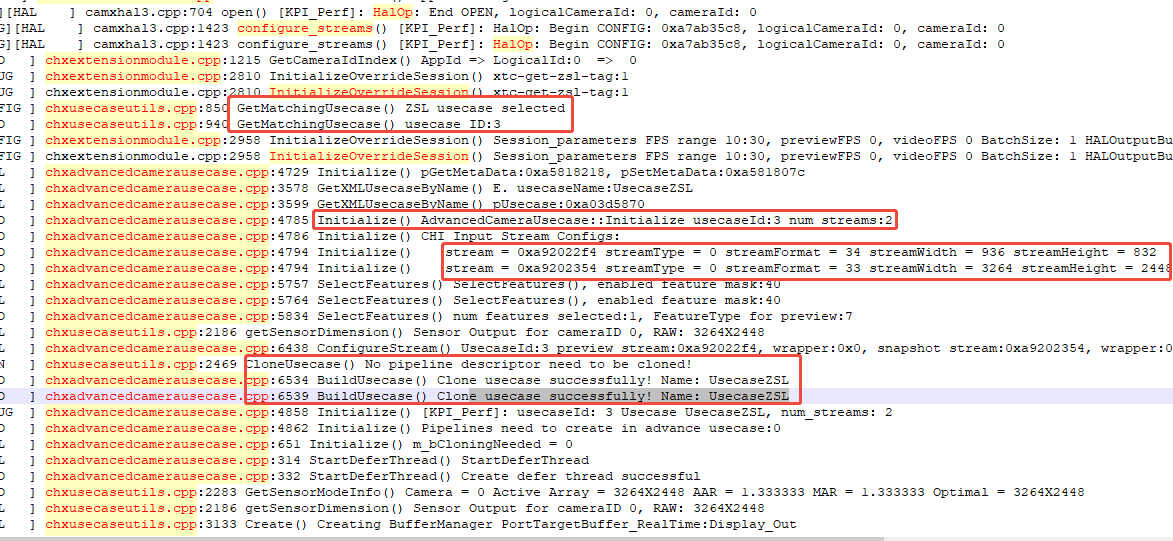

PreviewZSL mode

In ZSL mode, pAdvancedUsecase is assigned UsecaseZSL. BuildUsecase then calls CloneUsecase, which clones either the ZSL tuning usecase or the advanced usecase depending on the configuration. The usual path is pAdvancedUsecase.

    AdvancedCameraUsecase::Initialize
    {
        // Here ZSL_USECASE_NAME = "UsecaseZSL"
        pAdvancedUsecase = GetXMLUsecaseByName(ZSL_USECASE_NAME);
    }

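- BuildUsecase
  - Add pipelines according to the features
  - Clone and configure the usecase template according to the features
  - Set up the mapping between pipelines, sessions and cameras (Map camera ID and session ID for the pipeline and prepare for pipeline/session creation)
  - Override the stream configuration for the features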

    AdvancedCameraUsecase::BuildUsecase()
    {
        // Clone either the ZSL tuning usecase or the advanced usecase, depending on the configuration
        if (static_cast<UINT>(UsecaseZSLTuningId) == ExtensionModule::GetInstance()->OverrideUseCase())
        {
            m_pClonedUsecase = UsecaseSelector::CloneUsecase(pZslTuningUsecase, totalPipelineCount, pipelineIDMap);
        }
        else
        {
            m_pClonedUsecase = UsecaseSelector::CloneUsecase(pAdvancedUsecase, totalPipelineCount, pipelineIDMap);
        }
    }

Default mode

Call flow

m_pChiUsecase = UsecaseSelector::DefaultMatchingUsecase(pStreamConfig);

--ChiUsecase* GetDefaultMatchingUsecase(camera3_stream_configuration_t* pStreamConfig)

---UsecaseSelector::DefaultMatchingUsecaseSelection(pStreamConfig)

----IsMatchingUsecase(pStreamConfig, pUsecase, &pruneSettings);

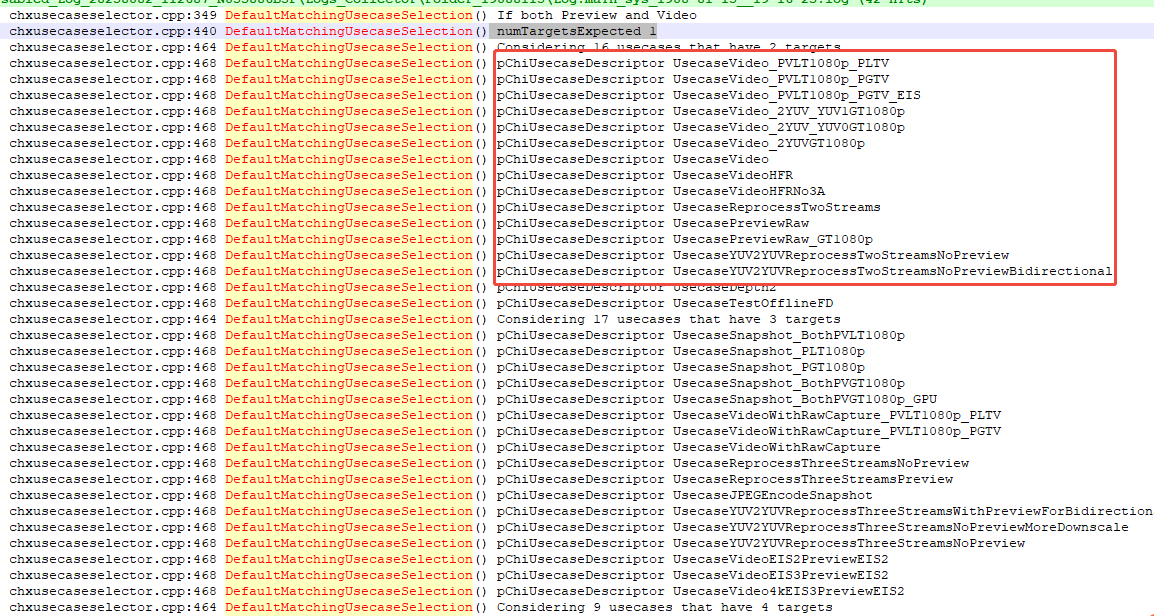

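- DefaultMatchingUsecaseSelection

- First, try to match the EISv3 usecase (log: "Selected EISv3 usecase")

- Next, try to match the EISv2 usecase (log: "Selected EISv2 usecase")

- Otherwise, match according to pStreamConfig->num_streams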

    extern "C" CAMX_VISIBILITY_PUBLIC ChiUsecase* UsecaseSelector::DefaultMatchingUsecaseSelection(
        camera3_stream_configuration_t* pStreamConfig)
    {
        auto UsecaseMatches = [&pStreamConfig, &pruneSettings](const ChiUsecase* const pUsecase) -> BOOL
        {
            return IsMatchingUsecase(pStreamConfig, pUsecase, &pruneSettings);
        };

        if (pStreamConfig->num_streams <= ChiMaxNumTargets)
        {
            ···
            else if (NULL == pSelectedUsecase)
            {
                // Match the EIS V3 usecase
                if (TRUE == IsVideoEISV3Enabled(pStreamConfig))
                {
                    ···
                    if (TRUE == UsecaseMatches(&Usecases3Target[usecaseEIS3Id]))
                    {
                        CHX_LOG("Selected EISv3 usecase");
                        pSelectedUsecase = &Usecases3Target[usecaseEIS3Id];
                    }
                }

                // Match the EIS V2 usecase
                if ((TRUE == IsVideoEISV2Enabled(pStreamConfig)) && (NULL == pSelectedUsecase) &&
                    (TRUE == UsecaseMatches(&Usecases3Target[UsecaseVideoEIS2PreviewEIS2Id])))
                {
                    CHX_LOG("Selected EISv2 usecase");
                    pSelectedUsecase = &Usecases3Target[UsecaseVideoEIS2PreviewEIS2Id];
                }

                // This if block is only for kamorta usecases where Preview & Video streams are present
                if ((pStreamConfig->num_streams > 1) && (NULL == pSelectedUsecase))
                {
                    // If both Preview and Video < 1080p then only Preview < Video and Preview >Video Scenario occurs
                    if ((numYUVStreams == 2) && (YUV0Height <= TFEMaxHeight && YUV0Width <= TFEMaxWidth) &&
                        (YUV1Height <= TFEMaxHeight && YUV1Width <= TFEMaxWidth))
                    {
                        switch (pStreamConfig->num_streams)
                        {
                            case 2:
                                if (((YUV0Height * YUV0Width) < (YUV1Height * YUV1Width)) &&
                                    (TRUE == UsecaseMatches(&Usecases2Target[UsecaseVideo_PVLT1080p_PLTVId])))
                                {
                                    pSelectedUsecase = &Usecases2Target[UsecaseVideo_PVLT1080p_PLTVId];
                                }
                                else if (TRUE == UsecaseMatches(&Usecases2Target[UsecaseVideo_PVLT1080p_PGTVId]))
                                {
                                    pSelectedUsecase = &Usecases2Target[UsecaseVideo_PVLT1080p_PGTVId];
                                }
                                break;
                            case 3:
                                if (TRUE == bJpegStreamExists)
                                {
                                    // JPEG is taking from RealTime
                                    if (((YUV0Height * YUV0Width) < (YUV1Height * YUV1Width)) &&
                                        (TRUE == UsecaseMatches(&Usecases5Target[UsecaseJPEGEncodeLiveSnapshot_BothPVLT1080p_PLTVId])))
                                    {
                                        pSelectedUsecase = &Usecases5Target[UsecaseJPEGEncodeLiveSnapshot_BothPVLT1080p_PLTVId];
                                    }
                                    else if (TRUE == UsecaseMatches(&Usecases5Target[UsecaseJPEGEncodeLiveSnapshot_BothPVLT1080p_PGTVId]))
                                    {
                                        pSelectedUsecase = &Usecases5Target[UsecaseJPEGEncodeLiveSnapshot_BothPVLT1080p_PGTVId];
                                    }
                                }
                                break;
                            // For HEIC
                            case 4:
                                if (TRUE == bHEICStreamExists)
                                {
                                    if (((YUV0Height * YUV0Width) < (YUV1Height * YUV1Width)) &&
                                        (TRUE == UsecaseMatches(&Usecases5Target[UsecaseJPEGEncodeLiveSnapshot_BothPVLT1080p_PLTVId])))
                                    {
                                        pSelectedUsecase = &Usecases5Target[UsecaseJPEGEncodeLiveSnapshot_BothPVLT1080p_PLTVId];
                                    }
                                    else if (TRUE == UsecaseMatches(&Usecases5Target[UsecaseJPEGEncodeLiveSnapshot_BothPVLT1080p_PGTVId]))
                                    {
                                        pSelectedUsecase = &Usecases5Target[UsecaseJPEGEncodeLiveSnapshot_BothPVLT1080p_PGTVId];
                                    }
                                }
                                break;
                            default:
                                break;
                        }
                    }
                }

                // If every match above failed, start the search at pStreamConfig->num_streams - 1
                if (NULL == pSelectedUsecase)
                {
                    if ((StreamConfigModeQTIEISLookAhead == pStreamConfig->operation_mode) ||
                        (StreamConfigModeQTIEISRealTime == pStreamConfig->operation_mode))
                    {
                        // EIS is disabled, ensure that operation_mode is also set accordingly
                        pStreamConfig->operation_mode = 0;
                    }
                    numTargetsExpected = pStreamConfig->num_streams - 1;
                }

                for (/* Initialized outside*/; numTargetsExpected < ChiMaxNumTargets; numTargetsExpected++)
                {
                    pChiTargetUsecases = &PerNumTargetUsecases[numTargetsExpected];
                    if (0 == pChiTargetUsecases->numUsecases)
                    {
                        continue;
                    }
                    CHX_LOG_INFO("Considering %u usecases that have %u targets",
                                 pChiTargetUsecases->numUsecases, numTargetsExpected + 1);

                    for (UINT i = 0; i < pChiTargetUsecases->numUsecases; i++)
                    {
                        const ChiUsecase* pChiUsecaseDescriptor = &pChiTargetUsecases->pChiUsecases[i];
                        CHX_LOG_INFO("pChiUsecaseDescriptor %s ", pChiUsecaseDescriptor->pUsecaseName);
                        ···
                        if (TRUE == UsecaseMatches(pChiUsecaseDescriptor))
                        {
                        }
                        ···
    }

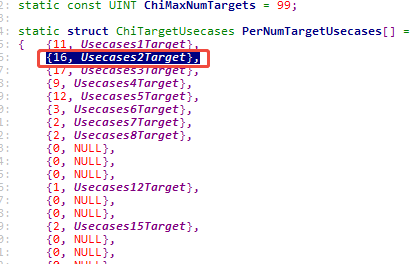

The final fallback matching in the code:

- Start from pStreamConfig->num_streams - 1

- Loop over PerNumTargetUsecases to find a usecase

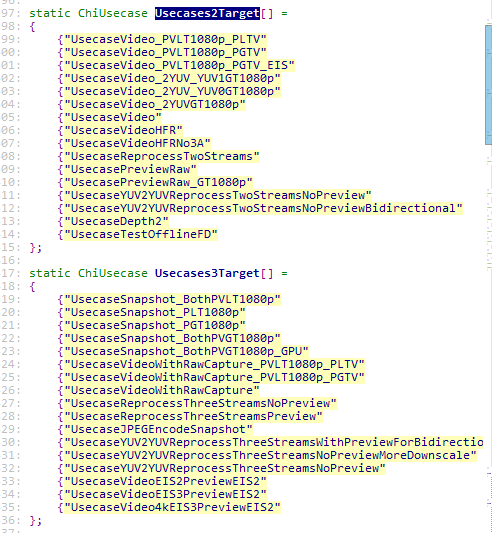

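The -1 in pStreamConfig->num_streams - 1 is there because PerNumTargetUsecases is indexed from zero: entry i holds the usecases that have i + 1 targets, which is why the log line in the loop prints numTargetsExpected + 1.

For example, with 2 streams, pStreamConfig->num_streams - 1 = 2 - 1 = 1, and PerNumTargetUsecases[1] corresponds to {16, Usecases2Target}.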
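The indexing can be illustrated with a toy table. Everything in the sketch below is a stand-in: `Usecase`, `TargetUsecases`, `kPerNumTarget`, the placeholder usecase names and `FindUsecase` are hypothetical, and it assumes that the first usecase accepted by the predicate wins, which the elided excerpt above does not show.

```cpp
#include <cassert>
#include <cstddef>

struct Usecase        { const char* name; };                                   // stand-in for ChiUsecase
struct TargetUsecases { std::size_t numUsecases; const Usecase* pUsecases; };  // stand-in for ChiTargetUsecases

static const Usecase kTwoTarget[]   = { { "TwoTargetA" }, { "TwoTargetB" } };  // placeholder names
static const Usecase kThreeTarget[] = { { "ThreeTargetA" } };

// Entry i holds the usecases that have i + 1 targets.
static const TargetUsecases kPerNumTarget[] =
{
    { 0, nullptr      },  // index 0: 1 target (empty in this sketch)
    { 2, kTwoTarget   },  // index 1: 2 targets
    { 1, kThreeTarget },  // index 2: 3 targets
};
static const std::size_t kMaxNumTargets = sizeof(kPerNumTarget) / sizeof(kPerNumTarget[0]);

// Starts at index numStreams - 1 and moves on to buckets with more targets,
// skipping empty buckets, until the predicate accepts a usecase.
template <typename Pred>
const Usecase* FindUsecase(std::size_t numStreams, Pred matches)
{
    assert(numStreams >= 1);
    for (std::size_t idx = numStreams - 1; idx < kMaxNumTargets; ++idx)
    {
        const TargetUsecases& bucket = kPerNumTarget[idx];
        if (0 == bucket.numUsecases)
        {
            continue;
        }
        for (std::size_t i = 0; i < bucket.numUsecases; ++i)
        {
            if (matches(bucket.pUsecases[i]))
            {
                return &bucket.pUsecases[i];
            }
        }
    }
    return nullptr;
}
```

With 2 streams the search starts in bucket 1, the 2-target usecases. With 1 stream it starts in bucket 0, finds it empty, and falls through to bucket 1.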

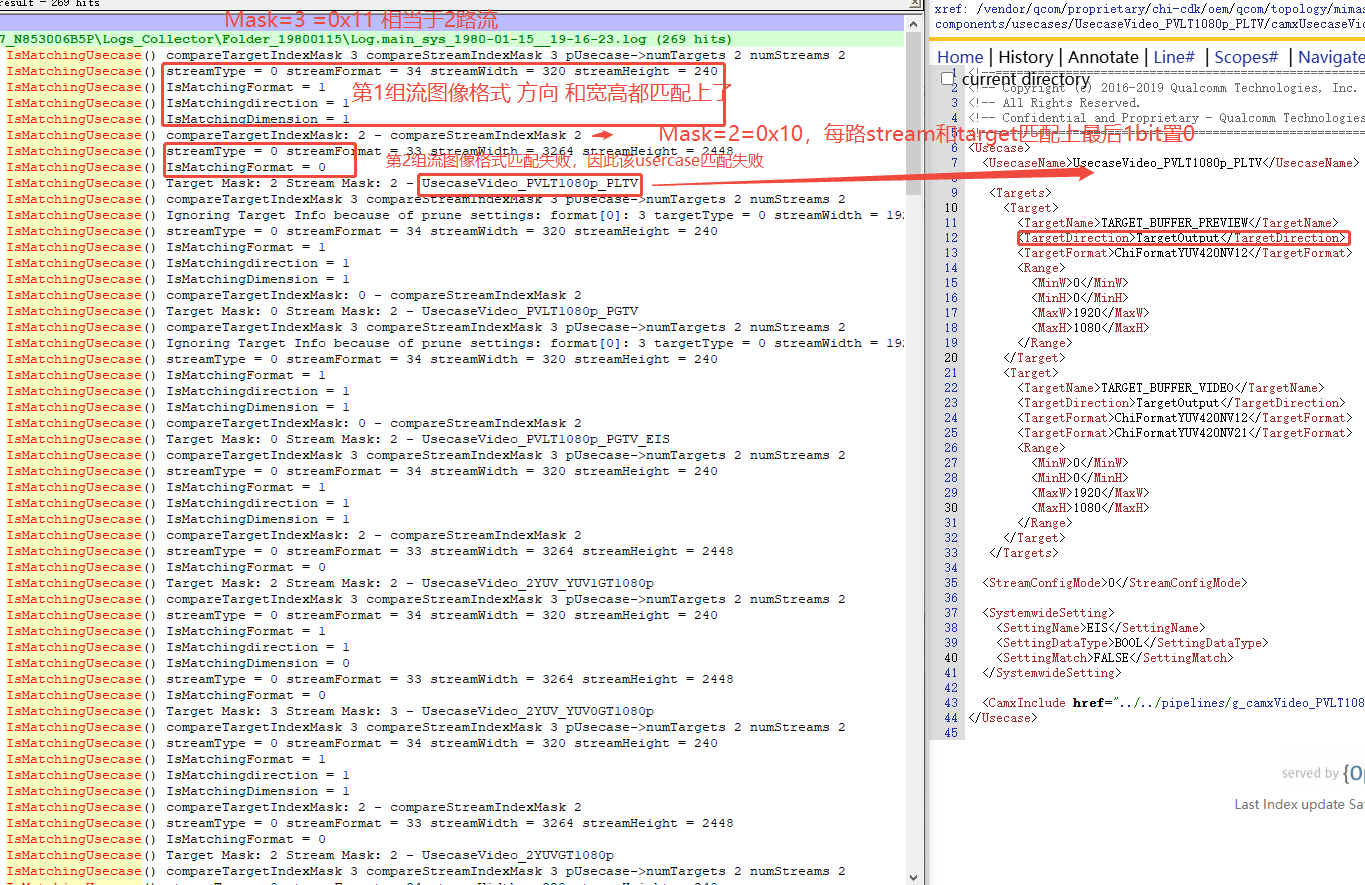

BOOL UsecaseSelector::IsMatchingUsecase

vendor/qcom/proprietary/chi-cdk/core/chiusecase/chxusecaseutils.cpp

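The main matching logic is:

- IsMatchingVideo: checks that a video stream is only paired with a video target

- IsMatchingFormat: compares the image format

- IsMatchingdirection: compares whether the stream is an input or an output

- IsMatchingDimension: checks that the image width and height fall within the required range

streamFormat: the stream formats used for matching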

    typedef enum ChiStreamFormat
    {
        ChiStreamFormatYCrCb420_SP   = 0x00000113,   ///< YCrCb420_SP is mapped to ChiStreamFormatYCbCr420_888 with ZSL flags
        ChiStreamFormatRaw16         = 0x00000020,   ///< Blob format
        ChiStreamFormatBlob          = 0x00000021,   ///< Carries data which does not have a standard image structure (e.g. JPEG)
        ChiStreamFormatImplDefined   = 0x00000022,   ///< Format is up to the device-specific Gralloc implementation.
        ChiStreamFormatYCbCr420_888  = 0x00000023,   ///< Efficient YCbCr/YCrCb 4:2:0 buffer layout, layout-independent
        ChiStreamFormatRawOpaque     = 0x00000024,   ///< Raw Opaque
        ChiStreamFormatRaw10         = 0x00000025,   ///< Raw 10
        ChiStreamFormatRaw12         = 0x00000026,   ///< Raw 12
        ChiStreamFormatRaw64         = 0x00000027,   ///< Blob format
        ChiStreamFormatUBWCNV124R    = 0x00000028,   ///< UBWCNV12-4R
        ChiStreamFormatNV12HEIF      = 0x00000116,   ///< HEIF video YUV420 format
        ChiStreamFormatNV12YUVFLEX   = 0x00000125,   ///< Flex NV12 YUV format with 1 batch
        ChiStreamFormatNV12UBWCFLEX  = 0x00000126,   ///< Flex NV12 UBWC format
        ChiStreamFormatY8            = 0x20203859,   ///< Y 8
        ChiStreamFormatY16           = 0x20363159,   ///< Y 16
        ChiStreamFormatP010          = 0x7FA30C0A,   ///< P010
        ChiStreamFormatUBWCTP10      = 0x7FA30C09,   ///< UBWCTP10
        ChiStreamFormatUBWCNV12      = 0x7FA30C06,   ///< UBWCNV12
        ChiStreamFormatPD10          = 0x7FA30C08,   ///< PD10
    }

BufferFormat: the buffer formats used for matching

    /// @brief Buffer Format
    typedef enum ChiBufferFormat
    {
        ChiFormatJpeg            = 0,       ///< JPEG format.
        ChiFormatY8              = 1,       ///< Luma only, 8 bits per pixel.
        ChiFormatY16             = 2,       ///< Luma only, 16 bits per pixel.
        ChiFormatYUV420NV12      = 3,       ///< YUV 420 format as described by the NV12 fourcc.
        ChiFormatYUV420NV21      = 4,       ///< YUV 420 format as described by the NV21 fourcc.
        ChiFormatYUV420NV16      = 5,       ///< YUV 422 format as described by the NV16 fourcc
        ChiFormatBlob            = 6,       ///< Any non image data
        ChiFormatRawYUV8BIT      = 7,       ///< Packed YUV/YVU raw format. 16 bpp: 8 bits Y and 8 bits UV.
                                            ///  U and V are interleaved as YUYV or YVYV.
        ChiFormatRawPrivate      = 8,       ///< Private RAW formats where data is packed into 64bit word.
                                            ///  8BPP:  64-bit word contains 8 pixels p0-p7, p0 is stored at LSB.
                                            ///  10BPP: 64-bit word contains 6 pixels p0-p5,
                                            ///         most significant 4 bits are set to 0. P0 is stored at LSB.
                                            ///  12BPP: 64-bit word contains 5 pixels p0-p4,
                                            ///         most significant 4 bits are set to 0. P0 is stored at LSB.
                                            ///  14BPP: 64-bit word contains 4 pixels p0-p3,
                                            ///         most significant 8 bits are set to 0. P0 is stored at LSB.
        ChiFormatRawMIPI         = 9,       ///< MIPI RAW formats based on MIPI CSI-2 specification.
                                            ///  8BPP: Each pixel occupies one bytes, starting at LSB.
                                            ///        Output width of image has no restrictions.
                                            ///  10BPP: 4 pixels are held in every 5 bytes.
                                            ///         The output width of image must be a multiple of 4 pixels.
        ChiFormatRawMIPI10       = ChiFormatRawMIPI,       ///< By default 10-bit, ChiFormatRawMIPI10
        ChiFormatRawPlain16      = 10,      ///< Plain16 RAW format. Single pixel is packed into two bytes,
                                            ///  little endian format.
                                            ///  Not all bits may be used as RAW data is
                                            ///  generally 8, or 10 bits per pixel.
                                            ///  Lower order bits are filled first.
        ChiFormatRawPlain16LSB10bit = ChiFormatRawPlain16, ///< By default 10-bit, ChiFormatRawPlain16LSB10bit
        ChiFormatRawMeta8BIT     = 11,      ///< Generic 8-bit raw meta data for internal camera usage.
        ChiFormatUBWCTP10        = 12,      ///< UBWC TP10 format (as per UBWC2.0 design specification)
        ChiFormatUBWCNV12        = 13,      ///< UBWC NV12 format (as per UBWC2.0 design specification)
        ChiFormatUBWCNV124R      = 14,      ///< UBWC NV12-4R format (as per UBWC2.0 design specification)
        ChiFormatYUV420NV12TP10  = 15,      ///< YUV 420 format 10bits per comp tight packed format.
        ChiFormatYUV420NV21TP10  = 16,      ///< YUV 420 format 10bits per comp tight packed format.
        ChiFormatYUV422NV16TP10  = 17,      ///< YUV 422 format 10bits per comp tight packed format.
        ChiFormatPD10            = 18,      ///< PD10 format
        ChiFormatRawMIPI8        = 19,      ///< 8BPP: Each pixel occupies one bytes, starting at LSB.
                                            ///  Output width of image has no restrictions.
        ChiFormatP010            = 22,      ///< P010 format.
        ChiFormatRawPlain64      = 23,      ///< Raw Plain 64
        ChiFormatUBWCP010        = 24,      ///< UBWC P010 format.
        ChiFormatRawDepth        = 25,      ///< 16 bit depth
        ChiFormatRawMIPI12       = 26,      ///< 12BPP: 2 pixels are held in every 3 bytes.
                                            ///  The output width of image must be a multiple of 2 pixels.
        ChiFormatRawPlain16LSB12bit = 27,   ///< Plain16 RAW format. 12 bits per pixel.
        ChiFormatRawMIPI14       = 28,      /// 14BPP: 4 pixels are held in every 7 bytes.
                                            ///  The output width of image must be a multiple of 4 pixels.
        ChiFormatRawPlain16LSB14bit = 29,   ///< Plain16 RAW format. 14 bits per pixel.
    } CHIBUFFERFORMAT;

Walking through the code logic with the logs

Source implementation

    // Function: decides whether the current stream configuration matches the given usecase
    // Parameters:
    //   pStreamConfig  - stream configuration
    //   pUsecase       - usecase information
    //   pPruneSettings - prune settings (used to exclude certain targets)
    // Returns: BOOL - TRUE if it matches, FALSE otherwise
    BOOL UsecaseSelector::IsMatchingUsecase(
        const camera3_stream_configuration_t* pStreamConfig,
        const ChiUsecase*                     pUsecase,
        const PruneSettings*                  pPruneSettings)
    {
        // The input parameters must not be NULL
        CHX_ASSERT(NULL != pStreamConfig);
        CHX_ASSERT(NULL != pUsecase);

        // Initialise the local variables
        UINT numStreams        = pStreamConfig->num_streams;    // number of streams
        BOOL isMatching        = FALSE;                         // match result
        UINT streamConfigMode  = pUsecase->streamConfigMode;    // stream configuration mode of the usecase
        BOOL bTargetVideoCheck = FALSE;                         // whether the video target check is needed
        BOOL bHasVideoTarget   = FALSE;                         // whether the usecase has a video target
        BOOL bHasVideoStream   = FALSE;                         // whether the stream configuration has a video stream
        UINT videoStreamIdx    = 0;                             // index of the video stream

        // Initialise the compare masks:
        //   compareTargetIndexMask - tracks the targets still to be compared (initially all targets)
        //   compareStreamIndexMask - tracks the streams still to be compared (initially all streams)
        UINT compareTargetIndexMask = ((1 << pUsecase->numTargets) - 1);
        UINT compareStreamIndexMask = ((1 << numStreams) - 1);

        // Check whether the stream configuration contains a video stream
        for (UINT streamIdx = 0; streamIdx < numStreams; streamIdx++)
        {
            if (IsVideoStream(pStreamConfig->streams[streamIdx]))
            {
                bHasVideoStream = TRUE;
                videoStreamIdx  = streamIdx;
                break;
            }
        }

        // Check whether the usecase contains a video target
        for (UINT targetIdx = 0; targetIdx < pUsecase->numTargets; targetIdx++)
        {
            ChiTarget* pTargetInfo = pUsecase->ppChiTargets[targetIdx];
            if (!CdkUtils::StrCmp(pTargetInfo->pTargetName, "TARGET_BUFFER_VIDEO"))
            {
                bHasVideoTarget = TRUE;
                break;
            }
        }

        // The special video check is needed only when there is both a video stream and a video target
        bTargetVideoCheck = bHasVideoStream && bHasVideoTarget;

        // Check that the stream configuration mode matches
        if (streamConfigMode == static_cast<UINT>(pStreamConfig->operation_mode))
        {
            // Iterate over all targets of the usecase
            for (UINT targetInfoIdx = 0; targetInfoIdx < pUsecase->numTargets; targetInfoIdx++)
            {
                ChiTarget* pTargetInfo = pUsecase->ppChiTargets[targetInfoIdx];

                // Check whether the current target should be pruned (according to the prune settings)
                if ((NULL != pUsecase->pTargetPruneSettings) &&
                    (TRUE == ShouldPrune(pPruneSettings, &pUsecase->pTargetPruneSettings[targetInfoIdx])))
                {
                    CHX_LOG_INFO("Ignoring Target Info because of prune settings: "
                                 "format[0]: %u targetType = %d streamWidth = %d streamHeight = %d",
                                 pTargetInfo->pBufferFormats[0],
                                 pTargetInfo->direction,
                                 pTargetInfo->dimension.maxWidth,
                                 pTargetInfo->dimension.maxHeight);
                    // Remove the current target from the compare mask
                    compareTargetIndexMask = ChxUtils::BitReset(compareTargetIndexMask, targetInfoIdx);
                    continue; // skip the pruned target
                }

                isMatching = FALSE; // reset the match flag

                // Check whether the current target is the video target
                BOOL bIsVideoTarget = !CdkUtils::StrCmp(pTargetInfo->pTargetName, "TARGET_BUFFER_VIDEO");

                // Iterate over all streams
                for (UINT streamId = 0; streamId < numStreams; streamId++)
                {
                    // Skip streams that have already been matched
                    if (FALSE == ChxUtils::IsBitSet(compareStreamIndexMask, streamId))
                    {
                        continue;
                    }

                    ChiStream* pStream = reinterpret_cast<ChiStream*>(pStreamConfig->streams[streamId]);
                    CHX_ASSERT(pStream != NULL);

                    if (NULL != pStream)
                    {
                        // Read the stream attributes
                        INT    streamFormat = pStream->format;
                        UINT   streamType   = pStream->streamType;
                        UINT32 streamWidth  = pStream->width;
                        UINT32 streamHeight = pStream->height;

                        CHX_LOG("streamType = %d streamFormat = %d streamWidth = %d streamHeight = %d",
                                streamType, streamFormat, streamWidth, streamHeight);

                        // Check whether the format matches
                        isMatching = IsMatchingFormat(reinterpret_cast<ChiStream*>(pStream),
                                                      pTargetInfo->numFormats,
                                                      pTargetInfo->pBufferFormats);

                        // Check whether the stream type (direction) matches
                        if (TRUE == isMatching)
                        {
                            isMatching = ((streamType == static_cast<UINT>(pTargetInfo->direction)) ? TRUE : FALSE);
                        }

                        // Check whether the resolution is within the target's range
                        if (TRUE == isMatching)
                        {
                            BufferDimension* pRange = &pTargetInfo->dimension;
                            if ((streamWidth  >= pRange->minWidth)  && (streamWidth  <= pRange->maxWidth) &&
                                (streamHeight >= pRange->minHeight) && (streamHeight <= pRange->maxHeight))
                            {
                                isMatching = TRUE;
                            }
                            else
                            {
                                isMatching = FALSE;
                            }
                        }

                        // Special handling for the video stream and the video target
                        if (bTargetVideoCheck)
                        {
                            BOOL bIsVideoStream = (videoStreamIdx == streamId);
                            if (bIsVideoTarget ^ bIsVideoStream) // XOR: both must be video, or neither
                            {
                                isMatching = FALSE;
                            }
                        }

                        // On a match, update the masks and leave the stream loop
                        if (TRUE == isMatching)
                        {
                            pTargetInfo->pChiStream = pStream; // associate the stream with the target
                            // Remove the matched target and stream from the compare masks
                            compareTargetIndexMask = ChxUtils::BitReset(compareTargetIndexMask, targetInfoIdx);
                            compareStreamIndexMask = ChxUtils::BitReset(compareStreamIndexMask, streamId);
                            break; // move on to the next target
                        }
                    }
                }

                // If the current target found no matching stream, the whole usecase does not match
                if (FALSE == isMatching)
                {
                    break;
                }
            }
        }

        // Final check: every stream must have been matched to a target
        if (TRUE == isMatching)
        {
            isMatching = (0 == compareStreamIndexMask) ? TRUE : FALSE;
        }

        // Log debug information
        CHX_LOG_VERBOSE("Target Mask: %x Stream Mask: %x - %s",
                        compareTargetIndexMask,
                        compareStreamIndexMask,
                        pUsecase->pUsecaseName);

        return isMatching;
    }
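The core of IsMatchingUsecase is a greedy pairing of targets to streams, tracked with a bitmask. The sketch below reduces it to that core under simplifying assumptions: `Stream`, `Target`, `Fits` and `IsMatching` are hypothetical names, each target accepts a single format, and the prune settings, the operation-mode comparison and the video XOR check are left out.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Stream { int format; int direction; std::uint32_t width; std::uint32_t height; };
struct Target { int format; int direction; std::uint32_t minW, maxW, minH, maxH; };

// Format, direction and dimension checks, in the same order as the real function.
bool Fits(const Stream& s, const Target& t)
{
    return (s.format == t.format) && (s.direction == t.direction) &&
           (s.width  >= t.minW) && (s.width  <= t.maxW) &&
           (s.height >= t.minH) && (s.height <= t.maxH);
}

bool IsMatching(const std::vector<Stream>& streams, const std::vector<Target>& targets)
{
    assert(streams.size() < 32);  // the mask is a 32-bit word

    // One bit per stream; a set bit means "not matched yet".
    std::uint32_t streamMask = (1u << streams.size()) - 1u;
    bool          matched    = false;

    for (std::size_t t = 0; t < targets.size(); ++t)
    {
        matched = false;
        for (std::size_t s = 0; s < streams.size(); ++s)
        {
            if (0 == (streamMask & (1u << s)))
            {
                continue;  // this stream already belongs to an earlier target
            }
            if (Fits(streams[s], targets[t]))
            {
                streamMask &= ~(1u << s);  // claim the stream for this target
                matched = true;
                break;                     // go on to the next target
            }
        }
        if (!matched)
        {
            break;  // a target without a stream means the usecase does not match
        }
    }

    // Every target found a stream, and no stream is left over.
    return matched && (0 == streamMask);
}
```

Two consequences follow from this shape: a stream that is too large for its only candidate target fails the whole usecase, and so does a configuration with more streams than targets, because the leftover bit keeps the mask non-zero.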
