Scenario:

Orchestrator is used here to manage MySQL high availability.

- Install the jq package

Install it on every machine in the orchestrator cluster.

# wget http://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm

# rpm -ivh epel-release-latest-7.noarch.rpm

# yum repolist ### check that EPEL now appears in the repository list

# yum install -y jq

- Add hostname resolution

If Orchestrator manages instances by hostname, edit /etc/hosts (this approach is not used here).

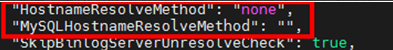

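Note: if the cluster is managed directly by IP rather than by hostname, the /etc/hosts entries are not needed. Instead, two parameters must be set in the orchestrator configuration file. Presumably these are the two set in the example configuration in this article: "HostnameResolveMethod": "none" and "MySQLHostnameResolveMethod": "".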

- Backend database setup

Create database orchestrator;

CREATE USER ‘orchestrator’@‘%’ IDENTIFIED BY ‘orchestrator’;

GRANT ALL ON orchestrator.* TO ‘orchestrator’@‘%’; -

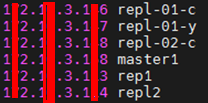

被管理的mysql集群设置

1) Grant privileges to the orchestrator user

CREATE USER 'orchestrator'@'%' IDENTIFIED BY 'orchestrator';

GRANT SUPER, PROCESS, REPLICATION SLAVE, RELOAD ON *.* TO 'orchestrator'@'%';

GRANT SELECT ON mysql.slave_master_info TO 'orchestrator'@'%';

GRANT SELECT ON performance_schema.replication_group_members TO 'orchestrator'@'%';

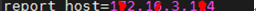

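2) Set the report_host parameter

Because orchestrator manages instances by IP here, the MySQL configuration file needs the report_host parameter.

If orchestrator is given a master but cannot discover its replicas, add "report_host=ip" to the configuration file of the managed MySQL service, then restart MySQL for the change to take effect.

Discovery of replicas from the master is controlled by the DiscoverByShowSlaveHosts parameter. When it is true, orchestrator first tries to discover replicas with the SHOW SLAVE HOSTS command.

If a replica has a correct report_host, the Host column of SHOW SLAVE HOSTS shows the right IP, and the replica is discovered directly from that output.

If a replica has no report_host, the Host column of SHOW SLAVE HOSTS is empty, and the replica is discovered through the processlist instead.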

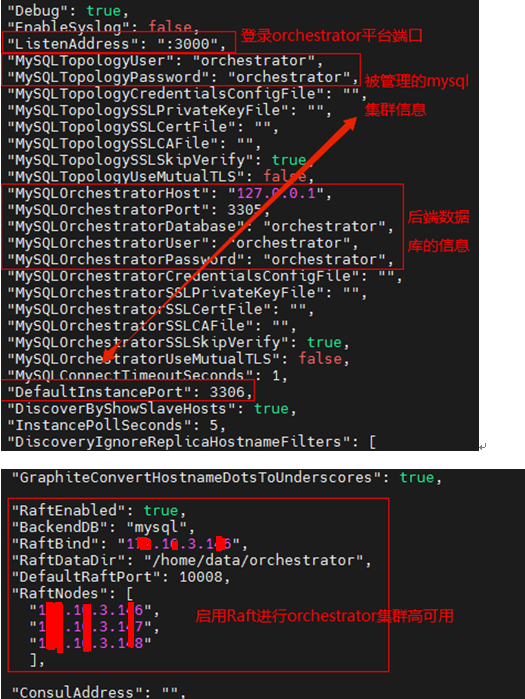
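The discovery rules above can be summarized in a small sketch. This is not orchestrator's actual code: the function name `discover_replicas`, the shape of its inputs, and the use of a single `default_port` for replicas found through the processlist are all assumptions made for illustration. It also simplifies the mixed case by falling back to the processlist only when SHOW SLAVE HOSTS yields no usable host at all.

```python
def discover_replicas(show_slave_hosts_rows, processlist_hosts, default_port,
                      discover_by_show_slave_hosts=True):
    """Return a list of (host, port) replica candidates.

    show_slave_hosts_rows: rows of SHOW SLAVE HOSTS as dicts with "Host" and "Port".
    processlist_hosts: "ip:client_port" strings of the replication dump threads.
    default_port: port assumed for replicas found via the processlist (assumption).
    """
    replicas = []
    if discover_by_show_slave_hosts:
        for row in show_slave_hosts_rows:
            host = (row.get("Host") or "").strip()
            if host:  # report_host was set on this replica
                replicas.append((host, int(row["Port"])))
    if replicas:
        return replicas

    # Fallback: Host was empty (no report_host), so use the processlist.
    # The port shown there is the client-side port, so it is discarded.
    for entry in processlist_hosts:
        ip = entry.rsplit(":", 1)[0]
        replicas.append((ip, default_port))
    return replicas


# Replica with report_host set: found directly from SHOW SLAVE HOSTS.
with_report_host = discover_replicas(
    [{"Host": "10.0.0.2", "Port": 3305}], [], default_port=3305)

# Replica without report_host: Host is empty, so the processlist is used.
without_report_host = discover_replicas(
    [{"Host": "", "Port": 3305}], ["10.0.0.3:51234"], default_port=3305)
```

The addresses `10.0.0.2` and `10.0.0.3` are placeholders, not hosts from this setup.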

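5. Configuration file

The installation package ships a template:

/usr/local/orchestrator/orchestrator-sample.conf.json. Fill in its parameters to suit your environment, rename it to orchestrator.conf.json, and place it under /etc.

The basic parameters to set are described below: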

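ListenAddress: HTTP listen address and port of the web UI

MySQLTopologyUser: user on the managed MySQL instances (plaintext)

MySQLTopologyPassword: password for the managed MySQL instances (plaintext)

MySQLOrchestratorHost: address of the orchestrator backend database

MySQLOrchestratorPort: port of the orchestrator backend database

MySQLOrchestratorDatabase: name of the orchestrator backend database

MySQLOrchestratorUser: user name for the orchestrator backend database (plaintext)

MySQLOrchestratorPassword: password for the orchestrator backend database (plaintext)

DefaultInstancePort: default port of the managed MySQL instances

RaftEnabled: whether to enable Raft, which keeps orchestrator itself highly available

RaftDataDir: Raft data directory

BackendDB: type of the backend database

RaftBind: Raft bind address; on each machine, set it to that machine's own IP

DefaultRaftPort: default port for Raft traffic between nodes

RaftNodes: list of Raft nodes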

Example:

{
  "Debug": true,
  "EnableSyslog": false,
  "ListenAddress": ":3000",
  "MySQLTopologyUser": "orchestrator",
  "MySQLTopologyPassword": "orch`123qwer",
  "MySQLTopologyCredentialsConfigFile": "",
  "MySQLTopologySSLPrivateKeyFile": "",
  "MySQLTopologySSLCertFile": "",
  "MySQLTopologySSLCAFile": "",
  "MySQLTopologySSLSkipVerify": true,
  "MySQLTopologyUseMutualTLS": false,
  "MySQLOrchestratorHost": "127.0.0.1",
  "MySQLOrchestratorPort": 3305,
  "MySQLOrchestratorDatabase": "orchestrator",
  "MySQLOrchestratorUser": "orchestrator",
  "MySQLOrchestratorPassword": "orchestrator",
  "MySQLOrchestratorCredentialsConfigFile": "",
  "MySQLOrchestratorSSLPrivateKeyFile": "",
  "MySQLOrchestratorSSLCertFile": "",
  "MySQLOrchestratorSSLCAFile": "",
  "MySQLOrchestratorSSLSkipVerify": true,
  "MySQLOrchestratorUseMutualTLS": false,
  "MySQLConnectTimeoutSeconds": 1,
  "DefaultInstancePort": 3305,
  "DiscoverByShowSlaveHosts": true,
  "InstancePollSeconds": 5,
  "DiscoveryIgnoreReplicaHostnameFilters": [
    "a_host_i_want_to_ignore[.]example[.]com",
    ".*[.]ignore_all_hosts_from_this_domain[.]example[.]com",
    "a_host_with_extra_port_i_want_to_ignore[.]example[.]com:3307"
  ],
  "UnseenInstanceForgetHours": 240,
  "SnapshotTopologiesIntervalHours": 0,
  "InstanceBulkOperationsWaitTimeoutSeconds": 10,
  "HostnameResolveMethod": "none",
  "MySQLHostnameResolveMethod": "",
  "SkipBinlogServerUnresolveCheck": true,
  "ExpiryHostnameResolvesMinutes": 60,
  "RejectHostnameResolvePattern": "",
  "ReasonableReplicationLagSeconds": 10,
  "ProblemIgnoreHostnameFilters": [],
  "VerifyReplicationFilters": false,
  "ReasonableMaintenanceReplicationLagSeconds": 20,
  "CandidateInstanceExpireMinutes": 60,
  "AuditLogFile": "",
  "AuditToSyslog": false,
  "RemoveTextFromHostnameDisplay": ".mydomain.com:3306",
  "ReadOnly": false,
  "AuthenticationMethod": "basic",
  "HTTPAuthUser": "orchestrator",
  "HTTPAuthPassword": "orchestrator",
  "AuthUserHeader": "",
  "PowerAuthUsers": ["*"],
  "ClusterNameToAlias": {"127.0.0.1": "test suite"},
  "ReplicationLagQuery": "",
  "DetectClusterAliasQuery": "SELECT SUBSTRING_INDEX(@@hostname, '.', 1)",
  "DetectClusterDomainQuery": "",
  "DetectInstanceAliasQuery": "",
  "DetectPromotionRuleQuery": "",
  "DataCenterPattern": "[.]([^.]+)[.][^.]+[.]mydomain[.]com",
  "PhysicalEnvironmentPattern": "[.]([^.]+[.][^.]+)[.]mydomain[.]com",
  "PromotionIgnoreHostnameFilters": [],
  "DetectSemiSyncEnforcedQuery": "",
  "ServeAgentsHttp": false,
  "AgentsServerPort": ":3001",
  "AgentsUseSSL": false,
  "AgentsUseMutualTLS": false,
  "AgentSSLSkipVerify": false,
  "AgentSSLPrivateKeyFile": "",
  "AgentSSLCertFile": "",
  "AgentSSLCAFile": "",
  "AgentSSLValidOUs": [],
  "UseSSL": false,
  "UseMutualTLS": false,
  "SSLSkipVerify": false,
  "SSLPrivateKeyFile": "",
  "SSLCertFile": "",
  "SSLCAFile": "",
  "SSLValidOUs": [],
  "URLPrefix": "",
  "StatusEndpoint": "/api/status",
  "StatusSimpleHealth": true,
  "StatusOUVerify": false,
  "AgentPollMinutes": 60,
  "UnseenAgentForgetHours": 6,
  "StaleSeedFailMinutes": 60,
  "SeedAcceptableBytesDiff": 8192,
  "PseudoGTIDPattern": "",
  "PseudoGTIDPatternIsFixedSubstring": false,
  "PseudoGTIDMonotonicHint": "asc:",
  "DetectPseudoGTIDQuery": "",
  "BinlogEventsChunkSize": 10000,
  "SkipBinlogEventsContaining": [],
  "ReduceReplicationAnalysisCount": true,
  "FailureDetectionPeriodBlockMinutes": 1,
  "FailMasterPromotionOnLagMinutes": 0,
  "RecoveryPeriodBlockSeconds": 60,
  "RecoveryIgnoreHostnameFilters": [],
  "RecoverMasterClusterFilters": ["*"],
  "RecoverIntermediateMasterClusterFilters": ["*"],
  "OnFailureDetectionProcesses": [
    "echo 'Detected {failureType} on {failureCluster}. Affected replicas: {countSlaves}' >> /tmp/recovery.log"
  ],
  "PreGracefulTakeoverProcesses": [
    "echo 'Planned takeover about to take place on {failureCluster}. Master will switch to read_only' >> /tmp/recovery.log"
  ],
  "PreFailoverProcesses": [
    "echo 'Will recover from {failureType} on {failureCluster}' >> /tmp/recovery.log"
  ],
  "PostFailoverProcesses": [
    "echo '(for all types) Recovered from {failureType} on {failureCluster}. Failed: {failedHost}:{failedPort}; Successor: {successorHost}:{successorPort}' >> /tmp/recovery.log",
    "source /usr/local/orchestrator/script/orch_hook.sh {failureType} {failureClusterAlias} {failedHost} {successorHost} >> /tmp/orch_vip.log",
    "source /usr/local/orchestrator/script/dble.sh {failureType} {failureClusterAlias} {failedHost} {successorHost} >> /tmp/orch_dble.log"
  ],
  "PostUnsuccessfulFailoverProcesses": [],
  "PostMasterFailoverProcesses": [
    "echo 'Recovered from {failureType} on {failureCluster}. Failed: {failedHost}:{failedPort}; Promoted: {successorHost}:{successorPort}' >> /tmp/recovery.log"
  ],
  "PostIntermediateMasterFailoverProcesses": [
    "echo 'Recovered from {failureType} on {failureCluster}. Failed: {failedHost}:{failedPort}; Successor: {successorHost}:{successorPort}' >> /tmp/recovery.log"
  ],
  "PostGracefulTakeoverProcesses": [
    "echo 'Planned takeover complete' >> /tmp/recovery.log"
  ],
  "CoMasterRecoveryMustPromoteOtherCoMaster": true,
  "DetachLostSlavesAfterMasterFailover": true,
  "ApplyMySQLPromotionAfterMasterFailover": true,
  "PreventCrossDataCenterMasterFailover": false,
  "PreventCrossRegionMasterFailover": false,
  "MasterFailoverDetachReplicaMasterHost": false,
  "MasterFailoverLostInstancesDowntimeMinutes": 0,
  "PostponeReplicaRecoveryOnLagMinutes": 0,
  "OSCIgnoreHostnameFilters": [],
  "GraphiteAddr": "",
  "GraphitePath": "",
  "GraphiteConvertHostnameDotsToUnderscores": true,
  "RaftEnabled": true,
  "BackendDB": "mysql",
  "RaftBind": "1xx.1x.x.146",
  "RaftDataDir": "/home/data/orchestrator",
  "DefaultRaftPort": 10008,
  "RaftNodes": ["1xx.1x.x.146", "1xx.1x.x.147", "1xx.1x.x.148"],
  "ConsulAddress": "",
  "ConsulAclToken": "",
  "ConsulKVStoreProvider": "consul"
}
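Since every node shares the same configuration except for RaftBind, a small script can stamp out per-node copies and run a few sanity checks before the file is deployed. The following is a minimal sketch, not part of orchestrator: the helper names (`load_config`, `check_config`, `render_for_node`) are hypothetical, and the rule that RaftBind must appear verbatim in RaftNodes is an assumption that matches the example above but may not hold in other network layouts.

```python
import json

# The basic parameters described in this article.
REQUIRED_KEYS = [
    "ListenAddress", "MySQLTopologyUser", "MySQLTopologyPassword",
    "MySQLOrchestratorHost", "MySQLOrchestratorPort",
    "MySQLOrchestratorDatabase", "MySQLOrchestratorUser",
    "MySQLOrchestratorPassword", "DefaultInstancePort", "RaftEnabled",
    "RaftDataDir", "BackendDB", "RaftBind", "DefaultRaftPort", "RaftNodes",
]


def load_config(path):
    """Parse an orchestrator JSON configuration file into a dict."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def check_config(config):
    """Return a list of problems; an empty list means the basic checks passed."""
    problems = [f"missing key: {key}" for key in REQUIRED_KEYS if key not in config]
    if config.get("RaftEnabled"):
        # Assumption: each node's RaftBind is one of the RaftNodes entries.
        if config.get("RaftBind") not in config.get("RaftNodes", []):
            problems.append("RaftBind is not listed in RaftNodes")
    return problems


def render_for_node(template, node_ip):
    """Copy the shared template and set RaftBind to this node's own IP."""
    config = dict(template)
    config["RaftBind"] = node_ip
    return config
```

For example, `check_config(load_config("/etc/orchestrator.conf.json"))` returns an empty list when all the basic parameters are present and RaftBind is one of the RaftNodes, and `render_for_node(template, ip)` can be called once per node to produce that node's file contents.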

-

启动服务

在每台orchestrator集群机器的orchestrator安装目录下,运行:

./orchestrator --debug --config=/etc/orchestrator.conf.json http -

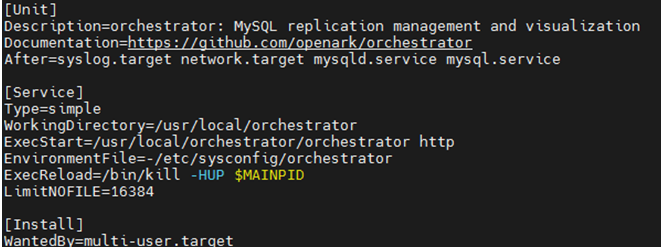

systemctl托管服务

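Extracting the orchestrator archive places a unit file, orchestrator.service, under /usr/local/etc/systemd/system. Move it to /usr/lib/systemd/system/orchestrator.service, then run systemctl daemon-reload so that systemd picks up the new unit. Its contents are as follows:

Start: systemctl start orchestrator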

- Log in to the web UI

Login URL: 1xx.1x.3.146:3000, 1xx.1x.3.147:3000, or 1xx.1x.3.148:3000
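Before opening a browser, it can be handy to probe each node over HTTP. The sketch below builds one URL per node from the ListenAddress and StatusEndpoint values in the example configuration and sends the basic-auth credentials configured there. The helper names `status_urls` and `fetch_status` are hypothetical, the node addresses are placeholders, and how a node answers depends on the setup (for instance on its Raft role), so treat the response as informational.

```python
import base64
import urllib.request


def status_urls(nodes, listen_address=":3000", status_endpoint="/api/status"):
    """Build one status URL per orchestrator node."""
    port = listen_address.rsplit(":", 1)[-1]
    return [f"http://{node}:{port}{status_endpoint}" for node in nodes]


def fetch_status(url, user, password, timeout=5):
    """Request a status URL with HTTP basic auth; return (status code, body)."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    request = urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status, response.read().decode("utf-8")


# Placeholder addresses; substitute the real orchestrator node IPs.
urls = status_urls(["10.0.0.1", "10.0.0.2", "10.0.0.3"])
```

Calling `fetch_status(urls[0], "orchestrator", "orchestrator")` would then query the first node with the HTTPAuthUser and HTTPAuthPassword values from the example configuration.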
