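Cilium Hands-On Lab: Road to Mastery, Part 15. Isovalent Enterprise for Cilium: Network Policies

- 1. Environment
- 2. Deploying the test environment
- 3. Default rules
- 3.1 Testing the default rules
- 3.2 Quiz
- 4. Network policy visualization
- 4.1 Creating a policy with the visualizer
- 4.2 Quiz
- 5. Testing the policy
- 5.1 Applying the policy
- 5.2 Observing traffic
- 5.3 Observing with the Hubble UI
- 5.4 Quiz
- 6. Updating network policies from Hubble flows
- 6.1 Creating a new policy
- 6.2 Saving and applying the policy
- 6.3 Testing the policy
- 6.4 Testing denied traffic
- 6.5 Quiz
- 7. Boss fight
- 7.1 Challenge
- 7.2 Solution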

1. Environment

Lab environment URL:

https://isovalent.com/labs/cilium-network-policies/

The cluster is deployed with Kind: 1 control-plane node and 2 workers.

root@server:~# yq /etc/kind/${KIND_CONFIG}.yaml

---
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
  - role: control-plane
    extraPortMappings:
      # localhost.run proxy
      - containerPort: 32042
        hostPort: 32042
      # Hubble relay
      - containerPort: 31234
        hostPort: 31234
      # Hubble UI
      - containerPort: 31235
        hostPort: 31235
  - role: worker
  - role: worker
networking:
  disableDefaultCNI: true
  kubeProxyMode: none

root@server:~# echo $HUBBLE_SERVER

localhost:31234

root@server:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

kind-control-plane Ready control-plane 105m v1.31.0

kind-worker Ready <none> 105m v1.31.0

kind-worker2 Ready <none> 105m v1.31.0

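2. Deploying the test environment

Let's deploy a simple demo application to explore the network security capabilities of Isovalent Enterprise for Cilium. We will create 3 namespaces and deploy 3 services on top of them: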

kubectl create ns tenant-a

kubectl create ns tenant-b

kubectl create ns tenant-c

kubectl create -f https://docs.isovalent.com/public/tenant-services.yaml -n tenant-a

kubectl create -f https://docs.isovalent.com/public/tenant-services.yaml -n tenant-b

kubectl create -f https://docs.isovalent.com/public/tenant-services.yaml -n tenant-c

While the application starts, let's check that all Cilium components have been deployed correctly. Note that the result may take a few seconds to appear!

root@server:~# cilium status --wait
    /¯¯\
 /¯¯\__/¯¯\    Cilium:             OK
 \__/¯¯\__/    Operator:           OK
 /¯¯\__/¯¯\    Envoy DaemonSet:    OK
 \__/¯¯\__/    Hubble Relay:       OK
    \__/       ClusterMesh:        disabled

DaemonSet              cilium             Desired: 3, Ready: 3/3, Available: 3/3
DaemonSet              cilium-envoy       Desired: 3, Ready: 3/3, Available: 3/3
Deployment             cilium-operator    Desired: 2, Ready: 2/2, Available: 2/2
Deployment             hubble-relay       Desired: 1, Ready: 1/1, Available: 1/1
Deployment             hubble-ui          Desired: 1, Ready: 1/1, Available: 1/1
Containers:            cilium             Running: 3
                       cilium-envoy       Running: 3
                       cilium-operator    Running: 2
                       clustermesh-apiserver
                       hubble-relay       Running: 1
                       hubble-ui          Running: 1
Cluster Pods:          11/11 managed by Cilium
Helm chart version:
Image versions         cilium             quay.io/isovalent/cilium:v1.17.1-cee.beta.1: 3
                       cilium-envoy       quay.io/cilium/cilium-envoy:v1.31.5-1739264036-958bef243c6c66fcfd73ca319f2eb49fff1eb2ae@sha256:fc708bd36973d306412b2e50c924cd8333de67e0167802c9b48506f9d772f521: 3
                       cilium-operator    quay.io/isovalent/operator-generic:v1.17.1-cee.beta.1: 2
                       hubble-relay       quay.io/isovalent/hubble-relay:v1.17.1-cee.beta.1: 1
                       hubble-ui          quay.io/isovalent/hubble-ui-enterprise-backend:v1.3.2: 1
                       hubble-ui          quay.io/isovalent/hubble-ui-enterprise:v1.3.2: 1
Configuration:         Unsupported feature(s) enabled: EnvoyDaemonSet (Limited). Please contact Isovalent Support for more information on how to grant an exception.

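If everything is healthy, the first 3 lines should report OK. Some services may not be available yet; wait a moment and try again.

You can also verify that you can connect to Hubble Relay (on port 31234 in this lab) with: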

root@server:~# hubble status

Healthcheck (via localhost:31234): Ok

Current/Max Flows: 3,419/12,285 (27.83%)

Flows/s: 20.35

Connected Nodes: 3/3

And that all nodes are correctly managed in Hubble:

root@server:~# hubble list nodes

NAME STATUS AGE FLOWS/S CURRENT/MAX-FLOWS

kind-control-plane Connected 3m15s 1.88 454/4095 ( 11.09%)

kind-worker Connected 3m14s 2.74 628/4095 ( 15.34%)

kind-worker2 Connected 3m15s 13.11 2665/4095 ( 65.08%)

root@server:~#

Before continuing, let's check that all pods have been deployed:

root@server:~# kubectl get pods --all-namespaces | grep "tenant"

tenant-a backend-service 1/1 Running 0 79s

tenant-a frontend-service 1/1 Running 0 79s

tenant-b backend-service 1/1 Running 0 78s

tenant-b frontend-service 1/1 Running 0 78s

tenant-c backend-service 1/1 Running 0 78s

tenant-c frontend-service 1/1 Running 0 78s

3. Default rules

3.1 Testing the default rules

From tenant-a, we can use curl to connect to various services.

First, let's see whether the frontend-service pod can reach the backend-service service:

root@server:~# kubectl exec -n tenant-a frontend-service -- curl -sI backend-service.tenant-a

HTTP/1.1 200 OK

X-Powered-By: Express

Vary: Origin, Accept-Encoding

Access-Control-Allow-Credentials: true

Accept-Ranges: bytes

Cache-Control: public, max-age=0

Last-Modified: Thu, 05 Oct 2023 14:24:44 GMT

ETag: W/"809-18b003a03e0"

Content-Type: text/html; charset=UTF-8

Content-Length: 2057

Date: Fri, 30 May 2025 00:32:32 GMT

Connection: keep-alive

Keep-Alive: timeout=5

We receive an HTTP/1.1 200 OK response, which shows that traffic flows without restriction.

Now let's test traffic to the backend-service service in the tenant-b namespace:

root@server:~# kubectl exec -n tenant-a frontend-service -- curl -sI backend-service.tenant-b

HTTP/1.1 200 OK

X-Powered-By: Express

Vary: Origin, Accept-Encoding

Access-Control-Allow-Credentials: true

Accept-Ranges: bytes

Cache-Control: public, max-age=0

Last-Modified: Thu, 05 Oct 2023 14:24:44 GMT

ETag: W/"809-18b003a03e0"

Content-Type: text/html; charset=UTF-8

Content-Length: 2057

Date: Fri, 30 May 2025 00:36:39 GMT

Connection: keep-alive

Keep-Alive: timeout=5

Again, the traffic is allowed. Finally, check access to a service outside the cluster, for example api.twitter.com:

root@server:~# kubectl exec -n tenant-a frontend-service -- curl -sI api.twitter.com

HTTP/1.1 301 Moved Permanently

Date: Fri, 30 May 2025 00:35:07 GMT

Connection: keep-alive

location: https://api.twitter.com/

x-connection-hash: 1cc2475a532213170f64d1fe4a4c9001be570ef332f3191a37b4cdd7ce23b402

cf-cache-status: DYNAMIC

Set-Cookie: __cf_bm=jslvhdd_RmYVfybX6fDVQnNj_.sn_gbCdv5eIYmXBU8-1748565307-1.0.1.1-k4zQCxDXmmfqx9Kg6nGLrGhR.M1ixe8bZI2434SQ8IvmLwJrb5tnqb.36DjodWCSRl4Sz2y0.WQI4_3bHUp2EvupVvOr5aY7pRr43H92dvk; path=/; expires=Fri, 30-May-25 01:05:07 GMT; domain=.twitter.com; HttpOnly

Server: cloudflare tsa_b

CF-RAY: 947a2650cf8e9ee3-CDG

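This request returns a 301 response, which also shows that traffic is flowing.

We can see that, by default, all traffic from pods in the tenant-a namespace is allowed:

- within the tenant-a namespace
- to services in other namespaces (e.g. tenant-b)
- to external endpoints outside the Kubernetes cluster (e.g. api.twitter.com)

The Hubble CLI connects to the Hubble Relay component in the cluster and retrieves logs called "flows". This command-line tool then lets you visualize and filter those flows.

Visualize the TCP traffic sent by the frontend-service pod in the tenant-a namespace with: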

root@server:~# hubble observe --from-pod tenant-a/frontend-service --protocol tcp

May 30 00:34:48.257: tenant-a/frontend-service (ID:61166) <> tenant-b/backend-service:80 (ID:32849) post-xlate-fwd TRANSLATED (TCP)

May 30 00:34:48.257: tenant-a/frontend-service:41388 (ID:61166) -> tenant-b/backend-service:80 (ID:32849) to-endpoint FORWARDED (TCP Flags: SYN)

May 30 00:34:48.257: tenant-a/frontend-service:41388 (ID:61166) -> tenant-b/backend-service:80 (ID:32849) to-endpoint FORWARDED (TCP Flags: ACK)

May 30 00:34:48.257: tenant-a/frontend-service:41388 (ID:61166) -> tenant-b/backend-service:80 (ID:32849) to-endpoint FORWARDED (TCP Flags: ACK, PSH)

May 30 00:34:48.260: tenant-a/frontend-service:41388 (ID:61166) <> tenant-b/backend-service (ID:32849) pre-xlate-rev TRACED (TCP)

May 30 00:34:48.265: tenant-a/frontend-service:41388 (ID:61166) -> tenant-b/backend-service:80 (ID:32849) to-endpoint FORWARDED (TCP Flags: ACK, FIN)

May 30 00:34:48.267: tenant-a/frontend-service:41388 (ID:61166) -> tenant-b/backend-service:80 (ID:32849) to-endpoint FORWARDED (TCP Flags: ACK)

May 30 00:35:07.000: tenant-a/frontend-service:51098 (ID:61166) -> 172.66.0.227:80 (world) to-stack FORWARDED (TCP Flags: SYN)

May 30 00:35:07.005: tenant-a/frontend-service:51098 (ID:61166) -> 172.66.0.227:80 (world) to-stack FORWARDED (TCP Flags: ACK)

May 30 00:35:07.005: tenant-a/frontend-service:51098 (ID:61166) -> 172.66.0.227:80 (world) to-stack FORWARDED (TCP Flags: ACK, PSH)

May 30 00:35:07.109: tenant-a/frontend-service:51098 (ID:61166) -> 172.66.0.227:80 (world) to-stack FORWARDED (TCP Flags: ACK, FIN)

May 30 00:35:07.114: tenant-a/frontend-service:51098 (ID:61166) -> 172.66.0.227:80 (world) to-stack FORWARDED (TCP Flags: ACK)

May 30 00:36:39.823: tenant-a/frontend-service (ID:61166) <> 10.96.16.75:80 (world) pre-xlate-fwd TRACED (TCP)

May 30 00:36:39.823: tenant-a/frontend-service (ID:61166) <> tenant-b/backend-service:80 (ID:32849) post-xlate-fwd TRANSLATED (TCP)

May 30 00:36:39.823: tenant-a/frontend-service:33420 (ID:61166) -> tenant-b/backend-service:80 (ID:32849) to-endpoint FORWARDED (TCP Flags: SYN)

May 30 00:36:39.823: tenant-a/frontend-service:33420 (ID:61166) -> tenant-b/backend-service:80 (ID:32849) to-endpoint FORWARDED (TCP Flags: ACK)

May 30 00:36:39.823: tenant-a/frontend-service:33420 (ID:61166) -> tenant-b/backend-service:80 (ID:32849) to-endpoint FORWARDED (TCP Flags: ACK, PSH)

May 30 00:36:39.823: tenant-a/frontend-service:33420 (ID:61166) <> tenant-b/backend-service (ID:32849) pre-xlate-rev TRACED (TCP)

May 30 00:36:39.824: tenant-a/frontend-service:33420 (ID:61166) -> tenant-b/backend-service:80 (ID:32849) to-endpoint FORWARDED (TCP Flags: ACK, FIN)

May 30 00:36:39.825: tenant-a/frontend-service:33420 (ID:61166) -> tenant-b/backend-service:80 (ID:32849) to-endpoint FORWARDED (TCP Flags: ACK)

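You should see a list of log entries, each containing:

- a timestamp
- the source pod, with its namespace, port, and Cilium identity
- the flow direction (->, <-, or sometimes <> when the direction cannot be determined)
- the destination pod, with its namespace, port, and Cilium identity
- the trace observation point (e.g. to-endpoint, to-stack, to-overlay)
- the verdict (e.g. FORWARDED or DROPPED)
- the protocol (e.g. UDP, TCP), optionally with flags

Identify the three requests in the flows (to backend-service.tenant-a, api.twitter.com, and backend-service.tenant-b).

The flows confirm that all three requests were forwarded to their destinations, since every flow is marked FORWARDED.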
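To make the field list above concrete, here is a minimal sketch that splits one line of Hubble's compact text output into those fields. The regular expression and the `parse_flow` helper are illustrative assumptions based only on the sample lines shown in this post, not an official parser; for real tooling, Hubble's JSON output (`hubble observe -o json`) is likely a more robust input.

```python
import re

# Assumed layout of one compact flow line, inferred from the samples above:
# "<timestamp>: <src> (<identity>) <dir> <dst> (<identity>) <observation> <VERDICT> (<summary>)"
FLOW_RE = re.compile(
    r"^(?P<timestamp>\w{3} +\d+ [\d:.]+): "
    r"(?P<source>\S+) \((?P<source_identity>[^)]*)\) "
    r"(?P<direction>->|<-|<>) "
    r"(?P<destination>\S+) \((?P<destination_identity>[^)]*)\) "
    r"(?P<observation>.+?) (?P<verdict>[A-Z]+) "
    r"\((?P<summary>.*)\)$"
)


def parse_flow(line):
    """Return the fields of one compact flow line as a dict, or None if it does not match."""
    match = FLOW_RE.match(line.strip())
    return match.groupdict() if match else None


if __name__ == "__main__":
    sample = (
        "May 30 00:34:48.257: tenant-a/frontend-service:41388 (ID:61166) -> "
        "tenant-b/backend-service:80 (ID:32849) to-endpoint FORWARDED (TCP Flags: SYN)"
    )
    # Print each parsed field on its own line.
    for key, value in parse_flow(sample).items():
        print(f"{key:22} {value}")
```

Lines that are not flows, such as the occasional EVENTS LOST notice, simply return None and can be skipped.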

3.2 Quiz

With no policy in place, every statement is clearly true:

√ All traffic is allowed from the namespace's pod to other pods in the same namespace

√ All traffic is allowed from the namespace's pod to pods in other namespaces

√ All traffic is allowed from the namespace's pod to external addresses

√ All traffic is allowed from pods in other namespaces to the namespace's pods

√ All traffic is allowed from external addresses to the namespace's pods

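4. Network policy visualization

4.1 Creating a policy with the visualizer

- Click the Policies menu item on the left.
- On the left side of the menu there is now a drop-down for selecting a namespace.
- Select the tenant-a namespace. Since this namespace has no network policies yet, the main pane is empty and the policy editor shows a note saying "No policy to show".
- In the bottom-right corner you can see a list of flows, all marked forwarded, corresponding to the traffic Hubble knows about for this namespace.

Since everything is currently allowed in this namespace, let's create a policy!

- Click the "Create empty policy" button.
- A new policy appears in the main pane, with its YAML representation in the editor pane in the lower corner.
- The center box in the visualizer corresponds to the pods targeted by the policy. All arrows connected to this box are currently green, because the policy allows all traffic for now.

📝 Click the button in the top-right corner of the center box and specify the following values:

- Policy name: default
- Policy namespace: tenant-a
- Endpoint selector: (leave empty; an empty pod selector matches all pods in the namespace)

Click the green Save button below it.

This updates the YAML document in the editor pane, which should now be: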

apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: default
  namespace: tenant-a
spec:
  endpointSelector: {}

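In the center box, click the 🔓 Ingress Default Allow and 🔓 Egress Default Allow buttons at its bottom-left and bottom-right corners respectively. This updates the policy with rules that drop all ingress and egress connections for every pod in the tenant-a namespace.

All arrows in the visualizer have now turned red, and the YAML spec should be:

spec:
  endpointSelector: {}
  ingress:
    - {}
  egress:
    - {}

Now that we have a default deny, we can start allowing specific traffic in the policy.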

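We want to allow the following communication patterns:

- Ingress from workloads in the same namespace.
- Egress to workloads in the same namespace.
- Egress from workloads in the namespace to KubeDNS/CoreDNS, so that pods in the namespace can perform DNS requests.

To do this, on the left side of the visualizer (the ingress side), find the second box, titled {} In Namespace, and click the Any pod text. In the pop-up, click Allow from any pod. This adds a green arrow from the {} In Namespace box to the center box and adds a new ingress rule to the YAML policy manifest:

ingress:
  - fromEndpoints:
      - {}

Repeat this step for the In Namespace box on the right side (the egress side).

Then, in the In Cluster box on the right side (egress), click the Kubernetes DNS section and click the Allow rule button in the pop-up. Hover over the same Kubernetes DNS section again and toggle the DNS proxy option. This adds a complete block to the YAML manifest that allows DNS (UDP/53) traffic to the kube-system/kube-dns pods.

There should now be three green arrows in the visualizer, and the YAML manifest should look like this: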

apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: default
  namespace: tenant-a
spec:
  endpointSelector: {}
  ingress:
    - fromEndpoints:
        - {}
  egress:
    - toEndpoints:
        - {}
    - toEndpoints:
        - matchLabels:
            io.kubernetes.pod.namespace: kube-system
            k8s-app: kube-dns
      toPorts:
        - ports:
            - port: "53"
              protocol: UDP
          rules:
            dns:
              - matchPattern: "*"

Now we want to save the policy to our cluster. In the editor pane, select all of the YAML and copy it, then save it to a file named tenant-a-default-policy.yaml.
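As a way to reason about what this policy should allow before applying it, the egress rules can be written down as a toy decision function. This is only a sketch under stated assumptions: `egress_allowed` and its parameters are hypothetical names, the function covers egress only, and it is not how Cilium evaluates policy internally. It relies on the documented behaviour that an empty selector in a namespaced CiliumNetworkPolicy matches endpoints in the policy's own namespace.

```python
# Toy model of the egress rules of the "default" policy; for reasoning only.
POLICY_NAMESPACE = "tenant-a"


def egress_allowed(dst_namespace, dst_labels, port, protocol):
    """Return True if the policy's egress rules would allow this destination.

    dst_namespace is None for destinations outside the cluster.
    """
    # Rule 1: "toEndpoints: [{}]" selects every endpoint in the policy's
    # own namespace, on any port and protocol.
    if dst_namespace == POLICY_NAMESPACE:
        return True
    # Rule 2: kube-dns pods in kube-system, but only on UDP port 53.
    if (
        dst_namespace == "kube-system"
        and dst_labels.get("k8s-app") == "kube-dns"
        and port == 53
        and protocol == "UDP"
    ):
        return True
    # Everything else falls through to the default deny.
    return False


if __name__ == "__main__":
    checks = [
        ("backend-service.tenant-a", ("tenant-a", {}, 80, "TCP")),
        ("kube-dns", ("kube-system", {"k8s-app": "kube-dns"}, 53, "UDP")),
        ("backend-service.tenant-b", ("tenant-b", {}, 80, "TCP")),
        ("api.twitter.com", (None, {}, 80, "TCP")),
    ]
    for name, args in checks:
        print(f"{name:26} {'allow' if egress_allowed(*args) else 'deny'}")
```

The four sample destinations correspond to the curl targets used in this lab, so the output of the sketch can be compared against the real results in the next section.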

4.2 Quiz

√ Cilium supports standard Kubernetes Network Policies

× The Hubble UI only allows you create Cilium Network Policies

√ Adding an empty ingress rule blocks incoming traffic

× Adding an empty egress rule blocks incoming traffic

√ Cilium Network Policies allow to filter DNS requests to Kube DNS

5. Testing the policy

5.1 Applying the policy

Apply the policy:

kubectl apply -f tenant-a-default-policy.yaml

Let's test the connection between the frontend-service and backend-service pods in the tenant-a namespace:

root@server:~# kubectl exec -n tenant-a frontend-service -- curl -sI backend-service.tenant-a

HTTP/1.1 200 OK

X-Powered-By: Express

Vary: Origin, Accept-Encoding

Access-Control-Allow-Credentials: true

Accept-Ranges: bytes

Cache-Control: public, max-age=0

Last-Modified: Thu, 05 Oct 2023 14:24:44 GMT

ETag: W/"809-18b003a03e0"

Content-Type: text/html; charset=UTF-8

Content-Length: 2057

Date: Fri, 30 May 2025 01:17:58 GMT

Connection: keep-alive

Keep-Alive: timeout=5

The command succeeds and we receive an HTTP reply, which shows that communication within the tenant-a namespace and with KubeDNS works as expected.

We can visualize this traffic with hubble:

root@server:~# hubble observe --from-pod tenant-a/frontend-service

May 30 01:17:58.706: tenant-a/frontend-service:42252 (ID:61166) -> kube-system/coredns-6f6b679f8f-rlcsr:53 (ID:64246) to-endpoint FORWARDED (UDP)

May 30 01:17:58.706: tenant-a/frontend-service:42252 (ID:61166) <> kube-system/coredns-6f6b679f8f-rlcsr (ID:64246) pre-xlate-rev TRACED (UDP)

May 30 01:17:58.706: tenant-a/frontend-service:42252 (ID:61166) <> kube-system/coredns-6f6b679f8f-rlcsr (ID:64246) pre-xlate-rev TRACED (UDP)

May 30 01:17:58.707: tenant-a/frontend-service (ID:61166) <> 10.96.0.10:53 (world) pre-xlate-fwd TRACED (UDP)

May 30 01:17:58.707: tenant-a/frontend-service (ID:61166) <> kube-system/coredns-6f6b679f8f-rlcsr:53 (ID:64246) post-xlate-fwd TRANSLATED (UDP)

May 30 01:17:58.707: tenant-a/frontend-service:40047 (ID:61166) -> kube-system/coredns-6f6b679f8f-rlcsr:53 (ID:64246) policy-verdict:L3-L4 EGRESS ALLOWED (UDP)

May 30 01:17:58.707: tenant-a/frontend-service:40047 (ID:61166) -> kube-system/coredns-6f6b679f8f-rlcsr:53 (ID:64246) to-proxy FORWARDED (UDP)

May 30 01:17:58.707: tenant-a/frontend-service:40047 (ID:61166) <> 172.18.0.2 (host) pre-xlate-rev TRACED (UDP)

May 30 01:17:58.707: tenant-a/frontend-service:40047 (ID:61166) <> 172.18.0.2 (host) pre-xlate-rev TRACED (UDP)

May 30 01:17:58.707: tenant-a/frontend-service:40047 (ID:61166) -> kube-system/coredns-6f6b679f8f-rlcsr:53 (ID:64246) dns-request proxy FORWARDED (DNS Query backend-service.tenant-a.svc.cluster.local. AAAA)

May 30 01:17:58.707: tenant-a/frontend-service:40047 (ID:61166) -> kube-system/coredns-6f6b679f8f-rlcsr:53 (ID:64246) dns-request proxy FORWARDED (DNS Query backend-service.tenant-a.svc.cluster.local. A)

May 30 01:17:58.707: tenant-a/frontend-service:40047 (ID:61166) <> kube-system/coredns-6f6b679f8f-rlcsr:53 (ID:64246) to-overlay FORWARDED (UDP)

May 30 01:17:58.707: tenant-a/frontend-service:40047 (ID:61166) -> kube-system/coredns-6f6b679f8f-rlcsr:53 (ID:64246) to-endpoint FORWARDED (UDP)

May 30 01:17:58.707: tenant-a/frontend-service:40047 (ID:61166) <> kube-system/coredns-6f6b679f8f-rlcsr (ID:64246) pre-xlate-rev TRACED (UDP)

May 30 01:17:58.707: tenant-a/frontend-service:40047 (ID:61166) <> kube-system/coredns-6f6b679f8f-rlcsr:53 (ID:64246) to-overlay FORWARDED (UDP)

May 30 01:17:58.707: tenant-a/frontend-service:40047 (ID:61166) <> kube-system/coredns-6f6b679f8f-rlcsr (ID:64246) pre-xlate-rev TRACED (UDP)

May 30 01:17:58.707: tenant-a/frontend-service (ID:61166) <> backend-service.tenant-a.svc.cluster.local:80 (world) pre-xlate-fwd TRACED (TCP)

May 30 01:17:58.707: tenant-a/frontend-service (ID:61166) <> tenant-a/backend-service:80 (ID:12501) post-xlate-fwd TRANSLATED (TCP)

May 30 01:17:58.707: tenant-a/frontend-service:41038 (ID:61166) -> tenant-a/backend-service:80 (ID:12501) policy-verdict:L3-Only EGRESS ALLOWED (TCP Flags: SYN)

May 30 01:17:58.707: tenant-a/frontend-service:41038 (ID:61166) -> tenant-a/backend-service:80 (ID:12501) policy-verdict:L3-Only INGRESS ALLOWED (TCP Flags: SYN)

May 30 01:17:58.707: tenant-a/frontend-service:41038 (ID:61166) -> tenant-a/backend-service:80 (ID:12501) to-endpoint FORWARDED (TCP Flags: SYN)

May 30 01:17:58.708: tenant-a/frontend-service:41038 (ID:61166) -> tenant-a/backend-service:80 (ID:12501) to-endpoint FORWARDED (TCP Flags: ACK)

May 30 01:17:58.708: tenant-a/frontend-service:41038 (ID:61166) -> tenant-a/backend-service:80 (ID:12501) to-endpoint FORWARDED (TCP Flags: ACK, PSH)

May 30 01:17:58.708: tenant-a/frontend-service:41038 (ID:61166) <> tenant-a/backend-service (ID:12501) pre-xlate-rev TRACED (TCP)

May 30 01:17:58.709: tenant-a/frontend-service:41038 (ID:61166) -> tenant-a/backend-service:80 (ID:12501) to-endpoint FORWARDED (TCP Flags: ACK, FIN)

May 30 01:17:58.709: tenant-a/frontend-service:41038 (ID:61166) -> tenant-a/backend-service:80 (ID:12501) to-endpoint FORWARDED (TCP Flags: ACK)

EVENTS LOST: HUBBLE_RING_BUFFER CPU(0) 1

Now let's test traffic that the policy should deny.

Test the connection to api.twitter.com:

root@server:~# kubectl exec -n tenant-a frontend-service -- curl -sI --max-time 5 api.twitter.com

command terminated with exit code 28

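The connection now hangs (because it is blocked at L3/L4) and times out after 5 seconds, the limit set by --max-time 5; curl reports the timeout as exit code 28.

Likewise, let's test a service inside the cluster with:

root@server:~# kubectl exec -n tenant-a frontend-service -- curl -sI --max-time 5 backend-service.tenant-b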

command terminated with exit code 28

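Again, the connection hangs and times out.

This confirms that traffic to external services and to other Kubernetes namespaces is correctly denied by the policy.

5.2 Observing traffic

View all requests in the tenant-a namespace: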

root@server:~# hubble observe --namespace tenant-a

May 30 01:19:22.111: tenant-a/frontend-service:38600 (ID:61166) -> kube-system/coredns-6f6b679f8f-w4l8q:53 (ID:64246) to-endpoint FORWARDED (UDP)

May 30 01:19:22.111: tenant-a/frontend-service:38600 (ID:61166) <> kube-system/coredns-6f6b679f8f-w4l8q (ID:64246) pre-xlate-rev TRACED (UDP)

May 30 01:19:22.111: tenant-a/frontend-service:38600 (ID:61166) <> kube-system/coredns-6f6b679f8f-w4l8q (ID:64246) pre-xlate-rev TRACED (UDP)

May 30 01:19:22.114: tenant-a/frontend-service:38600 (ID:61166) <- kube-system/coredns-6f6b679f8f-w4l8q:53 (ID:64246) to-overlay FORWARDED (UDP)

May 30 01:19:22.114: tenant-a/frontend-service:49697 (ID:61166) -> kube-system/coredns-6f6b679f8f-rlcsr:53 (ID:64246) to-endpoint FORWARDED (UDP)

May 30 01:19:22.114: tenant-a/frontend-service:49697 (ID:61166) <> kube-system/coredns-6f6b679f8f-rlcsr (ID:64246) pre-xlate-rev TRACED (UDP)

May 30 01:19:22.114: tenant-a/frontend-service:49697 (ID:61166) <> kube-system/coredns-6f6b679f8f-rlcsr (ID:64246) pre-xlate-rev TRACED (UDP)

May 30 01:19:22.116: tenant-a/frontend-service:49697 (ID:61166) <- kube-system/coredns-6f6b679f8f-rlcsr:53 (ID:64246) to-overlay FORWARDED (UDP)

May 30 01:19:22.116: tenant-a/frontend-service:37254 (ID:61166) -> kube-system/coredns-6f6b679f8f-rlcsr:53 (ID:64246) to-endpoint FORWARDED (UDP)

May 30 01:19:22.116: tenant-a/frontend-service:37254 (ID:61166) <> kube-system/coredns-6f6b679f8f-rlcsr (ID:64246) pre-xlate-rev TRACED (UDP)

May 30 01:19:22.116: tenant-a/frontend-service:37254 (ID:61166) <> kube-system/coredns-6f6b679f8f-rlcsr (ID:64246) pre-xlate-rev TRACED (UDP)

May 30 01:19:22.117: tenant-a/frontend-service:37254 (ID:61166) <- kube-system/coredns-6f6b679f8f-rlcsr:53 (ID:64246) to-overlay FORWARDED (UDP)

May 30 01:20:01.119: tenant-a/frontend-service:37720 (ID:61166) -> kube-system/coredns-6f6b679f8f-rlcsr:53 (ID:64246) to-endpoint FORWARDED (UDP)

May 30 01:20:01.119: tenant-a/frontend-service:37720 (ID:61166) <> kube-system/coredns-6f6b679f8f-rlcsr (ID:64246) pre-xlate-rev TRACED (UDP)

May 30 01:20:01.119: tenant-a/frontend-service:37720 (ID:61166) <> kube-system/coredns-6f6b679f8f-rlcsr (ID:64246) pre-xlate-rev TRACED (UDP)

May 30 01:20:01.119: tenant-a/frontend-service:37720 (ID:61166) <- kube-system/coredns-6f6b679f8f-rlcsr:53 (ID:64246) to-overlay FORWARDED (UDP)

May 30 01:20:01.120: tenant-a/frontend-service:56405 (ID:61166) -> kube-system/coredns-6f6b679f8f-rlcsr:53 (ID:64246) to-endpoint FORWARDED (UDP)

May 30 01:20:01.120: tenant-a/frontend-service:56405 (ID:61166) <> kube-system/coredns-6f6b679f8f-rlcsr (ID:64246) pre-xlate-rev TRACED (UDP)

May 30 01:20:01.120: tenant-a/frontend-service:56405 (ID:61166) <> kube-system/coredns-6f6b679f8f-rlcsr:53 (ID:64246) to-overlay FORWARDED (UDP)

May 30 01:20:01.120: tenant-a/frontend-service:56405 (ID:61166) <> kube-system/coredns-6f6b679f8f-rlcsr (ID:64246) pre-xlate-rev TRACED (UDP)

May 30 01:20:01.120: tenant-a/frontend-service:56405 (ID:61166) <- kube-system/coredns-6f6b679f8f-rlcsr:53 (ID:64246) to-overlay FORWARDED (UDP)

May 30 01:20:01.120: tenant-a/frontend-service:56405 (ID:61166) <- kube-system/coredns-6f6b679f8f-rlcsr:53 (ID:64246) to-proxy FORWARDED (UDP)

May 30 01:20:01.120: tenant-a/frontend-service:56405 (ID:61166) <- kube-system/coredns-6f6b679f8f-rlcsr:53 (ID:64246) dns-response proxy FORWARDED (DNS Answer TTL: 4294967295 (Proxy backend-service.tenant-b.svc.cluster.local. AAAA))

May 30 01:20:01.120: tenant-a/frontend-service:56405 (ID:61166) <- kube-system/coredns-6f6b679f8f-rlcsr:53 (ID:64246) dns-response proxy FORWARDED (DNS Answer "10.96.16.75" TTL: 30 (Proxy backend-service.tenant-b.svc.cluster.local. A))

May 30 01:20:01.120: kube-system/coredns-6f6b679f8f-rlcsr:53 (ID:64246) <> tenant-a/frontend-service (ID:61166) pre-xlate-rev TRACED (UDP)

May 30 01:20:01.120: 10.96.0.10:53 (world) <> tenant-a/frontend-service (ID:61166) post-xlate-rev TRANSLATED (UDP)

May 30 01:20:01.120: kube-system/coredns-6f6b679f8f-rlcsr:53 (ID:64246) <> tenant-a/frontend-service (ID:61166) pre-xlate-rev TRACED (UDP)

May 30 01:20:01.120: 10.96.0.10:53 (world) <> tenant-a/frontend-service (ID:61166) post-xlate-rev TRANSLATED (UDP)

May 30 01:20:01.120: tenant-a/frontend-service (ID:61166) <> backend-service.tenant-b.svc.cluster.local:80 (world) pre-xlate-fwd TRACED (TCP)

May 30 01:20:01.120: tenant-a/frontend-service (ID:61166) <> tenant-b/backend-service:80 (ID:32849) post-xlate-fwd TRANSLATED (TCP)

May 30 01:20:01.120: tenant-a/frontend-service:40778 (ID:61166) <> tenant-b/backend-service:80 (ID:32849) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:20:01.120: tenant-a/frontend-service:40778 (ID:61166) <> tenant-b/backend-service:80 (ID:32849) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:20:02.172: tenant-a/frontend-service:40778 (ID:61166) <> tenant-b/backend-service:80 (ID:32849) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:20:02.172: tenant-a/frontend-service:40778 (ID:61166) <> tenant-b/backend-service:80 (ID:32849) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:20:03.196: tenant-a/frontend-service:40778 (ID:61166) <> tenant-b/backend-service:80 (ID:32849) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:20:03.196: tenant-a/frontend-service:40778 (ID:61166) <> tenant-b/backend-service:80 (ID:32849) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:20:04.220: tenant-a/frontend-service:40778 (ID:61166) <> tenant-b/backend-service:80 (ID:32849) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:20:04.220: tenant-a/frontend-service:40778 (ID:61166) <> tenant-b/backend-service:80 (ID:32849) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:20:05.244: tenant-a/frontend-service:40778 (ID:61166) <> tenant-b/backend-service:80 (ID:32849) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:20:05.244: tenant-a/frontend-service:40778 (ID:61166) <> tenant-b/backend-service:80 (ID:32849) Policy denied DROPPED (TCP Flags: SYN)

You will see flows marked FORWARDED or DROPPED.

You can filter on this with the --verdict flag, for example:

root@server:~# hubble observe --namespace tenant-a --verdict DROPPED

May 30 01:19:22.126: tenant-a/frontend-service:47400 (ID:61166) <> api.twitter.com:80 (world) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:19:22.126: tenant-a/frontend-service:47400 (ID:61166) <> api.twitter.com:80 (world) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:19:23.132: tenant-a/frontend-service:47400 (ID:61166) <> api.twitter.com:80 (world) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:19:23.132: tenant-a/frontend-service:47400 (ID:61166) <> api.twitter.com:80 (world) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:19:24.156: tenant-a/frontend-service:47400 (ID:61166) <> api.twitter.com:80 (world) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:19:24.156: tenant-a/frontend-service:47400 (ID:61166) <> api.twitter.com:80 (world) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:19:25.619: tenant-a/frontend-service:44836 (ID:61166) <> api.twitter.com:80 (world) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:19:25.619: tenant-a/frontend-service:44836 (ID:61166) <> api.twitter.com:80 (world) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:19:26.652: tenant-a/frontend-service:44836 (ID:61166) <> api.twitter.com:80 (world) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:19:26.652: tenant-a/frontend-service:44836 (ID:61166) <> api.twitter.com:80 (world) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:20:01.120: tenant-a/frontend-service:40778 (ID:61166) <> tenant-b/backend-service:80 (ID:32849) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:20:01.120: tenant-a/frontend-service:40778 (ID:61166) <> tenant-b/backend-service:80 (ID:32849) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:20:02.172: tenant-a/frontend-service:40778 (ID:61166) <> tenant-b/backend-service:80 (ID:32849) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:20:02.172: tenant-a/frontend-service:40778 (ID:61166) <> tenant-b/backend-service:80 (ID:32849) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:20:03.196: tenant-a/frontend-service:40778 (ID:61166) <> tenant-b/backend-service:80 (ID:32849) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:20:03.196: tenant-a/frontend-service:40778 (ID:61166) <> tenant-b/backend-service:80 (ID:32849) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:20:04.220: tenant-a/frontend-service:40778 (ID:61166) <> tenant-b/backend-service:80 (ID:32849) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:20:04.220: tenant-a/frontend-service:40778 (ID:61166) <> tenant-b/backend-service:80 (ID:32849) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:20:05.244: tenant-a/frontend-service:40778 (ID:61166) <> tenant-b/backend-service:80 (ID:32849) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:20:05.244: tenant-a/frontend-service:40778 (ID:61166) <> tenant-b/backend-service:80 (ID:32849) Policy denied DROPPED (TCP Flags: SYN)

You should be able to see the requests that were dropped in the previous challenge.
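With many retries in the output, it can help to collapse the dropped flows into a per-destination count. The following is a minimal sketch that assumes the compact text format shown above; `count_dropped_destinations` is a hypothetical helper, not part of Hubble. It counts only lines whose verdict is DROPPED, so the accompanying "policy-verdict:none EGRESS DENIED" line for the same packet is not counted twice.

```python
import re
from collections import Counter

# Assumption: the destination is the token that follows the direction marker
# (->, <- or <>) in Hubble's compact text output.
DEST_RE = re.compile(r" (?:->|<-|<>) (\S+) \(")


def count_dropped_destinations(lines):
    """Count flows whose verdict is DROPPED, grouped by destination."""
    counts = Counter()
    for line in lines:
        # Skip everything that is not a DROPPED verdict line.
        if " DROPPED (" not in line:
            continue
        match = DEST_RE.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


if __name__ == "__main__":
    sample = [
        "May 30 01:19:22.126: tenant-a/frontend-service:47400 (ID:61166) <> api.twitter.com:80 (world) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)",
        "May 30 01:19:22.126: tenant-a/frontend-service:47400 (ID:61166) <> api.twitter.com:80 (world) Policy denied DROPPED (TCP Flags: SYN)",
        "May 30 01:20:01.120: tenant-a/frontend-service:40778 (ID:61166) <> tenant-b/backend-service:80 (ID:32849) Policy denied DROPPED (TCP Flags: SYN)",
    ]
    for destination, count in count_dropped_destinations(sample).items():
        print(destination, count)
```

The sample lines are copied from the output above; in practice the list could be filled from the saved output of the hubble observe command.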

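5.3 Observing with the Hubble UI

Click Connections and select the tenant-a namespace.

This shows how the Hubble UI makes service connectivity easier to understand, and how it displays connections that fail because a network policy dropped them.

In the service map, a red line at the end of an arrow indicates dropped flows, while grey indicates that the flows succeeded.

The flows table at the bottom of the pane also gives a simplified view of the connections in this namespace, including when each flow was last seen.

5.4 Quiz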

√ The Hubble CLI allows to observe all Kubernetes traffic

× Hubble (CLI & UI) always display external DNS names

√ The Hubble service map displays connection drops

√ The Hubble CLI output can be filtered by pod

6. 根据Hubble流更新网络策略

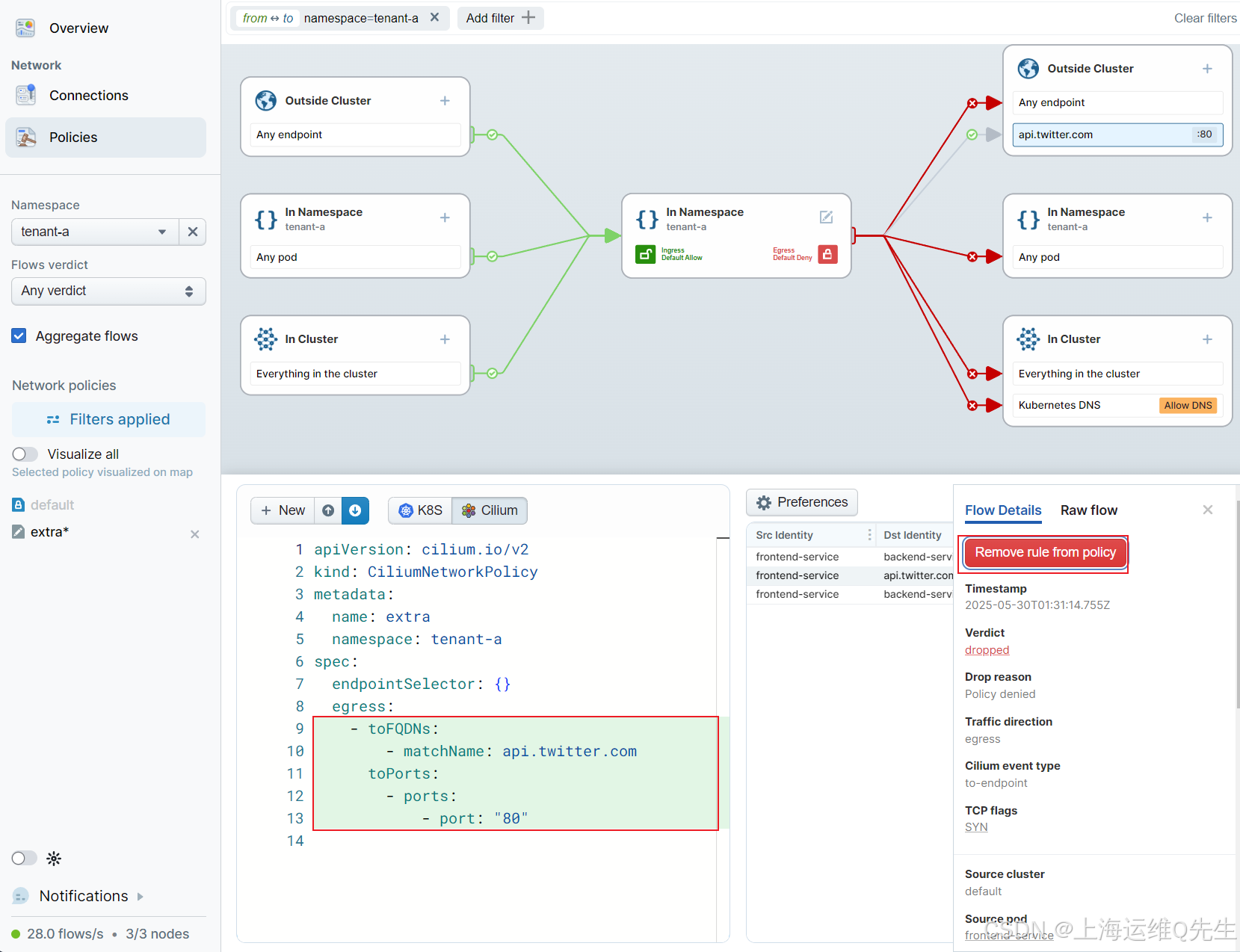

在 Hubble UI 中,转到 Policies ( 策略) 视图并选择 tenant-a 命名空间。

在右下角,我们看到 Hubble 已经识别了在 tenant-a 命名空间中观察到的一组当前策略不允许的流,并将它们标记为已删除 。

6.1 创建新策略

为了允许其他流量,我们可以向现有网络策略添加规则。

单击编辑器窗格左上角的 + New 按钮。

📝 然后单击中心框中的图标,并将策略重命名为 extra。点击Save 保存.

查看右下角窗格中的 flows 表。其中两个请求的判决被丢弃 ,即对 tenant-b 中的 backend-service 和 api.twitter.com 的请求。

单击与 tenant-b 中的 backend-service 对应的行,然后选择 Add rule to policy。YAML 清单现已更新以接受此流量!

重复该作以允许流量 api.twitter.com。

这将产生一个精细的网络策略,该策略允许所需的连接,同时保留 Zero Trust 网络策略的默认拒绝方面。

这些更改也会反映在策略可视化中。例如,选中右侧名为 In Cluster 的下框,现在在 DNS 规则下方显示了另一条规则。

由于我们正在制定新的网络策略,因此主窗格仅显示此特定策略的规则。

在左列的底部,您可以看到此命名空间的策略列表,其中 extra 以粗体字表示,允许您在策略之间切换。

切换列表顶部的 Visualize all 按钮。主窗格现在显示同时应用的所有策略的结果。

6.2 保存并执行策略

将extra的内容保存到文件

apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: extra
  namespace: tenant-a
spec:
  endpointSelector: {}
  egress:
    - toFQDNs:
        - matchName: api.twitter.com
      toPorts:
        - ports:
            - port: "80"

Apply the new rules:

kubectl apply -f tenant-a-extra-policy.yaml
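Before testing, it may help to spell out what the toFQDNs rule in the extra policy is expected to match. The sketch below is a toy model only: `extra_policy_allows` is a hypothetical name, and the normalization (lower-casing and dropping a trailing dot) is an assumption of this sketch rather than a statement about Cilium's implementation. It illustrates that matchName is an exact name match and that only port 80 is listed.

```python
# Toy model of the single egress rule in the "extra" policy; for reasoning only.
ALLOWED_FQDNS = {"api.twitter.com"}  # from "matchName: api.twitter.com"
ALLOWED_PORTS = {80}                 # from 'port: "80"'


def normalize(fqdn):
    """Lower-case the name and drop a trailing dot (assumption of this sketch)."""
    return fqdn.rstrip(".").lower()


def extra_policy_allows(fqdn, port):
    """Return True if the extra policy's toFQDNs rule would match this request."""
    return normalize(fqdn) in ALLOWED_FQDNS and port in ALLOWED_PORTS


if __name__ == "__main__":
    for fqdn, port in [
        ("api.twitter.com", 80),
        ("api.twitter.com", 443),
        ("www.google.com", 80),
    ]:
        verdict = "allow" if extra_policy_allows(fqdn, port) else "deny"
        print(f"{fqdn}:{port} -> {verdict}")
```

Under this model, the HTTPS location that api.twitter.com redirects to (port 443) would not be covered by the rule, which is worth keeping in mind if the test is extended beyond a plain HTTP HEAD request.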

6.3 Testing the policy

Let's verify that our policies work as intended by running the same curl commands as before.

Test within the tenant:

root@server:~# kubectl exec -n tenant-a frontend-service -- curl -sI --max-time 5 backend-service.tenant-a

HTTP/1.1 200 OK

X-Powered-By: Express

Vary: Origin, Accept-Encoding

Access-Control-Allow-Credentials: true

Accept-Ranges: bytes

Cache-Control: public, max-age=0

Last-Modified: Thu, 05 Oct 2023 14:24:44 GMT

ETag: W/"809-18b003a03e0"

Content-Type: text/html; charset=UTF-8

Content-Length: 2057

Date: Fri, 30 May 2025 01:43:50 GMT

Connection: keep-alive

Keep-Alive: timeout=5

Test the external service:

root@server:~# kubectl exec -n tenant-a frontend-service -- curl -sI --max-time 5 api.twitter.com

HTTP/1.1 301 Moved Permanently

Date: Fri, 30 May 2025 01:44:05 GMT

Connection: keep-alive

location: https://api.twitter.com/

x-connection-hash: 4f4309bf4e4addf64c0e07844f4bb940265e0c08bfc6401ff84ab5dc0f1c11de

cf-cache-status: DYNAMIC

Set-Cookie: __cf_bm=jH7pT_6q5Ahf1bi5orj4hLi5ds4iwg.B59McL_hIEd4-1748569445-1.0.1.1-uUluHlV3wQ905kI4lnii8I9CfYkTZO.HCh0j53.4wH7NB0gdlqwxgrVb4rmXAxrR7YnCuEfYhgzDM8RalcHCWMcqJ484UI0ZINnbgz_Gov4; path=/; expires=Fri, 30-May-25 02:14:05 GMT; domain=.twitter.com; HttpOnly

Server: cloudflare tsa_b

CF-RAY: 947a8b56cce20358-CDG

Test a service in another tenant:

root@server:~# kubectl exec -n tenant-a frontend-service -- curl -sI --max-time 5 backend-service.tenant-b

command terminated with exit code 28

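This matches the policy we saved, which only contains the api.twitter.com rule.

6.4 Testing denied traffic

Let's check that other external destinations are still denied.

Another external service:

root@server:~# kubectl exec -n tenant-a frontend-service -- curl -sI --max-time 5 www.google.com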

command terminated with exit code 28

Another internal service:

root@server:~# kubectl exec -n tenant-a frontend-service -- curl -sI --max-time 5 backend-service.tenant-c

command terminated with exit code 28

As you can see, these are still unreachable. We can inspect the flows with:

root@server:~# hubble observe --namespace tenant-a

May 30 01:45:15.992: tenant-a/frontend-service:37240 (ID:5591) -> kube-system/coredns-6f6b679f8f-54xhj:53 (ID:5794) to-endpoint FORWARDED (UDP)

May 30 01:45:15.992: tenant-a/frontend-service:37240 (ID:5591) <> kube-system/coredns-6f6b679f8f-54xhj (ID:5794) pre-xlate-rev TRACED (UDP)

May 30 01:45:15.992: tenant-a/frontend-service:37240 (ID:5591) <> kube-system/coredns-6f6b679f8f-54xhj (ID:5794) pre-xlate-rev TRACED (UDP)

May 30 01:45:15.994: tenant-a/frontend-service:37240 (ID:5591) <- kube-system/coredns-6f6b679f8f-54xhj:53 (ID:5794) to-overlay FORWARDED (UDP)

May 30 01:45:15.994: tenant-a/frontend-service:33400 (ID:5591) -> kube-system/coredns-6f6b679f8f-wbmhb:53 (ID:5794) to-endpoint FORWARDED (UDP)

May 30 01:45:15.994: tenant-a/frontend-service:33400 (ID:5591) <> kube-system/coredns-6f6b679f8f-wbmhb (ID:5794) pre-xlate-rev TRACED (UDP)

May 30 01:45:15.994: tenant-a/frontend-service:33400 (ID:5591) <> kube-system/coredns-6f6b679f8f-wbmhb (ID:5794) pre-xlate-rev TRACED (UDP)

May 30 01:45:15.995: tenant-a/frontend-service:33400 (ID:5591) <- kube-system/coredns-6f6b679f8f-wbmhb:53 (ID:5794) to-overlay FORWARDED (UDP)

May 30 01:45:15.996: tenant-a/frontend-service:52771 (ID:5591) -> kube-system/coredns-6f6b679f8f-54xhj:53 (ID:5794) to-endpoint FORWARDED (UDP)

May 30 01:45:15.996: tenant-a/frontend-service:52771 (ID:5591) <> kube-system/coredns-6f6b679f8f-54xhj (ID:5794) pre-xlate-rev TRACED (UDP)

May 30 01:45:15.996: tenant-a/frontend-service:52771 (ID:5591) <> kube-system/coredns-6f6b679f8f-54xhj (ID:5794) pre-xlate-rev TRACED (UDP)

May 30 01:45:15.998: tenant-a/frontend-service:52771 (ID:5591) <- kube-system/coredns-6f6b679f8f-54xhj:53 (ID:5794) to-overlay FORWARDED (UDP)

May 30 01:45:29.005: tenant-a/frontend-service:59030 (ID:5591) -> kube-system/coredns-6f6b679f8f-54xhj:53 (ID:5794) to-endpoint FORWARDED (UDP)

May 30 01:45:29.005: tenant-a/frontend-service:59030 (ID:5591) <> kube-system/coredns-6f6b679f8f-54xhj (ID:5794) pre-xlate-rev TRACED (UDP)

May 30 01:45:29.005: tenant-a/frontend-service:59030 (ID:5591) <> kube-system/coredns-6f6b679f8f-54xhj (ID:5794) pre-xlate-rev TRACED (UDP)

May 30 01:45:29.006: tenant-a/frontend-service:59030 (ID:5591) <- kube-system/coredns-6f6b679f8f-54xhj:53 (ID:5794) to-overlay FORWARDED (UDP)

May 30 01:45:29.006: tenant-a/frontend-service:49123 (ID:5591) -> kube-system/coredns-6f6b679f8f-wbmhb:53 (ID:5794) to-endpoint FORWARDED (UDP)

May 30 01:45:29.006: tenant-a/frontend-service:49123 (ID:5591) <> kube-system/coredns-6f6b679f8f-wbmhb:53 (ID:5794) to-overlay FORWARDED (UDP)

May 30 01:45:29.006: tenant-a/frontend-service:49123 (ID:5591) <> kube-system/coredns-6f6b679f8f-wbmhb (ID:5794) pre-xlate-rev TRACED (UDP)

May 30 01:45:29.006: tenant-a/frontend-service:49123 (ID:5591) <> kube-system/coredns-6f6b679f8f-wbmhb (ID:5794) pre-xlate-rev TRACED (UDP)

May 30 01:45:29.007: tenant-a/frontend-service:49123 (ID:5591) <- kube-system/coredns-6f6b679f8f-wbmhb:53 (ID:5794) to-overlay FORWARDED (UDP)

May 30 01:45:29.007: tenant-a/frontend-service:49123 (ID:5591) <- kube-system/coredns-6f6b679f8f-wbmhb:53 (ID:5794) to-proxy FORWARDED (UDP)

May 30 01:45:29.007: tenant-a/frontend-service:49123 (ID:5591) <- kube-system/coredns-6f6b679f8f-wbmhb:53 (ID:5794) dns-response proxy FORWARDED (DNS Answer TTL: 4294967295 (Proxy backend-service.tenant-c.svc.cluster.local. AAAA))

May 30 01:45:29.007: tenant-a/frontend-service:49123 (ID:5591) <- kube-system/coredns-6f6b679f8f-wbmhb:53 (ID:5794) dns-response proxy FORWARDED (DNS Answer "10.96.102.16" TTL: 30 (Proxy backend-service.tenant-c.svc.cluster.local. A))

May 30 01:45:29.007: kube-system/coredns-6f6b679f8f-wbmhb:53 (ID:5794) <> tenant-a/frontend-service (ID:5591) pre-xlate-rev TRACED (UDP)

May 30 01:45:29.007: 10.96.0.10:53 (world) <> tenant-a/frontend-service (ID:5591) post-xlate-rev TRANSLATED (UDP)

May 30 01:45:29.007: kube-system/coredns-6f6b679f8f-wbmhb:53 (ID:5794) <> tenant-a/frontend-service (ID:5591) pre-xlate-rev TRACED (UDP)

May 30 01:45:29.007: 10.96.0.10:53 (world) <> tenant-a/frontend-service (ID:5591) post-xlate-rev TRANSLATED (UDP)

May 30 01:45:29.007: tenant-a/frontend-service (ID:5591) <> backend-service.tenant-c.svc.cluster.local:80 (world) pre-xlate-fwd TRACED (TCP)

May 30 01:45:29.007: tenant-a/frontend-service (ID:5591) <> tenant-c/backend-service:80 (ID:3374) post-xlate-fwd TRANSLATED (TCP)

May 30 01:45:29.007: tenant-a/frontend-service:44084 (ID:5591) <> tenant-c/backend-service:80 (ID:3374) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:45:29.007: tenant-a/frontend-service:44084 (ID:5591) <> tenant-c/backend-service:80 (ID:3374) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:45:30.051: tenant-a/frontend-service:44084 (ID:5591) <> tenant-c/backend-service:80 (ID:3374) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:45:30.051: tenant-a/frontend-service:44084 (ID:5591) <> tenant-c/backend-service:80 (ID:3374) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:45:31.075: tenant-a/frontend-service:44084 (ID:5591) <> tenant-c/backend-service:80 (ID:3374) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:45:31.075: tenant-a/frontend-service:44084 (ID:5591) <> tenant-c/backend-service:80 (ID:3374) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:45:32.099: tenant-a/frontend-service:44084 (ID:5591) <> tenant-c/backend-service:80 (ID:3374) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:45:32.099: tenant-a/frontend-service:44084 (ID:5591) <> tenant-c/backend-service:80 (ID:3374) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:45:33.123: tenant-a/frontend-service:44084 (ID:5591) <> tenant-c/backend-service:80 (ID:3374) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:45:33.123: tenant-a/frontend-service:44084 (ID:5591) <> tenant-c/backend-service:80 (ID:3374) Policy denied DROPPED (TCP Flags: SYN)

Show only the dropped traffic:

root@server:~# hubble observe --namespace tenant-a --verdict DROPPED

May 30 01:45:18.083: tenant-a/frontend-service:49892 (ID:5591) <> www.google.com:80 (world) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:45:18.083: tenant-a/frontend-service:49892 (ID:5591) <> www.google.com:80 (world) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:45:19.095: tenant-a/frontend-service:52790 (ID:5591) <> www.google.com:80 (world) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:45:19.095: tenant-a/frontend-service:52790 (ID:5591) <> www.google.com:80 (world) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:45:20.364: tenant-a/frontend-service:43234 (ID:5591) <> www.google.com:80 (world) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:45:20.364: tenant-a/frontend-service:43234 (ID:5591) <> www.google.com:80 (world) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:45:20.677: tenant-a/frontend-service:53370 (ID:5591) <> www.google.com:80 (world) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:45:20.677: tenant-a/frontend-service:53370 (ID:5591) <> www.google.com:80 (world) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:45:20.831: tenant-a/frontend-service:35940 (ID:5591) <> www.google.com:80 (world) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:45:20.831: tenant-a/frontend-service:35940 (ID:5591) <> www.google.com:80 (world) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:45:29.007: tenant-a/frontend-service:44084 (ID:5591) <> tenant-c/backend-service:80 (ID:3374) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:45:29.007: tenant-a/frontend-service:44084 (ID:5591) <> tenant-c/backend-service:80 (ID:3374) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:45:30.051: tenant-a/frontend-service:44084 (ID:5591) <> tenant-c/backend-service:80 (ID:3374) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:45:30.051: tenant-a/frontend-service:44084 (ID:5591) <> tenant-c/backend-service:80 (ID:3374) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:45:31.075: tenant-a/frontend-service:44084 (ID:5591) <> tenant-c/backend-service:80 (ID:3374) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:45:31.075: tenant-a/frontend-service:44084 (ID:5591) <> tenant-c/backend-service:80 (ID:3374) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:45:32.099: tenant-a/frontend-service:44084 (ID:5591) <> tenant-c/backend-service:80 (ID:3374) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:45:32.099: tenant-a/frontend-service:44084 (ID:5591) <> tenant-c/backend-service:80 (ID:3374) Policy denied DROPPED (TCP Flags: SYN)

May 30 01:45:33.123: tenant-a/frontend-service:44084 (ID:5591) <> tenant-c/backend-service:80 (ID:3374) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

May 30 01:45:33.123: tenant-a/frontend-service:44084 (ID:5591) <> tenant-c/backend-service:80 (ID:3374) Policy denied DROPPED (TCP Flags: SYN)
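Each TCP retransmission produces another pair of lines, so the dropped output above is repetitive. Collapsing it by destination makes it easier to see which egress rules are missing. The following is a minimal sketch that assumes the compact text format shown above; the regular expression and the names `summarize_drops` and `strip_port` are illustrative and not part of Hubble.

```python
import re
from collections import Counter

# Matches the compact one-line flow format shown in the output above.
FLOW_RE = re.compile(
    r"^\S+ +\d+ +[\d:.]+ +"                      # timestamp, e.g. "May 30 01:45:18.083:"
    r"(?P<src>\S+) \([^)]*\) (?:<>|->|<-) "      # source endpoint and identity
    r"(?P<dst>\S+) \([^)]*\) (?P<rest>.*)$"      # destination, identity, verdict text
)


def strip_port(endpoint):
    """Drop a trailing numeric port so ephemeral source ports do not split the counts."""
    name, sep, port = endpoint.rpartition(":")
    return name if sep and port.isdigit() else endpoint


def summarize_drops(lines):
    """Count lines marked DROPPED per (source, destination:port) pair."""
    counts = Counter()
    for line in lines:
        match = FLOW_RE.match(line.strip())
        if match is None or "DROPPED" not in match.group("rest"):
            continue
        counts[(strip_port(match.group("src")), match.group("dst"))] += 1
    return counts


SAMPLE = """
May 30 01:45:18.083: tenant-a/frontend-service:49892 (ID:5591) <> www.google.com:80 (world) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)
May 30 01:45:18.083: tenant-a/frontend-service:49892 (ID:5591) <> www.google.com:80 (world) Policy denied DROPPED (TCP Flags: SYN)
May 30 01:45:19.095: tenant-a/frontend-service:52790 (ID:5591) <> www.google.com:80 (world) Policy denied DROPPED (TCP Flags: SYN)
May 30 01:45:29.006: tenant-a/frontend-service:59030 (ID:5591) <- kube-system/coredns-6f6b679f8f-54xhj:53 (ID:5794) to-overlay FORWARDED (UDP)
May 30 01:45:29.007: tenant-a/frontend-service:44084 (ID:5591) <> tenant-c/backend-service:80 (ID:3374) Policy denied DROPPED (TCP Flags: SYN)
"""

if __name__ == "__main__":
    for (src, dst), n in summarize_drops(SAMPLE.splitlines()).most_common():
        print(f"{n:3d}  {src} -> {dst}")
```

Each distinct destination in the summary is a candidate for a new egress rule. Here those are `www.google.com:80` and `tenant-c/backend-service:80`.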

6.5 Quiz

√ Rules can be added to an existing Network Policy

√ Rules can be added by creating a new Network Policy

√ The Hubble Network Policy editor allows to edit existing Kubernetes Network Policies

× Modifying Network Policies in Hubble automatically applies them to the cluster

× Hubble cannot let you view all policies applying to namespace at the same time

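7. Boss Fight

7.1 Task

For this practical exam, you need to:

- Create a policy named default-exam in the tenant-b namespace (using the default-exam.yaml file)
- Allow traffic from all Pods in the tenant-b namespace to google.com on port 443
- Allow Kubernetes DNS traffic in tenant-b
- Allow traffic to the backend-service Pod in the tenant-c namespace on port 80
- Apply the policy

You can test with the following command: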

kubectl exec -n tenant-b frontend-service -- curl -sI --max-time 5 backend-service.tenant-c

7.2 Solution

Create default-exam.yaml according to requirements 1-3:

root@server:~# yq default-exam.yaml

apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: default-exam
  namespace: tenant-b
spec:
  endpointSelector: {}
  ingress:
    - {}
  egress:
    - toFQDNs:
        - matchName: google.com
      toPorts:
        - ports:
            - port: "443"
    - toEndpoints:
        - matchLabels:
            any:io.kubernetes.pod.namespace: kube-system
            any:k8s-app: kube-dns
      toPorts:
        - ports:
            - port: "53"
              protocol: UDP
          rules:
            dns:
              - matchPattern: "*"
    - toEndpoints:
        - {}

Apply the policy:

k apply -f default-exam.yaml

Test access:

root@server:~# k apply -f default-exam.yaml

ciliumnetworkpolicy.cilium.io/default-exam created

root@server:~# kubectl exec -n tenant-b frontend-service -- curl -sI --max-time 5 backend-service.tenant-c

command terminated with exit code 28

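Exit code 28 is curl's timeout error: the 5-second --max-time limit expired, most likely because no egress rule yet allows traffic from tenant-b to tenant-c. Add the dropped flow to the policy.

Copy the resulting CiliumNetworkPolicy into default-exam.yaml.

Confirm the file contents and apply the configuration: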

root@server:~# yq default-exam.yaml

apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: default-exam
  namespace: tenant-b
spec:
  endpointSelector: {}
  # ingress:
  # - {}
  egress:
    - toEndpoints:
        - matchLabels:
            io.kubernetes.pod.namespace: kube-system
            k8s-app: kube-dns
      toPorts:
        - ports:
            - port: "53"
              protocol: UDP
          rules:
            dns:
              - matchPattern: "*"
    - toFQDNs:
        - matchName: google.com
      toPorts:
        - ports:
            - port: "443"
    - toEndpoints:
        - matchLabels:
            k8s:app: backend-service
            k8s:io.kubernetes.pod.namespace: tenant-c
      toPorts:
        - ports:
            - port: "80"
    - toEndpoints:
        - {}

root@server:~# k apply -f default-exam.yaml

ciliumnetworkpolicy.cilium.io/default-exam configured
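The applied policy can also be generated programmatically instead of edited by hand. As a rough sketch, the script below builds the same structure as a Python dictionary and prints it as JSON, which kubectl accepts in place of YAML. The helper name `build_exam_policy` is hypothetical; the field values are copied from the default-exam.yaml shown above.

```python
import json


def build_exam_policy():
    """Return the default-exam CiliumNetworkPolicy as a plain dictionary."""
    return {
        "apiVersion": "cilium.io/v2",
        "kind": "CiliumNetworkPolicy",
        "metadata": {"name": "default-exam", "namespace": "tenant-b"},
        "spec": {
            # An empty selector applies the policy to every Pod in tenant-b.
            "endpointSelector": {},
            "egress": [
                # Kubernetes DNS, with DNS visibility for all query names.
                {
                    "toEndpoints": [{"matchLabels": {
                        "io.kubernetes.pod.namespace": "kube-system",
                        "k8s-app": "kube-dns",
                    }}],
                    "toPorts": [{
                        "ports": [{"port": "53", "protocol": "UDP"}],
                        "rules": {"dns": [{"matchPattern": "*"}]},
                    }],
                },
                # google.com on port 443.
                {
                    "toFQDNs": [{"matchName": "google.com"}],
                    "toPorts": [{"ports": [{"port": "443"}]}],
                },
                # backend-service in tenant-c on port 80.
                {
                    "toEndpoints": [{"matchLabels": {
                        "k8s:app": "backend-service",
                        "k8s:io.kubernetes.pod.namespace": "tenant-c",
                    }}],
                    "toPorts": [{"ports": [{"port": "80"}]}],
                },
                # Remaining rule from the original file, kept unchanged.
                {"toEndpoints": [{}]},
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_exam_policy(), indent=2))
```

Assuming the script is saved as gen_policy.py (an illustrative file name), its output can be piped straight into the cluster with `python3 gen_policy.py | kubectl apply -f -`.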

Test access to the tenant-c service again:

root@server:~# kubectl exec -n tenant-b frontend-service -- curl -sI --max-time 5 backend-service.tenant-c

HTTP/1.1 200 OK

X-Powered-By: Express

Vary: Origin, Accept-Encoding

Access-Control-Allow-Credentials: true

Accept-Ranges: bytes

Cache-Control: public, max-age=0

Last-Modified: Thu, 05 Oct 2023 14:24:44 GMT

ETag: W/"809-18b003a03e0"

Content-Type: text/html; charset=UTF-8

Content-Length: 2057

Date: Fri, 30 May 2025 01:57:28 GMT

Connection: keep-alive

Keep-Alive: timeout=5

That clearly succeeded. Next, test access to google.com:

root@server:~# kubectl exec -n tenant-b frontend-service -- curl -sI --max-time 5 https://google.com

HTTP/2 301

location: https://www.google.com/

content-type: text/html; charset=UTF-8

content-security-policy-report-only: object-src 'none';base-uri 'self';script-src 'nonce--v6mW7uA2tULH4CO1MAfKQ' 'strict-dynamic' 'report-sample' 'unsafe-eval' 'unsafe-inline' https: http:;report-uri https://csp.withgoogle.com/csp/gws/other-hp

date: Fri, 30 May 2025 02:03:29 GMT

expires: Sun, 29 Jun 2025 02:03:29 GMT

cache-control: public, max-age=2592000

server: gws

content-length: 220

x-xss-protection: 0

x-frame-options: SAMEORIGIN

This works as well.
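The two manual checks above can be wrapped in a small script so they are easy to repeat after each policy change. This is only a sketch: the kubectl and curl arguments are taken from the commands above, while the names `kubectl_curl`, `run_command` and `check_targets` are hypothetical. The command runner is injectable, so the logic can be exercised without a cluster.

```python
import subprocess

TARGETS = ["backend-service.tenant-c", "https://google.com"]


def kubectl_curl(target):
    """Build the same kubectl exec / curl command used in the manual tests."""
    return [
        "kubectl", "exec", "-n", "tenant-b", "frontend-service", "--",
        "curl", "-sI", "--max-time", "5", target,
    ]


def run_command(argv):
    """Run a command and return its exit code (127 if the binary is missing)."""
    try:
        return subprocess.run(argv, capture_output=True, text=True).returncode
    except FileNotFoundError:
        return 127


def check_targets(targets, run=run_command):
    """Map each target to True when the command exits with code 0."""
    return {target: run(kubectl_curl(target)) == 0 for target in targets}


if __name__ == "__main__":
    for target, ok in check_targets(TARGETS).items():
        print(f"{'OK  ' if ok else 'FAIL'} {target}")
```

A target that reports FAIL should show matching entries in `hubble observe --namespace tenant-b --verdict DROPPED` if the policy is what blocks it.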

Now submit the solution and see what happens.

New badge earned!
